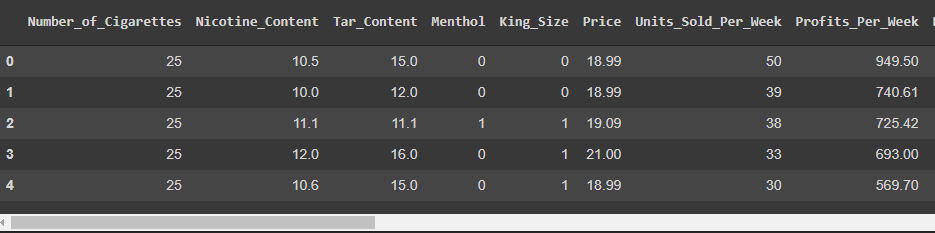

I am trying to scale some columns of my data frame with Scikit-Learn's MinMaxScaler(). The data is shown in the image above.

The columns I wish to transform:

ct_columns = ['Number_of_Cigarettes', 'Nicotine_Content', 'Tar_Content', 'Price', 'Units_Sold_Per_Week', 'Profits_Per_Week']

Pass them to a column transformer:

ct = ColumnTransformer((MinMaxScaler(), ct_columns))

Assign the input features and label, then pass them to train_test_split:

X = one_hot_cigarette_df.drop('Units_Sold_Per_Week', axis=1)

y = one_hot_cigarette_df['Units_Sold_Per_Week']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=RANDOM_STATE)

When I then call the column transformer's fit method on X_train, I get the following error:

ct.fit(X_train)

    271 return
    272
--> 273 names, transformers, _ = zip(*self.transformers)
    274
    275 # validate names

TypeError: zip argument #1 must support iteration

CodePudding user response:

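ColumnTransformer expects a list of (name, transformer, columns) tuples, not a single (transformer, columns) tuple. Replace ct = ColumnTransformer((MinMaxScaler(), ct_columns)) with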

ct = ColumnTransformer([('scaler', MinMaxScaler(), ct_columns)])

You should also remove the label name 'Units_Sold_Per_Week' from ct_columns if you plan to apply the transformer only to the feature matrix: X no longer contains that column after the drop, so the transformer cannot select it.
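As a side note, the (transformer, columns) form used in the question is very close to what the helper make_column_transformer accepts. The sketch below, on a small made-up data frame (toy_df and cols are hypothetical names, not from the question), suggests that both spellings should give the same scaled values; the helper simply generates the step name for you.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer, make_column_transformer
from sklearn.preprocessing import MinMaxScaler

# Hypothetical toy data, only for illustration
rng = np.random.default_rng(0)
cols = ['Price', 'Tar_Content']
toy_df = pd.DataFrame(rng.lognormal(1, 0.5, (20, 2)), columns=cols)

# Explicit form: a list of (name, transformer, columns) tuples
explicit_ct = ColumnTransformer([('scaler', MinMaxScaler(), cols)])

# Shorthand form: (transformer, columns) tuples, names are generated automatically
shorthand_ct = make_column_transformer((MinMaxScaler(), cols))

explicit_out = explicit_ct.fit_transform(toy_df)
shorthand_out = shorthand_ct.fit_transform(toy_df)

# Both transformers scale each selected column to the [0, 1] range
print(np.allclose(explicit_out, shorthand_out))
print(explicit_out.min(axis=0), explicit_out.max(axis=0))
```

The explicit form is usually preferable when you want to refer to the step by name later, for example in a grid search.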

import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler

np.random.seed(0)

# generate the data
df = pd.DataFrame(
    columns=['Units_Sold_Per_Week', 'Number_of_Cigarettes', 'Nicotine_Content', 'Tar_Content', 'Price', 'Profits_Per_Week'],
    data=np.random.lognormal(1, 0.5, (100, 6))
)

# extract the features and target
X = df.drop('Units_Sold_Per_Week', axis=1)
y = df['Units_Sold_Per_Week']

# split the data
X_train, X_test, Y_train, Y_test = train_test_split(X, y, random_state=100)

# scale the features
ct_columns = ['Number_of_Cigarettes', 'Nicotine_Content', 'Tar_Content', 'Price', 'Profits_Per_Week']
ct = ColumnTransformer([('scaler', MinMaxScaler(), ct_columns)])
ct.fit(X_train)

X_train_scaled = ct.transform(X_train)
X_test_scaled = ct.transform(X_test)
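One thing to watch for with the real data: the question's data frame is called one_hot_cigarette_df, which suggests it also holds one-hot columns that are not listed in ct_columns. By default ColumnTransformer drops every column it was not told to transform. A minimal sketch of the difference, assuming a hypothetical one-hot column named Brand_A (demo_df and scale_cols are also made up for illustration):

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import MinMaxScaler

# Hypothetical data: two numeric columns plus one one-hot column
rng = np.random.default_rng(0)
demo_df = pd.DataFrame({
    'Price': rng.lognormal(1, 0.5, 10),
    'Tar_Content': rng.lognormal(1, 0.5, 10),
    'Brand_A': rng.integers(0, 2, 10),  # assumed one-hot column
})
scale_cols = ['Price', 'Tar_Content']

# Default remainder='drop': unlisted columns are discarded
drop_ct = ColumnTransformer([('scaler', MinMaxScaler(), scale_cols)])

# remainder='passthrough': unlisted columns are appended unchanged
keep_ct = ColumnTransformer(
    [('scaler', MinMaxScaler(), scale_cols)],
    remainder='passthrough',
)

dropped = drop_ct.fit_transform(demo_df)
kept = keep_ct.fit_transform(demo_df)

print(dropped.shape)  # (10, 2)
print(kept.shape)     # (10, 3)
```

If the model should still see the one-hot columns, remainder='passthrough' is probably what you want; the passed-through columns come after the scaled ones in the output.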