I have several processes implemented as Azure Functions, and I need to chain them. They mainly interact with the database and process huge amounts of data, so each function can take up to an hour. Therefore I can't use HTTP triggers or ADF pipelines (the timeout is 230 s).

What approach would you recommend for this scenario: chaining a few (3 to 5) long-running functions?

I have already tried Durable Functions. They work fine, but I had to move all the functions into a single Function App, which I wanted to avoid.
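For reference, the function-chaining pattern that Durable Functions provides can be sketched with the standard library alone. This is not the `azure-functions-durable` SDK: `CallActivity`, `run` and the three step functions are hypothetical stand-ins (in the real Python SDK the orchestrator yields `context.call_activity(...)` instead). What it illustrates is why a long chain survives restarts: each completed step's result is checkpointed, and on restart the orchestrator is replayed so that finished steps are read back from history rather than executed again.

```python
from typing import Any, Callable, Dict, Generator, List

EXECUTED: List[str] = []  # records which activities actually ran (not replayed)

# Hypothetical stand-ins for the long-running DB steps.
def extract(_: Any) -> List[int]:
    EXECUTED.append("extract")
    return [1, 2, 3]

def transform(rows: List[int]) -> List[int]:
    EXECUTED.append("transform")
    return [r * 10 for r in rows]

def load(rows: List[int]) -> int:
    EXECUTED.append("load")
    return sum(rows)

ACTIVITIES: Dict[str, Callable[[Any], Any]] = {
    "extract": extract, "transform": transform, "load": load,
}

class CallActivity:
    """A request to run one activity (plays the role of context.call_activity)."""
    def __init__(self, name: str, payload: Any) -> None:
        self.name = name
        self.payload = payload

def orchestrator() -> Generator[CallActivity, Any, Any]:
    # Function chaining: each step's output is the next step's input.
    rows = yield CallActivity("extract", None)
    transformed = yield CallActivity("transform", rows)
    total = yield CallActivity("load", transformed)
    return total

def run(history: List[Any], crash_after: int = -1) -> Any:
    """Drive the orchestrator, replaying results already recorded in `history`."""
    gen = orchestrator()
    request = next(gen)
    step = 0
    while True:
        if step < len(history):
            result = history[step]  # replay: do not re-run a finished step
        else:
            if step == crash_after:
                raise RuntimeError(f"simulated host restart before step {step}")
            result = ACTIVITIES[request.name](request.payload)
            history.append(result)  # checkpoint the result before continuing
        step += 1
        try:
            request = gen.send(result)
        except StopIteration as finished:
            return finished.value
```

Calling `run(history, crash_after=2)` fails after `extract` and `transform` have completed; a second `run(history)` with the same list replays those two results and only executes `load`.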

I would also like to hear about experiences with Event Hubs or Event Grid. Is this technology suitable for such a scenario, and if so, which approach would you choose and why? Or are there any other options?

Thanks a lot for your opinions.

Answer:

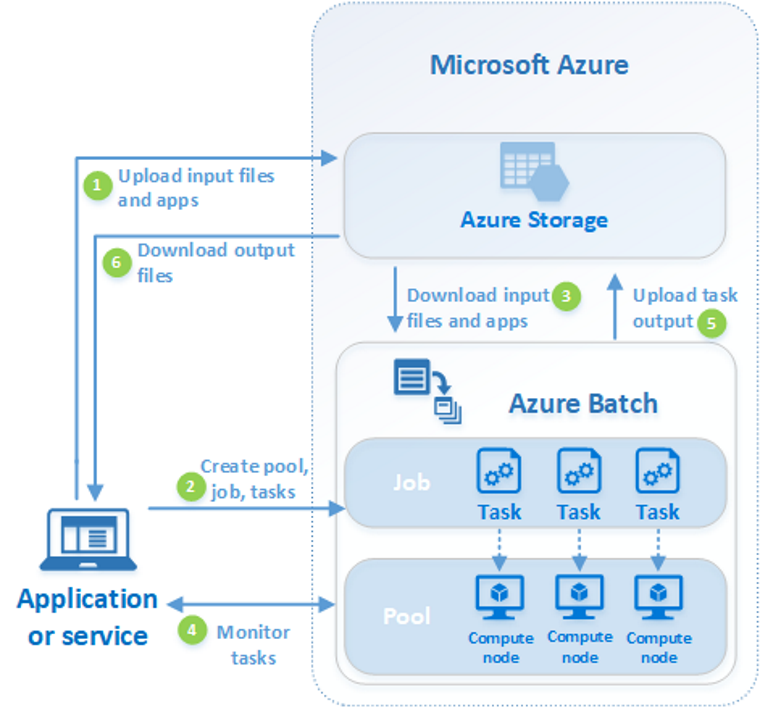

Azure Batch may be a better fit for long-running jobs.
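One way Azure Batch can express a chain is its task-dependencies feature, where a task only starts once the tasks it depends on have completed. The sketch below does not call the Batch SDK; it uses Python's `graphlib` (3.9+) to show how such a dependency map turns into an execution order. The task IDs are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical job: each task lists the IDs of the tasks it depends on.
TASKS: Dict[str, Set[str]] = {
    "import-raw": set(),
    "clean": {"import-raw"},
    "aggregate": {"clean"},
    "report": {"aggregate"},
}

def execution_waves(tasks: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; every task in a wave has all its dependencies done."""
    sorter = TopologicalSorter(tasks)
    sorter.prepare()
    waves: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are satisfied
        waves.append(ready)
        sorter.done(*ready)                 # mark them finished to unlock successors
    return waves
```

For a strict chain every wave holds a single task; with a fan-out (two tasks depending on the same parent) both land in the same wave and could run in parallel on different nodes.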

Answer:

> I would also like to hear about experiences with Event Hubs or Event Grid. Is this technology suitable for such a scenario, and if so, which approach would you choose and why? Or are there any other options?

Event Hubs is meant to be the ingestion entry point for big-data streams. (For IoT solutions, use Azure IoT Hub instead, since it supports bi-directional communication with devices.)

Event Grid, on the other hand, is essentially a publish/subscribe event broker: publishers send events to it and it routes them to the subscribers of those events.
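To make the Event Grid option concrete: an event-driven chain usually has each step publish a "completed" event, and the next step subscribes to that event type. The in-memory broker below is a hypothetical stand-in for Event Grid; the event types and the `batchId` field are made up for illustration. It dispatches synchronously, whereas real Event Grid delivers events asynchronously and retries failed deliveries, and each handler would still be bound by its own host's execution time limit.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], None]

class InMemoryBroker:
    """Toy pub/sub broker: routes each event to the subscribers of its eventType."""
    def __init__(self) -> None:
        self._subs: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subs[event_type].append(handler)

    def publish(self, event_type: str, data: Dict[str, Any]) -> None:
        for handler in self._subs[event_type]:
            handler({"eventType": event_type, "data": data})

broker = InMemoryBroker()
log: List[str] = []

def make_step(name: str, next_event: str) -> Handler:
    """Build a hypothetical step that does its work, then announces completion."""
    def step(event: Event) -> None:
        log.append(name)
        # ... long-running DB work for this step would happen here ...
        broker.publish(next_event, {"batchId": event["data"]["batchId"]})
    return step

# Wire the chain: each step listens for the previous step's completion event.
broker.subscribe("Pipeline.Started", make_step("step1", "Step1.Completed"))
broker.subscribe("Step1.Completed", make_step("step2", "Step2.Completed"))
broker.subscribe("Step2.Completed", make_step("step3", "Pipeline.Completed"))
broker.subscribe("Pipeline.Completed", lambda e: log.append("done:" + e["data"]["batchId"]))

broker.publish("Pipeline.Started", {"batchId": "b-42"})
```

After the final `publish`, `log` holds the steps in chain order followed by the completion marker.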

I think you should stay with Durable Functions. Alternatively, you could move the processing into Azure Container Instances and use a Service Bus queue or topic to signal the status of each step.
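The Service Bus variant can be sketched as a pipeline of workers, each reading from its own input queue and writing a status message to the next one. Here `queue.Queue` and threads stand in for Service Bus queues and the container instances; stage names and payloads are hypothetical. With real Service Bus, a step that runs for an hour would also need its message lock renewed (or the message settled before the work starts), since peek-lock locks expire after a few minutes.

```python
import queue
import threading
from typing import Any, Callable, Dict, List, Optional

Message = Dict[str, Any]

def worker(name: str, inbox: queue.Queue, outbox: queue.Queue,
           work: Callable[[Message], Message]) -> None:
    """Consume messages from inbox, process them, forward results to outbox."""
    while True:
        msg: Optional[Message] = inbox.get()
        if msg is None:           # shutdown signal: pass it on and stop
            outbox.put(None)
            return
        result = work(msg)        # the long-running step would run here
        result["status"] = f"{name}:done"
        outbox.put(result)        # this message triggers the next stage

# Hypothetical three-step chain; each lambda stands in for real DB work.
STAGES: List[tuple] = [
    ("step1", lambda m: {**m, "rows": 100}),
    ("step2", lambda m: {**m, "rows": m["rows"] * 2}),
    ("step3", lambda m: {**m, "loaded": True}),
]

def run_pipeline(messages: List[Message]) -> List[Message]:
    """Run all stages concurrently, each connected to the next by a queue."""
    queues = [queue.Queue() for _ in range(len(STAGES) + 1)]
    threads = [
        threading.Thread(target=worker, args=(name, queues[i], queues[i + 1], fn))
        for i, (name, fn) in enumerate(STAGES)
    ]
    for t in threads:
        t.start()
    for m in messages:
        queues[0].put(m)
    queues[0].put(None)           # no more input
    for t in threads:
        t.join()
    results: List[Message] = []
    while (item := queues[-1].get()) is not None:
        results.append(item)
    return results
```

Because each stage only knows its input and output queue, the stages can live in separate apps or containers, which is the decoupling the question is after.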