I am using PySpark to write this piece of code:

df.na.fill("").show()

# Referring to columns by name

rdd2 = df.rdd.map(lambda x:
    (x.firstName + "" + x.lastName, x.street + "," + x.town, x.city, x.code)  # error line
)

df2=rdd2.toDF(["name","address","city","code"])

df2.display()

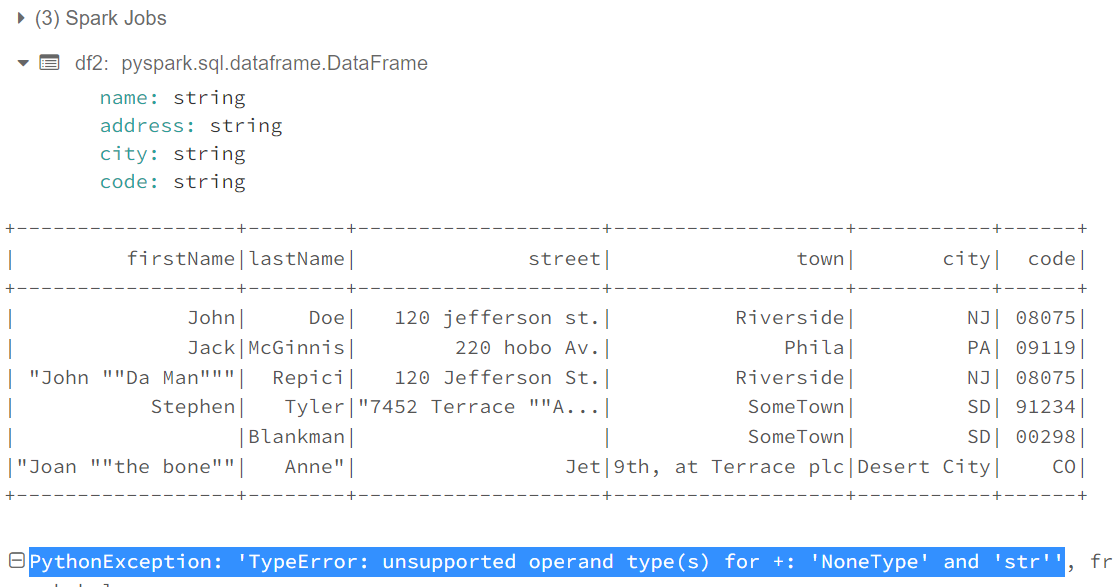

I am getting an error on line 4 (the one marked as the error line), which says:

PythonException: 'TypeError: unsupported operand type(s) for +: 'NoneType' and 'str''

This is the output I am getting for the CSV file I am working on.

I am using df.na.fill to convert the null values to empty strings, but the code still fails with the error about str and NoneType. P.S.: I am new to PySpark, so please help me understand how I can avoid this error.

CodePudding user response:

You need to assign the result back to df:

df = df.na.fill("")

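DataFrames are immutable, so df.na.fill("") returns a new DataFrame instead of modifying df in place. Without the assignment, the conversion of None to an empty string is not persisted, and the later df.rdd.map call still sees the null values.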
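Some columns may still hold nulls after the fill, because filling with a string value only replaces nulls in string columns. In that case the mapping function itself can be made None-safe. Below is a minimal sketch in plain Python. It uses a namedtuple as a hypothetical stand-in for a Spark Row so that it runs without a cluster. The names `safe` and `to_record` and the sample row are made up for illustration and are not part of PySpark or of the original data.

```python
from collections import namedtuple

# Hypothetical stand-in for a Spark Row; field names follow the question.
Row = namedtuple("Row", ["firstName", "lastName", "street", "town", "city", "code"])


def safe(value):
    """Return "" for None so that string concatenation cannot fail."""
    return "" if value is None else str(value)


def to_record(x):
    """Build (name, address, city, code) from one row, tolerating None fields."""
    return (
        safe(x.firstName) + "" + safe(x.lastName),
        safe(x.street) + "," + safe(x.town),
        x.city,
        x.code,
    )


# Made-up sample row with a few missing values.
row = Row("Ada", None, "1 Main St", None, "London", None)

# An unguarded concatenation, as in the question, fails on a None field.
try:
    row.lastName + "" + row.firstName
    raised = False
except TypeError:
    raised = True

record = to_record(row)
print(raised, record)
```

With a real DataFrame, the same function could presumably be passed straight to the RDD, as in df.rdd.map(to_record), in place of the lambda.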