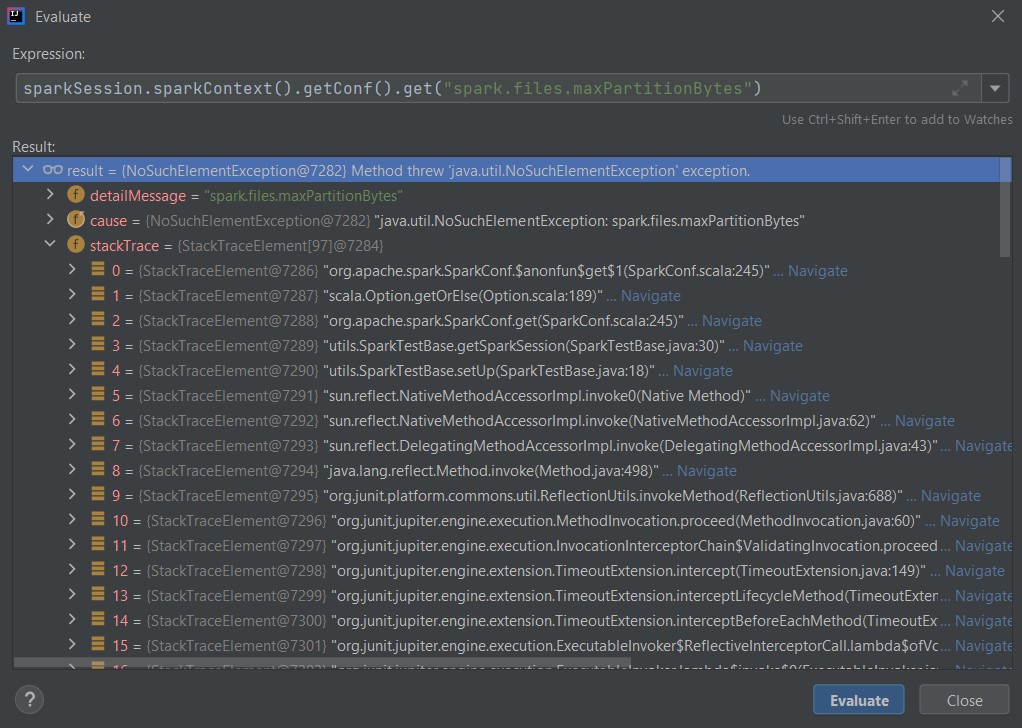

When I try to do this:

```java
import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaSparkContext;
import org.apache.spark.sql.SparkSession;

SparkConf conf = new SparkConf().setAppName("SparkUnitTest").setMaster("local[*]");

SparkSession sparkSession = SparkSession
        .builder()
        .config(conf)
        .getOrCreate();

JavaSparkContext sparkContext = JavaSparkContext.fromSparkContext(sparkSession.sparkContext());

sparkContext.getConf().get("spark.files.maxPartitionBytes");
```

I am receiving this error:

java.util.NoSuchElementException: spark.files.maxPartitionBytes

Answer:

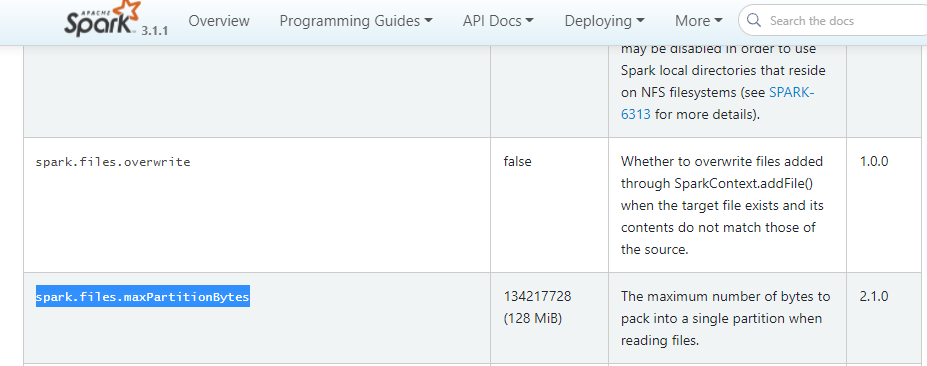

The configuration property you are looking for is:

spark.sql.files.maxPartitionBytes

And not:

spark.files.maxPartitionBytes

See the Spark SQL Performance Tuning guide for more details.
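A minimal sketch, assuming Spark 3.x on the classpath and a local master, of reading the SQL property through the session's runtime configuration (`SparkSession.conf()`) rather than through `SparkConf`. For registered `spark.sql.*` entries, the runtime configuration falls back to the built-in default instead of throwing. The class name `MaxPartitionBytesCheck` and the helper `readMaxPartitionBytes` are hypothetical, chosen for illustration.

```java
import org.apache.spark.SparkConf;
import org.apache.spark.sql.SparkSession;

public class MaxPartitionBytesCheck {

    // Hypothetical helper: reads the SQL file-partition size from the session's
    // runtime config. For a registered spark.sql.* key that was never set,
    // this returns the built-in default rather than throwing.
    static String readMaxPartitionBytes(SparkSession session) {
        return session.conf().get("spark.sql.files.maxPartitionBytes");
    }

    public static void main(String[] args) {
        SparkConf conf = new SparkConf().setAppName("SparkUnitTest").setMaster("local[*]");
        SparkSession sparkSession = SparkSession.builder().config(conf).getOrCreate();
        try {
            System.out.println("default: " + readMaxPartitionBytes(sparkSession));

            // Overriding the value at runtime; later reads return the string that was set.
            sparkSession.conf().set("spark.sql.files.maxPartitionBytes", "64m");
            System.out.println("after set: " + readMaxPartitionBytes(sparkSession));
        } finally {
            sparkSession.stop();
        }
    }
}
```

Reading through `conf()` keeps the lookup on the SQL side, where defaults are registered; `SparkConf` only holds what was put into it.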

Answer:

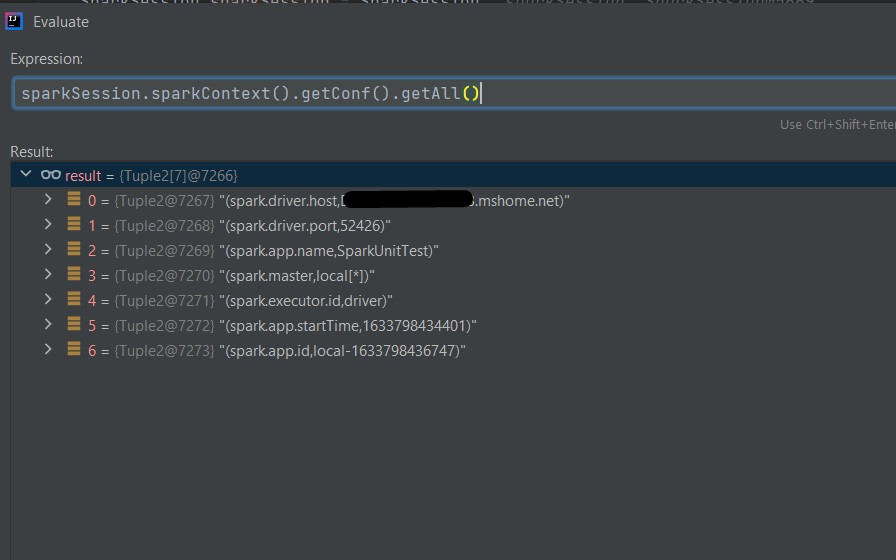

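From the "Viewing Spark Properties" section of the Spark configuration documentation:

"The application web UI at `http://<driver>:4040` lists Spark properties in the “Environment” tab. This is a useful place to check to make sure that your properties have been set correctly. Note that only values explicitly specified through spark-defaults.conf, SparkConf, or the command line will appear. For all other configuration properties, you can assume the default value is used."

`SparkConf.get(key)` behaves the same way: it only finds keys that were set explicitly (or by Spark itself at startup) and throws `java.util.NoSuchElementException` for anything else, even when the property has a default.

https://spark.apache.org/docs/3.1.1/configuration.html#spark-properties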
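A minimal sketch, assuming Spark 3.x and a local master, of inspecting what the `SparkConf` actually contains and of looking a key up without triggering the exception. It uses `SparkConf.getAll()`, `contains(key)` and `get(key, defaultValue)`. The class name `ExplicitConfDump`, the helper `getOrFallback` and the fallback text are hypothetical.

```java
import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaSparkContext;
import org.apache.spark.sql.SparkSession;
import scala.Tuple2;

public class ExplicitConfDump {

    // Hypothetical helper: returns the caller's fallback when the key is absent
    // from the SparkConf, instead of throwing NoSuchElementException.
    static String getOrFallback(SparkConf conf, String key, String fallback) {
        return conf.get(key, fallback);
    }

    public static void main(String[] args) {
        SparkConf conf = new SparkConf().setAppName("SparkUnitTest").setMaster("local[*]");
        SparkSession sparkSession = SparkSession.builder().config(conf).getOrCreate();
        JavaSparkContext sparkContext = JavaSparkContext.fromSparkContext(sparkSession.sparkContext());
        try {
            SparkConf effective = sparkContext.getConf();

            // Lists only keys present in the SparkConf (set explicitly or by Spark at
            // startup); properties that rely on their defaults do not appear here.
            for (Tuple2<String, String> entry : effective.getAll()) {
                System.out.println(entry._1() + " = " + entry._2());
            }

            String key = "spark.files.maxPartitionBytes";
            System.out.println("explicitly set? " + effective.contains(key));
            System.out.println(key + " = " + getOrFallback(effective, key, "<not set, default applies>"));
        } finally {
            sparkSession.stop();
        }
    }
}
```

Checking with `contains` or passing a fallback to `get` makes the "not explicitly set" case visible in the code instead of surfacing as an exception.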