Hi all, I have this image with a white overlay on part of it. I am trying to equalize the color, in other words remove the white overlay so the whole image has one consistent tone. I am new to image processing and thought I might extract a color channel and then apply histogram equalization to it. Would that work? What would be the best approach? Thanks!

CodePudding user response:

Here is a simple attempt to match the mean of the inner region with that of the outer region. It does not work terribly well because it is a global change and does not take into account brightness variation across the image. But you can play around with it to start.

It takes a mask image and computes the means of the inner and outer regions. It then takes the difference between the two means and subtracts it from the inner region.

Input:

import cv2

import numpy as np

# load image

img = cv2.imread('writer.jpg', cv2.IMREAD_GRAYSCALE)

# rectangle coordinates

x = 61

y = 8

w = 663

h = 401

# create mask for inner area

mask = np.zeros_like(img, dtype=np.uint8)

mask[y:y+h, x:x+w] = 255

# compute means of inner rectangle region and outer region

mean_inner = np.mean(img[np.where(mask == 255)])

mean_outer = np.mean(img[np.where(mask == 0)])

# compute difference in mean values

bias = 0

diff = mean_inner - mean_outer + bias

# print mean of each

print("mean of inner region:", mean_inner)

print("mean of outer region:", mean_outer)

print("difference:", diff)

# subtract diff from img

img_diff = cv2.subtract(img, diff)

# blend with original using mask

result = np.where(mask==255, img_diff, img)

# save resulting masked image

cv2.imwrite('writer_balanced.jpg', result)

# show results

cv2.imshow("IMAGE", img)

cv2.imshow("MASK", mask)

cv2.imshow("RESULT", result)

cv2.waitKey(0)

cv2.destroyAllWindows()

mean of inner region: 195.44008004122423

mean of outer region: 154.1415758021116

difference: 41.298504239112646

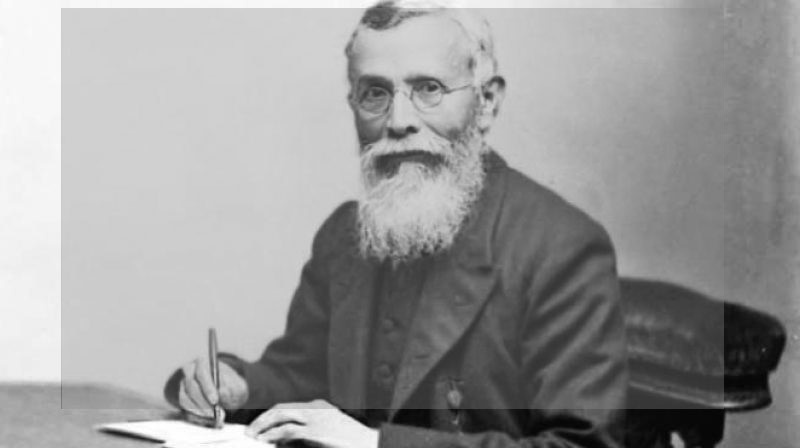

Result:

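You can change the bias to make the inner region lighter or darker overall. Because the bias is added to the difference that gets subtracted, a positive bias darkens the inner region and a negative bias lightens it.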
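To try the effect of the bias without loading a file, here is a minimal sketch of the same mean-matching step on a synthetic array. The function name `match_inner_mean` and the test image are made up for illustration, and it uses only NumPy, so the clipping that `cv2.subtract` does for you is done by hand here.

```python
import numpy as np

def match_inner_mean(img, mask, bias=0.0):
    """Shift the masked region so its mean matches the unmasked region's mean.

    img  : 2-D uint8 grayscale image
    mask : 2-D bool array, True inside the region to correct
    bias : added to the shift, following diff = mean_inner - mean_outer + bias,
           so a positive bias darkens the inner region
    """
    mean_inner = img[mask].mean()
    mean_outer = img[~mask].mean()
    diff = mean_inner - mean_outer + bias
    # work in float, then clip back to the valid 8-bit range
    shifted = np.clip(img.astype(np.float64) - diff, 0, 255)
    result = img.copy()
    result[mask] = np.round(shifted[mask]).astype(np.uint8)
    return result

# synthetic example: background of 100 with a brightened rectangle of 150
img = np.full((20, 30), 100, dtype=np.uint8)
mask = np.zeros(img.shape, dtype=bool)
mask[5:15, 5:25] = True
img[mask] = 150

balanced = match_inner_mean(img, mask)          # inner mean pulled down to the outer mean
darker = match_inner_mean(img, mask, bias=10)   # inner region 10 levels darker than that
```

Like the code above, this is a single global shift, so it shares the same limitation with uneven brightness.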

CodePudding user response:

Here's my best attempt:

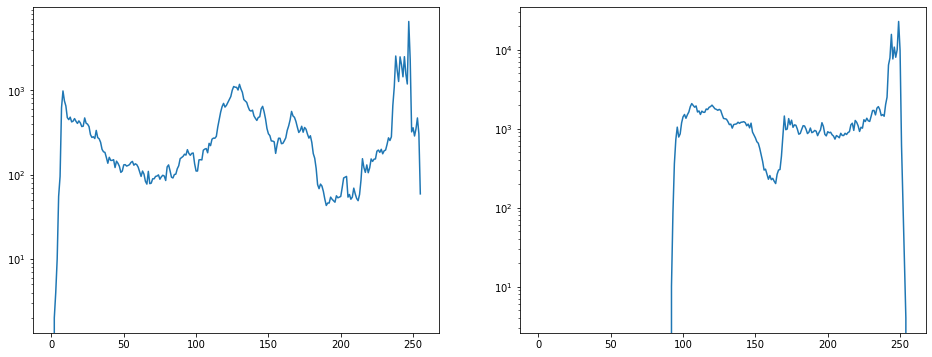

Grayscale histograms of the reference area, and the area to fix:

You see, the blending with white caused the histogram to be squeezed and moved rightward. An original value of 255 was mapped to 255, but the darker the original value, the more it is brightened. The mask area contains samples of presumably black (the backrest) that are also black in the untouched reference area, so we can estimate what's going on... original black was mapped to a grayscale value of ~88.
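That squeeze-and-shift is what a plain alpha blend with white would produce. The following is a sketch under that assumption (the exact blend used on the image is not known; `alpha` and the helper names are introduced here for illustration). With overlay opacity `alpha`, a value `v` becomes `v * (1 - alpha) + 255 * alpha`, so 255 stays at 255 and 0 lands at `255 * alpha`. An observed black level of about 88 would then correspond to an opacity of roughly 88 / 255.

```python
import numpy as np

def blend_with_white(v, alpha):
    """Assumed model: alpha-blend grayscale values v with white (255)."""
    return v * (1.0 - alpha) + 255.0 * alpha

def undo_blend(v_blended, alpha):
    """Invert the assumed blend; only valid for 0 <= alpha < 1."""
    return (v_blended - 255.0 * alpha) / (1.0 - alpha)

# estimate the opacity from where original black ended up (~88 per the histogram)
observed_black = 88.0
alpha = observed_black / 255.0

original = np.array([0.0, 64.0, 128.0, 255.0])
blended = blend_with_white(original, alpha)   # darker values are lifted the most
recovered = undo_blend(blended, alpha)        # matches original up to float rounding
```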

I find the minima in both spectra and use those for a linear mapping:

refmax = 255

refmin = gray[~mask].min()

fixmax = 255

fixmin = gray[mask].min()

composite = im.copy()

composite[mask] = np.clip((composite[mask] - fixmin) / (fixmax - fixmin) * (refmax - refmin) + refmin, 0, 255)

And that's it.
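The snippet above assumes `im`, `gray`, and `mask` already exist. As a self-contained sketch, here is the same linear mapping run on synthetic data. The array contents and the function name `stretch_masked` are illustrative assumptions, `mask` is taken to be a boolean array that is True where the overlay is, and the values are cast to float first so that subtracting `fixmin` from 8-bit data cannot wrap around.

```python
import numpy as np

def stretch_masked(im, gray, mask):
    """Linearly remap the masked region so its darkest gray value matches the
    darkest gray value of the unmasked reference region; 255 stays at 255."""
    refmax, fixmax = 255.0, 255.0
    refmin = float(gray[~mask].min())
    fixmin = float(gray[mask].min())
    composite = im.copy()
    vals = composite[mask].astype(np.float64)
    vals = (vals - fixmin) / (fixmax - fixmin) * (refmax - refmin) + refmin
    composite[mask] = np.clip(np.round(vals), 0, 255).astype(np.uint8)
    return composite

# synthetic grayscale image: a 0..255 ramp repeated twice side by side
clean = np.tile(np.linspace(0, 255, 16), (4, 2)).astype(np.uint8)

# wash out the right half with an assumed white blend (black ends up at 88)
alpha = 88 / 255
mask = np.zeros(clean.shape, dtype=bool)
mask[:, 16:] = True
washed = clean.copy()
washed[mask] = np.round(clean[mask] * (1 - alpha) + 255 * alpha).astype(np.uint8)

# the image is already grayscale here, so it serves as both im and gray
fixed = stretch_masked(washed, washed, mask)
```

On this toy input the corrected half comes back to within one gray level of the clean ramp. Real photos will do worse, since the method relies on both regions containing true black.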