I have a Dockerfile:

FROM apache/airflow:2.1.2-python3.8

ENV PYTHONPATH "${PYTHONPATH}:/"

ADD ./aws/credentials /home/airflow/.aws/credentials

ADD ./aws/config /home/airflow/.aws/config

RUN pip install -r requirements.pip

And a docker-compose.yml:

version: '3'
services:
  webserver:
    image: airflow2
    command: webserver
    ports:
      - 8080:8080
    healthcheck:
      test: [ "CMD", "curl", "--fail", "http://localhost:8080/health" ]
      interval: 10s
      timeout: 10s
      retries: 5
    restart: always
    build:
      context: .
      dockerfile: Dockerfile
    env_file:
      - ./airflow.env
    container_name: webserver
    volumes:
      - ./database_utils:/database_utils
  scheduler:
    image: sch-airflow2
    command: scheduler
    healthcheck:
      test: [ "CMD-SHELL", 'airflow jobs check --job-type SchedulerJob --hostname "$${HOSTNAME}"' ]
      interval: 10s
      timeout: 10s
      retries: 5
    restart: always
    container_name: scheduler
    build:
      context: .
      dockerfile: Dockerfile
    env_file:
      - ./airflow.env
    volumes:
      - ./database_utils:/database_utils
    depends_on:
      - webserver

When I run a script that uses boto3 to connect to S3, it works fine. The issue is that I am baking the credentials into the image, which is bad practice. So I deleted this line from the Dockerfile:

ADD ./aws/credentials /home/airflow/.aws/credentials

And I added this to the volumes section of docker-compose.yml:

- ./aws:/home/airflow/.aws

If I run the script now it fails with:

botocore.exceptions.InvalidConfigError: The source profile "default" must have credentials.

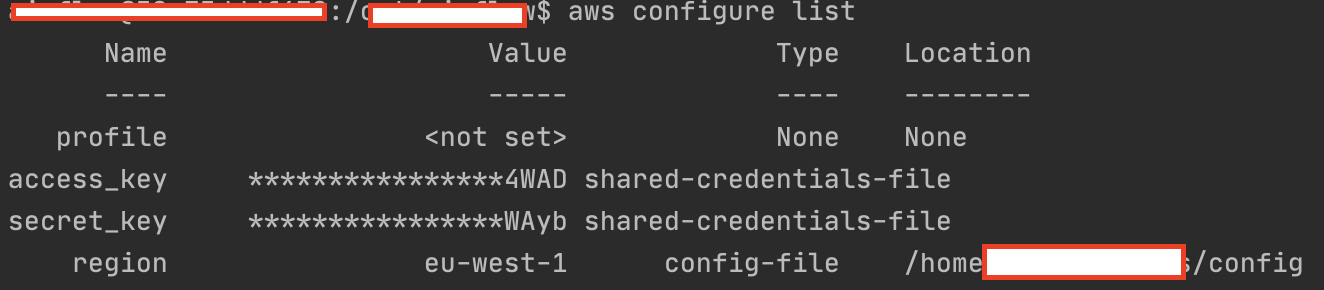

I understand the error, and I checked that the credentials are available inside the Docker container:

aws configure list

The output looks fine, so I don't understand why the error is raised.

I also tried setting the environment variables in ./airflow.env:

AWS_ACCESS_KEY_ID=XXXXXXXXX

AWS_SECRET_ACCESS_KEY=XXXXXXXXXXXX

The only way I can get it to work is with the ADD in the Dockerfile.
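One way to confirm that the variables from ./airflow.env actually reach the process inside the container is to list the AWS-related environment variables from Python. The following is only a minimal sketch: the function name `summarize_aws_env` is hypothetical, and it reports what the process sees rather than what boto3 ends up using.

```python
import os


def summarize_aws_env(environ=None):
    """Return the AWS_* variables visible to this process, hiding secrets."""
    environ = os.environ if environ is None else environ
    summary = {}
    for name in sorted(environ):
        if not name.startswith("AWS_"):
            continue
        value = environ[name]
        # Never print secrets in full; show only their length.
        if "SECRET" in name or "TOKEN" in name:
            value = f"<hidden, {len(value)} chars>"
        summary[name] = value
    return summary


if __name__ == "__main__":
    for key, val in summarize_aws_env().items():
        print(f"{key}={val}")
```

Running this inside the webserver or scheduler container shows whether AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, or AWS_PROFILE are set for that process.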

CodePudding user response:

Something somewhere is specifying the default profile to be used.

This can happen because:

- You specify --profile default as a command line parameter

- You have an environment variable of AWS_PROFILE with value of default

- Your ~/.aws/credentials contains [default]

- Your ~/.aws/config file contains [default]

The above, ordered in terms of precedence, tell AWS to use the default profile.

Double-check the default profile is not being referenced in any of the above (most likely your config or credentials file).
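The check described above can be sketched with the standard library alone. This is an illustrative helper, not part of boto3 or the AWS CLI: the name `find_profile_problems` is hypothetical, and it assumes the usual INI layout in which the config file names non-default profiles "profile <name>" while the credentials file uses just "<name>". It reports an AWS_PROFILE variable and any profile whose source_profile has no access key in either file.

```python
import configparser
import os


def find_profile_problems(config_path, credentials_path, environ=None):
    """Return human-readable findings about AWS profile settings."""
    environ = os.environ if environ is None else environ
    findings = []

    # An AWS_PROFILE variable selects a profile explicitly.
    if "AWS_PROFILE" in environ:
        findings.append(f"AWS_PROFILE is set to {environ['AWS_PROFILE']!r}")

    # read() silently skips files that do not exist.
    config = configparser.ConfigParser(interpolation=None)
    config.read(config_path)
    credentials = configparser.ConfigParser(interpolation=None)
    credentials.read(credentials_path)

    for section in config.sections():
        source = config.get(section, "source_profile", fallback=None)
        if source is None:
            continue
        # Section naming differs between the two files (assumed layout).
        config_section = source if source == "default" else f"profile {source}"
        has_keys = credentials.has_option(source, "aws_access_key_id") or \
            config.has_option(config_section, "aws_access_key_id")
        if not has_keys:
            findings.append(
                f"[{section}] uses source_profile {source!r}, "
                f"but no access key was found for it"
            )
    return findings
```

Inside the container it could be called as `find_profile_problems("/home/airflow/.aws/config", "/home/airflow/.aws/credentials")`; an empty list means none of these particular issues were detected.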

CodePudding user response:

One possible reason is that, as explained when describing ADD in the Docker documentation:

All new files and directories are created with a UID and GID of 0, unless the optional --chown flag specifies a given username, groupname, or UID/GID combination to request specific ownership of the content added.

whereas when you configure a host directory as a volume the file permissions of the host are preserved. Maybe the AWS SDK takes this fact into account when looking for a valid set of credentials by matching UID/GID.
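To see whether ownership or permissions differ between the ADD and the volume setups, the mounted files can be inspected from inside the container. The sketch below is only a diagnostic under that assumption; the function name `describe_access` is hypothetical, and it does not show how the AWS SDK itself treats ownership.

```python
import os
import stat


def describe_access(path):
    """Summarise who owns `path` and whether the current user can read it."""
    info = os.stat(path)
    return {
        "path": path,
        "owner_uid": info.st_uid,
        "owner_gid": info.st_gid,
        # Symbolic mode string, e.g. "-rw-------"
        "mode": stat.filemode(info.st_mode),
        "current_uid": os.getuid(),
        "readable": os.access(path, os.R_OK),
    }
```

Calling `describe_access("/home/airflow/.aws/credentials")` as the user that runs the script shows whether that user can read the file at all. If "readable" is False, the host file permissions are a likely place to look.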

Another possible reason may be that the AWS SDK performs some kind of initialization when the software is installed. You can verify this by running pip before the following instruction in your Dockerfile:

ADD ./aws/credentials /home/airflow/.aws/credentials

and seeing whether the software can still find the appropriate set of credentials.