I have the following issue. I am trying to scrape some information from a website. After a search query I get multiple results, and I have to click on each result and copy some information out of it. The problem is that I can't (and don't want to) use static XPaths; they seem to break after a few searches.

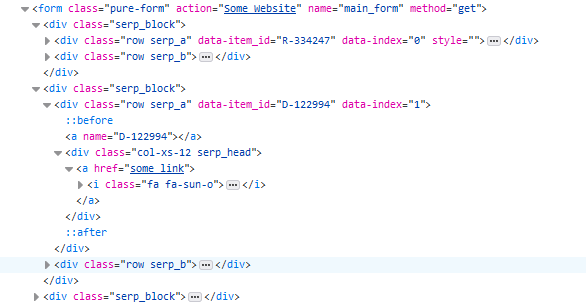

Each child block has a "data-index" attribute. How can I specifically select the href underneath data-index="1" here?

I tried a few things but I came to no conclusion.

CodePudding user response:

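You can use the XPath

//div[@data-index='1']

to locate the web element like this:

from selenium.webdriver.common.by import By

# Selenium 4.3 removed find_element_by_xpath; use find_element(By.XPATH, ...) instead
first_element = driver.find_element(By.XPATH, "//div[@data-index='1']")

Now first_element represents the block with data-index 1.

You can then call find_element on this first_element web element, which limits the search to its descendants:

# The leading "." makes the XPath relative to first_element
href_link = first_element.find_element(By.XPATH, ".//descendant::a[@href]").get_attribute('href')

print(href_link)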
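The two-step lookup (find the block, then search only inside it) can be tried without a browser. The following is a minimal sketch that uses Python's standard xml.etree.ElementTree, which supports the XPath subset needed here. The SAMPLE markup, its URLs, and the helper name href_for_index are assumptions made for illustration; the real page structure is only visible in the screenshot.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup standing in for the search results page.
SAMPLE = """
<div class="serp_block">
  <div data-index="0"><div><a href="https://example.com/first">First</a></div></div>
  <div data-index="1"><div><a href="https://example.com/second">Second</a></div></div>
</div>
"""


def href_for_index(root, index):
    """Return the first href under the block with the given data-index, or None."""
    # Step 1: locate the block by its attribute rather than by its position.
    block = root.find(f".//div[@data-index='{index}']")
    if block is None:
        return None
    # Step 2: search only inside that block (the path is relative to it).
    link = block.find(".//a[@href]")
    return None if link is None else link.get("href")


root = ET.fromstring(SAMPLE)
print(href_for_index(root, 1))  # prints https://example.com/second
```

With Selenium the same two steps map onto driver.find_element and first_element.find_element, as in the answer above.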

CodePudding user response:

You need to loop over the div elements with class "row serp_a":

from selenium.webdriver.common.by import By

for row in driver.find_elements(By.XPATH, "//div[@class='serp_block']/div[@class='row serp_a']"):
    # Search relative to the current row, so no index counter is needed
    url = row.find_element(By.XPATH, "./div/a").get_attribute('href')
    print(url)

It will print the URL under each of the div blocks you want. Is this helpful?
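Since the question says every block carries a data-index, the loop can also key on that attribute instead of the class names. Below is a minimal sketch under that assumption. The function name collect_links is hypothetical, and the locator strategy is written as the plain string "xpath" (the value of Selenium's By.XPATH) so that the sketch itself needs no imports.

```python
XPATH = "xpath"  # same value as selenium.webdriver.common.by.By.XPATH


def collect_links(driver):
    """Map each block's data-index to the href of the first link inside it."""
    links = {}
    # Match every block that has a data-index attribute, whatever its value.
    for block in driver.find_elements(XPATH, "//div[@data-index]"):
        # find_elements returns an empty list instead of raising when nothing matches.
        anchors = block.find_elements(XPATH, ".//a[@href]")
        if anchors:
            links[block.get_attribute("data-index")] = anchors[0].get_attribute("href")
    return links
```

Calling collect_links(driver) after the search results have loaded returns a dictionary, so the link for the second block would be looked up with links.get("1"). Blocks without a link are skipped rather than raising an exception.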