I have a large number of CSV data files that are located in many different subdirectories. The files all have the same name and are differentiated by the subdirectory name.

I'm trying to find a way to import them all into R in such a way that the subdirectory name for each file populates a column in the resulting data.

I have generated a list of the files using list.files(), which I've called tto_refs.

CodePudding user response:

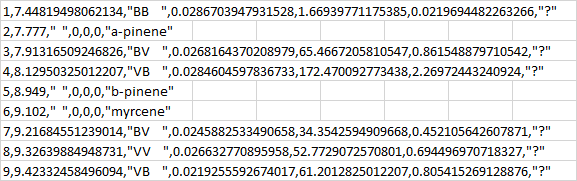

Your files are in the UTF-16 (or UCS-2) character encoding. This means that each character here is represented by two bytes. Because the data only contain ASCII characters, the second byte of each character is 0.

Because R is expecting a single-byte-per-character encoding, it thinks the second byte is meant to be a null character, which should not be present in a CSV file.
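To see the byte layout described above, here is a minimal sketch that builds a tiny sample by hand and dumps its raw bytes. It assumes a little-endian file, as Windows tools usually write, and the sample content `a,b` is made up for illustration; your files may differ.

```shell
# Build a tiny UTF-16LE sample by hand: a byte-order mark (FF FE),
# then the text "a,b" plus a newline, two bytes per character.
sample=$(mktemp)
{
    printf '\377\376'                              # byte-order mark, little-endian
    printf 'a,b\n' | iconv -f UTF-8 -t UTF-16LE    # ASCII widened to 2 bytes each
} >"$sample"

# Dump the raw bytes in hex. Each ASCII character is followed by a 00 byte,
# which is what a single-byte reader takes for an embedded null.
od -An -tx1 "$sample"
```

Running the same `od` command on one of your own files should show the same pattern if the diagnosis is right.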

In addition, the files contain a byte-order mark at the start of the first line, which is being converted to garbage. You need a UTF-16 to UTF-8 converter program. This should also remove the byte-order mark (which is not required in UTF-8).
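If you want to check whether a given file needs converting, one rough approach is to look at its first two bytes. The sketch below is only a heuristic, and the function names `first_two_bytes` and `has_utf16_bom` are hypothetical helpers, not part of any tool. Note that a UTF-32 little-endian file would also start with `ff fe`, and that `head -c` is widely available but not strictly POSIX.

```shell
# Print the first two bytes of a file as hex, e.g. "fffe".
first_two_bytes() {
    head -c 2 "$1" | od -An -tx1 | tr -d ' \n'
}

# Succeed if the file starts with a UTF-16 byte-order mark.
# FF FE marks little-endian, FE FF marks big-endian.
has_utf16_bom() {
    case "$(first_two_bytes "$1")" in
        fffe|feff) return 0 ;;
        *)         return 1 ;;
    esac
}
```

For example, `has_utf16_bom data.CSV && echo "needs converting"` would flag a file before conversion, and the same check on the converted output should no longer match.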

Personally I would do this using the tool iconv. If I were using Windows I would use Cygwin to install it.

    for f in *.CSV
    do
        iconv -f UTF-16 -t UTF-8 <"$f" >"${f%.CSV}-utf8.csv"
    done

If you don't like this approach there are several other tools listed as answers to this question.
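Since the files in the question are spread over many subdirectories, the loop would otherwise have to be run in each one. Here is a sketch of a recursive variant using `find`. The function name `convert_tree` is made up, it assumes the files end in `.CSV` as in the loop, and it will not cope with file names that contain newlines.

```shell
# Convert every *.CSV file under a root directory (default: current directory).
# Each converted copy is written next to its original with a -utf8.csv suffix,
# so the subdirectory name is still available from the path.
convert_tree() {
    root=${1:-.}
    find "$root" -type f -name '*.CSV' | while IFS= read -r f; do
        iconv -f UTF-16 -t UTF-8 <"$f" >"${f%.CSV}-utf8.csv"
    done
}
```

Calling `convert_tree .` from the top-level directory leaves the originals untouched. On a case-sensitive file system the lowercase `.csv` outputs are not picked up again by the `*.CSV` pattern.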