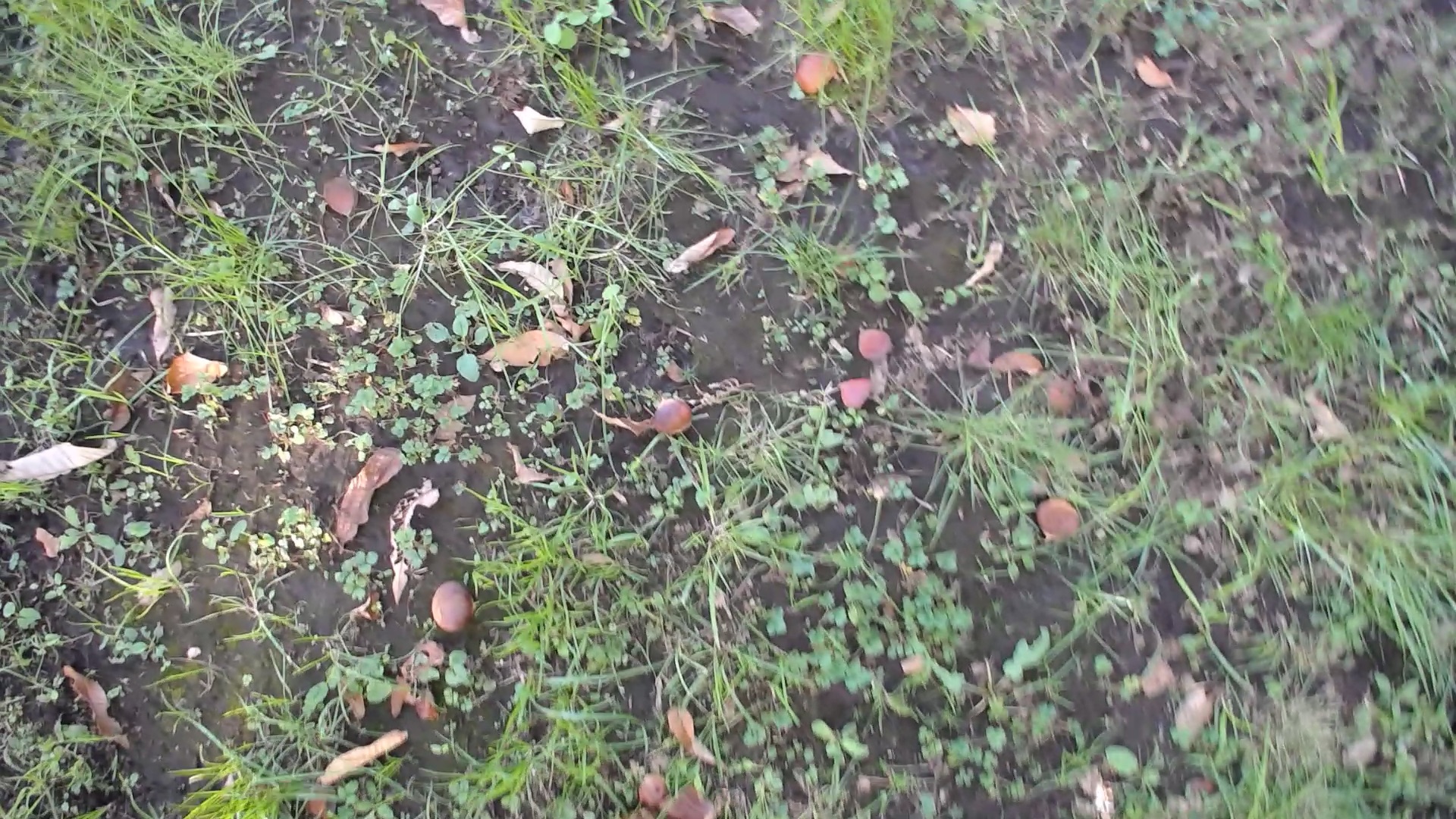

I have some images of the ground of an orchard, which look like these:

Now, I want to extract the region containing a chestnut from image1.png and then paste it onto image2.png.

So I made a mask image with Adobe Photoshop, and the result (exported as mask.png) looks like this:

You can see the selected region fits the edge of the chestnut tightly.

However, when I read the exported mask image with Python, the selected region did not fit the edge anymore. It was a rectangle that looked like this:

This is how I read the images and generated result.png:

import numpy as np

from PIL import Image

import matplotlib.pyplot as plt

# read image1.png

image1 = np.array(Image.open('image1.png').convert('RGB'))

# read mask.png

mask = np.array(Image.open('mask.png').convert('RGB'))

# Boolean indices representing the selected region

region = np.all(mask != 255, axis=-1)

# "region" has a shape of (height, width)

# so we have to add an axis corresponding to color channels

region = np.dstack([region, region, region])

plt.imshow(np.where(region, image1, 255))

plt.axis('off')

plt.savefig('result.png', bbox_inches='tight')

Is there a tool that lets me use the mask image as it is (so that the result looks like mask.png rather than result.png)?

CodePudding user response:

You can try OpenCV for Python. Technically, your mask.png is an RGBA image, where RGB stands for red, green, and blue, and A stands for alpha (transparency). When you called np.array(Image.open('mask.png').convert('RGB')), you discarded the alpha channel from the image.

import numpy as np

import cv2

import matplotlib.pyplot as plt

# IMREAD_UNCHANGED keeps the alpha channel instead of dropping it

mask = cv2.imread('mask.png', cv2.IMREAD_UNCHANGED)

cv2.imwrite('result.png', mask)
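
If you would rather stay with PIL and NumPy, the same idea can be sketched as below: keep the alpha channel and use it as the selection instead of comparing RGB values against white. This is only a sketch under some assumptions: the helper name paste_with_alpha_mask is made up for illustration, all three images are assumed to have the same size, and pixels with non-zero alpha are assumed to be the selected ones (flip the comparison if your mask is exported the other way round).

```python
import numpy as np
from PIL import Image


def paste_with_alpha_mask(source, mask_rgba, target):
    """Copy the pixels of `source` selected by the mask's alpha channel onto `target`.

    Hypothetical helper: assumes all three PIL images share the same size.
    """
    src = np.asarray(source.convert('RGB'))
    dst = np.asarray(target.convert('RGB'))
    # Keep the alpha channel; converting to 'RGB' would throw it away.
    alpha = np.asarray(mask_rgba.convert('RGBA'))[..., 3]
    # Assumption: non-transparent pixels mark the selected region.
    region = alpha > 0
    # Add a trailing axis so the (height, width) mask broadcasts over the channels.
    out = np.where(region[..., None], src, dst)
    return Image.fromarray(out.astype(np.uint8))


# Usage with the file names from the question:
# result = paste_with_alpha_mask(Image.open('image1.png'),
#                                Image.open('mask.png'),
#                                Image.open('image2.png'))
# result.save('result.png')
```

Saving with Image.save instead of plt.savefig also keeps the result at the original pixel size.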