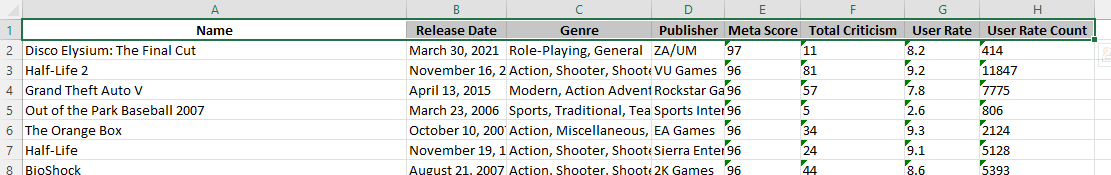

I'm trying to build a dataset of specific Metacritic game data. First, one script collects the URLs of all games and exports them to a .csv file. A second script then scrapes each URL and writes all the data to an .xlsx file.

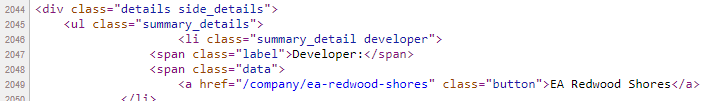

Now I need to modify the script so it can also get the developer data in addition to the publisher.

Should it be something like this?

    developer = soup.find('div', class_="details side_details").find('span', class_="label")

This is what I'm seeing on Metacritic:

Script for getting links:

    import urllib.request
    import csv
    import os
    import time
    from bs4 import BeautifulSoup
    from user_agent import generate_user_agent

    # Start from a clean link file on every run
    filepath = 'gamelinks.csv'
    file_exists = os.path.isfile(filepath)
    if file_exists:
        os.remove(filepath)

    metacritic_base = "http://www.metacritic.com/browse/games/release-date/available/pc/metascore?view=detailed&page="
    hdr = {'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8', 'User-Agent': generate_user_agent(device_type="desktop", os=('mac', 'linux', 'win'))}

    page_start = 0
    page_end = 40

    for i in range(page_start, page_end):
        print("Scraping Page {} - {} Pages Left".format(i, page_end - (i + 1)))

        # Fetch and parse one listing page
        links = []
        metacritic = metacritic_base + str(i)
        page = urllib.request.Request(metacritic, headers=hdr)
        content = urllib.request.urlopen(page).read()
        soup = BeautifulSoup(content, 'html.parser')
        right_class = soup.find_all('div', class_='browse_list_wrapper')

        # Collect the href of every game title on the page
        for item in right_class:
            try:
                hrefs = item.find_all('a', class_="title", href=True)
                for it in hrefs:
                    link = it['href']
                    links.append(link)
            except:
                pass

        # Append this page's links to the CSV file
        with open(filepath, 'a') as output:
            writer = csv.writer(output, lineterminator='\n')
            for val in links:
                writer.writerow([val])

        time.sleep(1)

    print("Im done.")

Script for scraping:

    import urllib.request
    import csv
    import os
    from bs4 import BeautifulSoup
    from user_agent import generate_user_agent
    import json
    import time
    import xlsxwriter
    import pprint

    pp = pprint.PrettyPrinter(width=80, compact=True)

    ## Link File
    filepath = 'gamelinks.csv'
    file_exists = os.path.isfile(filepath)
    if file_exists is False:
        print("Wrong filepath.!")

    links = []
    with open(filepath, 'r') as infile:
        reader = csv.reader(infile)
        for r in reader:
            links.append(r[0])
    ## Link File

    ## Xlsx
    filepath = 'gameDataset.xlsx'
    file_exists = os.path.isfile(filepath)
    if file_exists:
        os.remove(filepath)

    workbook = xlsxwriter.Workbook('gameDataset.xlsx')
    worksheet = workbook.add_worksheet()
    worksheet.write(0, 0, "Name")
    worksheet.write(0, 1, "Release Date")
    worksheet.write(0, 2, "Genre")
    worksheet.write(0, 3, "Publisher")
    worksheet.write(0, 4, "Meta Score")
    worksheet.write(0, 5, "Total Criticism")
    worksheet.write(0, 6, "User Rate")
    worksheet.write(0, 7, "User Rate Count")
    row = 1
    ## Xlsx

    metacritic_base = "http://www.metacritic.com"
    hdr = {'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8', 'User-Agent': generate_user_agent(device_type="desktop", os=('mac', 'linux', 'win'))}

    count = 1
    exception_list = []

    for link in links:
        print("Scraping Game {} - {} Games Left".format(count, len(links) - count))

        metacritic = metacritic_base + link
        try:
            page = urllib.request.Request(metacritic, headers=hdr)
            content = urllib.request.urlopen(page).read()
            soup = BeautifulSoup(content, 'html.parser')

            # Most fields come from the JSON-LD block embedded in the page
            data = json.loads(soup.find('script', type='application/ld+json').text)

            # User score and rating count are only available in the HTML
            cl_count = soup.find('div', class_="userscore_wrap").find('span', class_="count")
            user_rate_count = cl_count.find('a').text.replace(' Ratings', '')
            user_rating = soup.find('div', class_="user").text

            rating_count = data['aggregateRating']['ratingCount']
            rating_value = data['aggregateRating']['ratingValue']
            date = data['datePublished']
            genre_list = data['genre']
            name = data['name']

            publishers_list = []
            publishers = data['publisher']
            for pb in publishers:
                publishers_list.append(pb['name'])

            worksheet.write(row, 0, name)
            worksheet.write(row, 1, date)
            worksheet.write(row, 2, ", ".join(genre_list))
            worksheet.write(row, 3, ", ".join(publishers_list))
            worksheet.write(row, 4, rating_value)
            worksheet.write(row, 5, rating_count)
            worksheet.write(row, 6, user_rating)
            worksheet.write(row, 7, user_rate_count)
            row += 1

            #time.sleep(2)
        except BaseException as e:
            exception_list.append("On game link {}, Error : {}".format(count, str(e)))

        count += 1

    workbook.close()

    # Write any collected errors to a separate file
    if len(exception_list) > 0:
        filepath = "exceptions"
        file_exists = os.path.isfile(filepath)
        if file_exists:
            os.remove(filepath)

        with open(filepath, 'a') as output:
            writer = csv.writer(output, lineterminator='\n')
            for e in exception_list:
                writer.writerow([e])

CodePudding user response:

Note: In new questions, please focus on one specific problem and provide just a minimal, reproducible example (MCVE).

How to select the developer?

You can use find() or CSS selectors. Select the `<a>` inside the element that has the class developer:

    soup.select_one('.developer a').text

Example

    import requests
    from bs4 import BeautifulSoup

    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.94 Safari/537.36'}

    r = requests.get('https://www.metacritic.com/game/playstation-5/farming-simulator-22', headers=headers)
    soup = BeautifulSoup(r.content, 'lxml')

    soup.select_one('.developer a').text

Output

Giants Software
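Keep in mind that `select_one` returns `None` when nothing matches, so calling `.text` on a page without a developer entry raises an `AttributeError`. Below is a minimal sketch of a guarded lookup. The helper name `get_developer` and the inline HTML are made up for illustration; the snippet only assumes the real markup has an `<a>` somewhere inside an element with the class `developer`.

```python
from bs4 import BeautifulSoup

# Hypothetical snippet imitating the developer block; the real page markup may differ.
SAMPLE_HTML = """
<ul class="summary_details">
  <li class="summary_detail developer">
    <span class="label">Developer:</span>
    <span class="data"><a href="/company/giants-software"> Giants Software </a></span>
  </li>
</ul>
"""


def get_developer(soup, default=""):
    """Return the developer name, or `default` if no matching element exists."""
    tag = soup.select_one('.developer a')
    return tag.get_text(strip=True) if tag else default


soup = BeautifulSoup(SAMPLE_HTML, 'html.parser')
developer = get_developer(soup)

# A page without the element falls back to the default instead of raising
missing = get_developer(BeautifulSoup("<div></div>", 'html.parser'), default="n/a")

print(developer)
print(missing)
```

Returning a default keeps one missing field from discarding the whole row, which is what happens when the error is only caught by the outer `try`/`except`.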

EDIT

The question is mainly about extracting the developer, but here is also a way to create your Excel file, this time with pandas.

Example

    import urllib.request
    from bs4 import BeautifulSoup
    import json
    import pandas as pd

    metacritic_base = "http://www.metacritic.com"
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.94 Safari/537.36'}

    links = ['/game/playstation-5/farming-simulator-22', '/game/playstation-5/grand-theft-auto-the-trilogy---the-definitive-edition']

    data_list = []
    exception_list = []

    for count, link in enumerate(links):
        metacritic = metacritic_base + link
        print(metacritic)

        try:
            page = urllib.request.Request(metacritic, headers=headers)
            content = urllib.request.urlopen(page).read()
            soup = BeautifulSoup(content, 'html.parser')
            data = json.loads(soup.find('script', type='application/ld+json').text)

            # One dict per game; the keys become the column names
            data_list.append({
                'Name' : data['name'],
                'Release Date' : data['datePublished'],
                'Genre' : ", ".join(data['genre']),
                'Publisher' : ", ".join([x['name'] for x in data['publisher']]),
                'Developer' : soup.select_one('.developer a').text,
                'Meta Score' : data['aggregateRating']['ratingValue'],
                'Total Criticism' : data['aggregateRating']['ratingCount'],
                'User Rates' : soup.find('div', class_="user").text,
                'User Rating Count' : soup.select_one('.userscore_wrap a').get_text(strip=True).replace(' Ratings', '')
            })
        except BaseException as e:
            exception_list.append("On game link {}, Error : {}".format(count, str(e)))

    # This gives you a DataFrame; remove the # before .to_excel(...) to write the Excel file
    pd.DataFrame(data_list)#.to_excel('gameDataset.xlsx', index=False)

Output excel file

| Name | Release Date | Genre | Publisher | Developer | Meta Score | Total Criticism | User Rates | User Rating Count |
|---|---|---|---|---|---|---|---|---|
| Farming Simulator 22 | November 22, 2021 | Simulation, General | Giants Software, Solutions 2 GO | Giants Software | 78 | 4 | 6.8 | 6.8 |
| Grand Theft Auto: The Trilogy - The Definitive Edition | November 11, 2021 | Miscellaneous, Compilation | Rockstar Games | Rockstar Games | 56 | 38 | 0.9 | 0.9 |
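
The JSON-LD lookup that both scripts rely on can be tried offline before hitting the site. The following is only a sketch: the function name `parse_ld_json` and the inline page fragment are assumptions for illustration, with field values borrowed from the first row of the output above. The script type must be exactly `application/ld+json`, otherwise `find` returns `None`.

```python
import json
from bs4 import BeautifulSoup

# Hypothetical page fragment; the values mirror the first row of the example output.
SAMPLE_HTML = """
<html><head>
<script type="application/ld+json">
{"name": "Farming Simulator 22",
 "datePublished": "November 22, 2021",
 "genre": ["Simulation", "General"],
 "publisher": [{"name": "Giants Software"}, {"name": "Solutions 2 GO"}]}
</script>
</head></html>
"""


def parse_ld_json(html):
    """Return the parsed JSON-LD block of a page, or None if the page has none."""
    soup = BeautifulSoup(html, 'html.parser')
    script = soup.find('script', type='application/ld+json')
    if script is None:
        return None
    return json.loads(script.string)


data = parse_ld_json(SAMPLE_HTML)
publishers = ", ".join(p['name'] for p in data['publisher'])
genres = ", ".join(data['genre'])

print(data['name'], "|", genres, "|", publishers)
```

Checking for `None` here turns a missing block into an explicit case, instead of an `AttributeError` that only shows up in the exception list.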