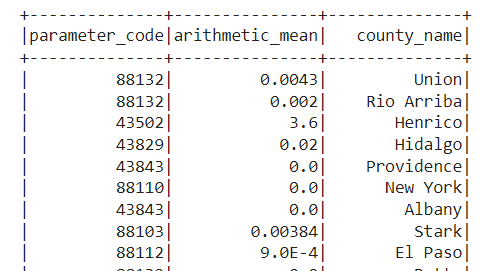

I'm currently using pyspark in a google colab notebook and after doing some data cleaning I finished with a dataframe that has: parameter code; county name ; arithmetic mean for each day

i need to have a table that gives for each parameter the name of the county with highest value and lowest value

After doing this groupby i ended up getting what i wanted (have for each parameter code and county name the value for the highest value) but only for the max value, i also need for the min value

county_param_value_small_grouped_parameter_code = county_param_value_small.groupby('parameter_code','county_name').max('arithmetic_mean').orderBy("parameter_code","max(arithmetic_mean)",ascending=False).show(10)

What I wanted to end up with would be a table with 2 county name and 2 values for each parameter code, being highest value and lowest value. But I am having a lot of trouble manipulating this because it always returns an object, i have to make all the conditions in one go and have a .show() in the end.

CodePudding user response:

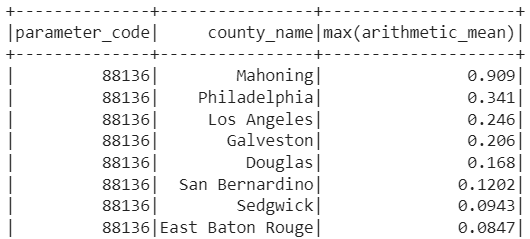

One solution to the problem is to use window functions to attach for every row having the same parameter_code the max and min arithmetic_mean across the group. Finally filter rows where the arithmetic_mean is equal to the min or max value of the group.

from pyspark.sql import functions as F

from pyspark.sql import Window as W

data = [("1", "IN", 10, ), ("1", "NL", 20, ), ("1", "DE", 15, ), ("2", "US", 100, ), ("2", "BE", 200, ), ("2", "FR", 150, )]

df = spark.createDataFrame(data, ("parameter_code", "county_name", "arithmetic_mean", ))

window_spec = W.partitionBy("parameter_code")

df.withColumn("max_arithmetic_mean", F.max("arithmetic_mean").over(window_spec))\

.withColumn("min_arithmetic_mean", F.min("arithmetic_mean").over(window_spec))\

.filter((F.col("arithmetic_mean") == F.col("max_arithmetic_mean")) | (F.col("arithmetic_mean") == F.col("min_arithmetic_mean")))\

.select("parameter_code", "county_name", "arithmetic_mean").show()

Output

-------------- ----------- ---------------

|parameter_code|county_name|arithmetic_mean|

-------------- ----------- ---------------

| 1| IN| 10|

| 1| NL| 20|

| 2| US| 100|

| 2| BE| 200|

-------------- ----------- ---------------