I'm running an ETL pipeline through Google Cloud Data Fusion. A quick summary of the pipeline's actions:

- Take in a CSV file containing a list of names

- Take in a table from bigquery-public-data

- Join the two together and then output the results to a table

- Also output the results to a Group By, where it consolidates duplicates and sums their scores.

- Output the resulting list of author names and scores to both a table and a CSV file in a Google Cloud Storage bucket.
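
In case it helps, the join and Group By steps above are roughly equivalent to the following plain-Python sketch. This is only an illustration of the logic, not the Data Fusion configuration itself; the column names (`name`, `author`, `score`) and the sample rows are made up for the example.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample inputs. The real pipeline reads a CSV of names
# and a table from bigquery-public-data; these values are invented.
names_csv = "name\nalice\nbob\n"
table_rows = [
    {"author": "alice", "score": 3},
    {"author": "bob", "score": 5},
    {"author": "alice", "score": 4},
    {"author": "carol", "score": 9},
]


def join_and_rank(names_text, rows):
    """Inner-join rows on the name list, then sum scores per author."""
    names = {r["name"] for r in csv.DictReader(io.StringIO(names_text))}
    # Join step: keep only rows whose author appears in the names file.
    joined = [r for r in rows if r["author"] in names]
    # Group By step: consolidate duplicate authors and sum their scores.
    totals = defaultdict(int)
    for r in joined:
        totals[r["author"]] += r["score"]
    return joined, dict(totals)


joined, totals = join_and_rank(names_csv, table_rows)
```

Here `joined` corresponds to the first output table and `totals` to the author rankings that go to the second table and the CSV file.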

All of this appears to be working properly: the two tables contain the correct data and are queryable.

However, the CSV output from the Group By arrives in the GCS bucket as 37 separate part files, each with a default name ("part-r-00000" to "part-r-00036"). They do appear to be in CSV format (both text/csv and application/csv have resulted in usable CSV files).

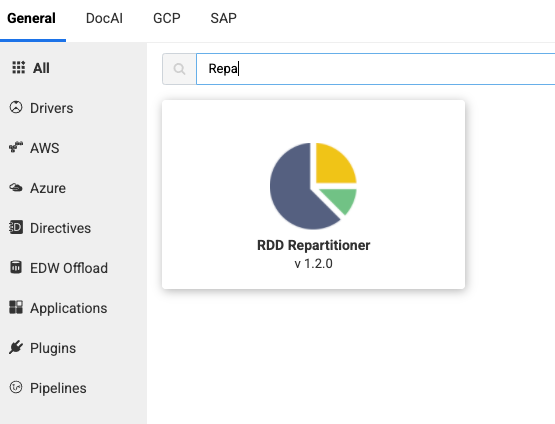

I want the output to be exported to the GCS bucket folder as a single CSV file with a given name (author_rankings.csv). I'm attaching a screenshot of the pipeline and an image of some of the output. Please let me know if I can provide any additional information.
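
To make the goal concrete, the result I'm after is equivalent to concatenating the part files in name order into one file. The sketch below does that for local copies of the parts; it is not a Data Fusion setting, `merge_parts` is a hypothetical helper name, and it assumes the parts contain no header rows.

```python
import glob
import os
import shutil


def merge_parts(part_dir, out_path):
    """Concatenate part-r-* files from part_dir into out_path, in name order.

    Assumes each part is plain CSV data without a header row, so simple
    byte-for-byte concatenation yields a valid CSV file.
    Returns the number of part files merged.
    """
    parts = sorted(glob.glob(os.path.join(part_dir, "part-r-*")))
    with open(out_path, "wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                shutil.copyfileobj(src, out)
    return len(parts)


# Example usage (paths are placeholders):
# merge_parts("downloaded_parts", "author_rankings.csv")
```

Ideally the pipeline itself would produce this single file in the bucket, so that no post-processing step like this is needed.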

Thank you for any insight.

Thanks and Regards,

Sagar