Context

I have three services, each of which generates a JSON payload (and each takes a different amount of time to do so). All three payloads are needed to build a single combined payload, which is then sent to another Kafka topic so that it can be consumed by another service.

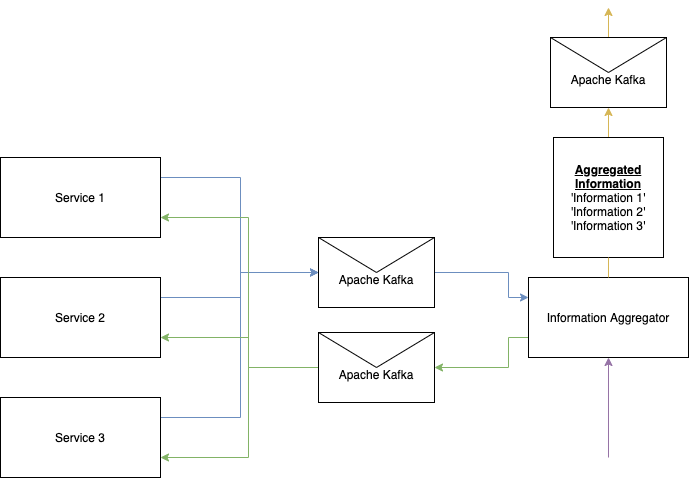

The diagram above illustrates the problem at hand. The Information Aggregator service receives a request to aggregate information and sends that request to a Kafka topic. Service 1, Service 2 and Service 3 consume that request and each send their data (a JSON payload) to their own Kafka topic, so there are 3 different response topics.

The Information Aggregator has to consume the messages from the three services so that it can generate a final payload (represented as Aggregated Information) and send it to another Kafka topic. The messages reach their respective topics at very different times, e.g. Service 1 takes half an hour to respond while Service 2 and Service 3 take under 10 minutes.

Research

After researching Kafka and Kafka Streams at length, I came across this article, which provides some great insights into how this could be designed.

In this article, the author consumes messages from a single topic. In my use case I must consume from three different topics and wait until a message with a given ID has arrived on each of them. Only then can I signal my process to consume the 3 messages with that ID from the different topics, generate the final message and send it to another Kafka topic (from which another service will consume it).

Thought Out Solution

My thinking is that I need a Kafka Stream watching all three topics. When it sees that all 3 messages for an ID are available, it sends a message to a Kafka topic called e.g. TopicEvents, which the Information Aggregator consumes. From that message the Information Aggregator knows exactly which messages to fetch from which topic, partition and offset, and can then send the final payload to another Kafka topic.

Questions

Am I misusing Kafka Streams and batch processing here?

How can I signal a Stream that all of the messages have arrived, so that it can place a message in TopicEvents telling the Information Aggregator that the messages in the different topics are ready to be consumed?

Sorry for the long post. Any pointers you can provide would be very helpful. Thank you in advance.

CodePudding user response:

> How can I signal a Stream that all of the messages have arrived

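You can do this using Kafka Streams and joins. Each join takes exactly two inputs, so you'll need to do 2 joins to get the event where all 3 have occurred. Keep in mind that stream-stream joins are windowed, so the join window would have to cover the slowest service (about half an hour in your case).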

Join TopicA and TopicB to get the event when A and B have occurred. Join AB with TopicC to get the event where A, B and C occur.
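
To make the two-step join concrete, here is a minimal sketch in plain Java. It is not the Kafka Streams API: it only simulates the join logic in memory, assuming every message carries the shared ID as its key. The class names `TwoStepJoinSketch` and `KeyedJoin`, the `aggregate` method and the comma-joined output format are made up for illustration. It also ignores windowing, partitioning and persistence, which a real topology would have to handle.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiConsumer;

final class TwoStepJoinSketch {

    // In-memory stand-in for an inner join of two keyed streams: it buffers
    // each side by ID and emits once both sides hold a value for that ID.
    static final class KeyedJoin {
        private final Map<String, String> left = new HashMap<>();
        private final Map<String, String> right = new HashMap<>();
        private final BiConsumer<String, String> downstream;

        KeyedJoin(BiConsumer<String, String> downstream) {
            this.downstream = downstream;
        }

        void onLeft(String id, String payload) {
            left.put(id, payload);
            emitIfComplete(id);
        }

        void onRight(String id, String payload) {
            right.put(id, payload);
            emitIfComplete(id);
        }

        private void emitIfComplete(String id) {
            if (left.containsKey(id) && right.containsKey(id)) {
                // Combine both payloads and drop them from the buffers.
                downstream.accept(id, left.remove(id) + "," + right.remove(id));
            }
        }
    }

    // Each event is {topic, id, payload}. Returns one entry per ID for which
    // all three topics have delivered a message.
    static List<String> aggregate(List<String[]> events) {
        List<String> output = new ArrayList<>();
        // Second join: (A joined with B) joined with TopicC.
        KeyedJoin abWithC = new KeyedJoin((id, abc) -> output.add(id + "=[" + abc + "]"));
        // First join: TopicA joined with TopicB, feeding the second join.
        KeyedJoin aWithB = new KeyedJoin(abWithC::onLeft);
        for (String[] e : events) {
            switch (e[0]) {
                case "TopicA": aWithB.onLeft(e[1], e[2]); break;
                case "TopicB": aWithB.onRight(e[1], e[2]); break;
                case "TopicC": abWithC.onRight(e[1], e[2]); break;
                default: throw new IllegalArgumentException("unknown topic " + e[0]);
            }
        }
        return output;
    }

    public static void main(String[] args) {
        List<String[]> events = new ArrayList<>();
        events.add(new String[] {"TopicB", "42", "b"});
        events.add(new String[] {"TopicC", "42", "c"});
        events.add(new String[] {"TopicA", "7", "a7"});  // ID 7 never completes
        events.add(new String[] {"TopicA", "42", "a"});  // slowest service arrives last
        System.out.println(aggregate(events));           // prints [42=[a,b,c]]
    }
}
```

Nothing is emitted for an ID until the last of its three messages arrives, whatever the arrival order. That is the "all messages have arrived" signal you are asking about, and the joined record can go straight to the output topic instead of a pointer message.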