I have a Delta dataframe containing multiple columns and rows.

I did the following:

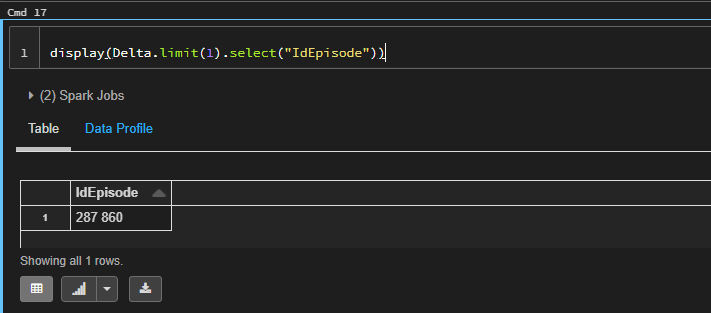

Delta.limit(1).select("IdEpisode").show()

+---------+

|IdEpisode|

+---------+

|  287 860|

+---------+

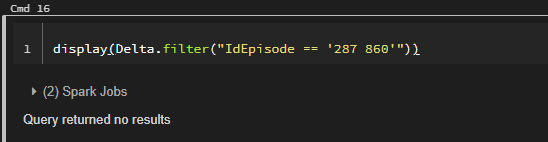

But then, when I do this:

Delta.filter("IdEpisode == '287 860'").show()

It returns 0 rows, which is weird because we can clearly see that Id in the dataframe.

I figured it was about the ' ' in the middle, but I don't see why it would be a problem or how to fix it.

IMPORTANT EDIT:

Doing Delta.limit(1).select("IdEpisode").collect()[0][0]

returned: '287\xa0860'

And then doing:

Delta.filter("IdEpisode == '287\xa0860'").show()

returned the rows I've been looking for. Is there an explanation?

CodePudding user response:

This character is called NO-BREAK SPACE (U+00A0). It is not a regular space (U+0020), which is why your filter does not match it.
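One way to confirm this without Spark is to inspect the string in plain Python. This is only a minimal sketch: the variable names are illustrative, and the value is the one returned by collect() in the question.

```python
import unicodedata

value = "287\xa0860"   # value returned by collect() in the question
typed = "287 860"      # what the space bar produces (U+0020)

# The two strings look identical when printed but are not equal.
print(value == typed)

# Name every non-digit character to see what the separator really is.
for ch in value:
    if not ch.isdigit():
        print(f"U+{ord(ch):04X}", unicodedata.name(ch))

# Replacing the no-break space with a regular space makes them equal.
print(value.replace("\xa0", " ") == typed)
```

The first comparison is False and the last one is True, which mirrors what the two filter calls did.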

You can replace it with a regular space using the regexp_replace function before applying the filter:

Delta = spark.createDataFrame([('287\xa0860',)], ['IdEpisode'])

Delta.filter("regexp_replace(IdEpisode, '[\\u00A0]', ' ') = '287 860'").show()

# +---------+

# |IdEpisode|

# +---------+

# |  287 860|

# +---------+
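If the column might also contain other invisible space variants (for example NARROW NO-BREAK SPACE, U+202F, which some locales use as a thousands separator), a more general normalization may help. The sketch below is plain Python and the helper name normalize_spaces is hypothetical; to use it in Spark it would have to be wrapped in a UDF or translated into an equivalent regexp_replace pattern.

```python
import unicodedata

def normalize_spaces(text: str) -> str:
    """Replace every Unicode space separator (category Zs) with U+0020."""
    return "".join(
        " " if unicodedata.category(ch) == "Zs" else ch
        for ch in text
    )

# Both the no-break space and the narrow no-break space become a regular space.
print(normalize_spaces("287\xa0860") == "287 860")
print(normalize_spaces("287\u202f860") == "287 860")
```

Normalizing once when the data is loaded, rather than inside every filter, would keep later comparisons simple.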