Im using a Postgres DB via PGAdmin 4.13

For the sake of performance, I'm trying to run two separate selects, and then do an inner join on them. However, it appears to be running every row against every other row, and I'm returning a table that exponentially too large (literally 1000x bigger than either query alone returns.)

First query returns 4320 rows:

SELECT * FROM schema_name.table_one WHERE resultset_name LIKE 'My test results%'

Second query returns 1080 rows:

SELECT * FROM schema_name.table_two WHERE resultset_name LIKE 'My test results%'

Join returns 518000 rows :D

SELECT *

FROM

(SELECT * FROM schema_name.table_one WHERE resultset_name LIKE 'My test results%') t1

INNER JOIN

(SELECT * FROM schema_name.table_two WHERE resultset_name LIKE 'My test results%') t2

ON t1.resultset_name = t2.resultset_name

Attempting to run a more traditional inner or left join runs forever...

SELECT *

FROM schema_name.table_two

INNER JOIN schema_name.table_one

ON schema_name.table_two.resultset_name = schema_name.table_one.resultset_name

WHERE schema_name.table_two.resultset_name LIKE 'My test results%'

NOTE: That the WHERE schema_name.table_two.resultset_name LIKE 'My test results%' requires the schema_name.table_two. to be in the WHERE clause, otherwise it errors on an ambiguous column name.

QUESTION 1: Why is the JOIN from two subselects returning so many rows? My intention was to slim down memory use - by returning two smaller tables - and then joining only the data in those tables. Can the query be corrected to do this?

QUESTION 2: If my initial join of two subselects cannot work the way I want it to, how can I filter down the memory use for the traditional join?

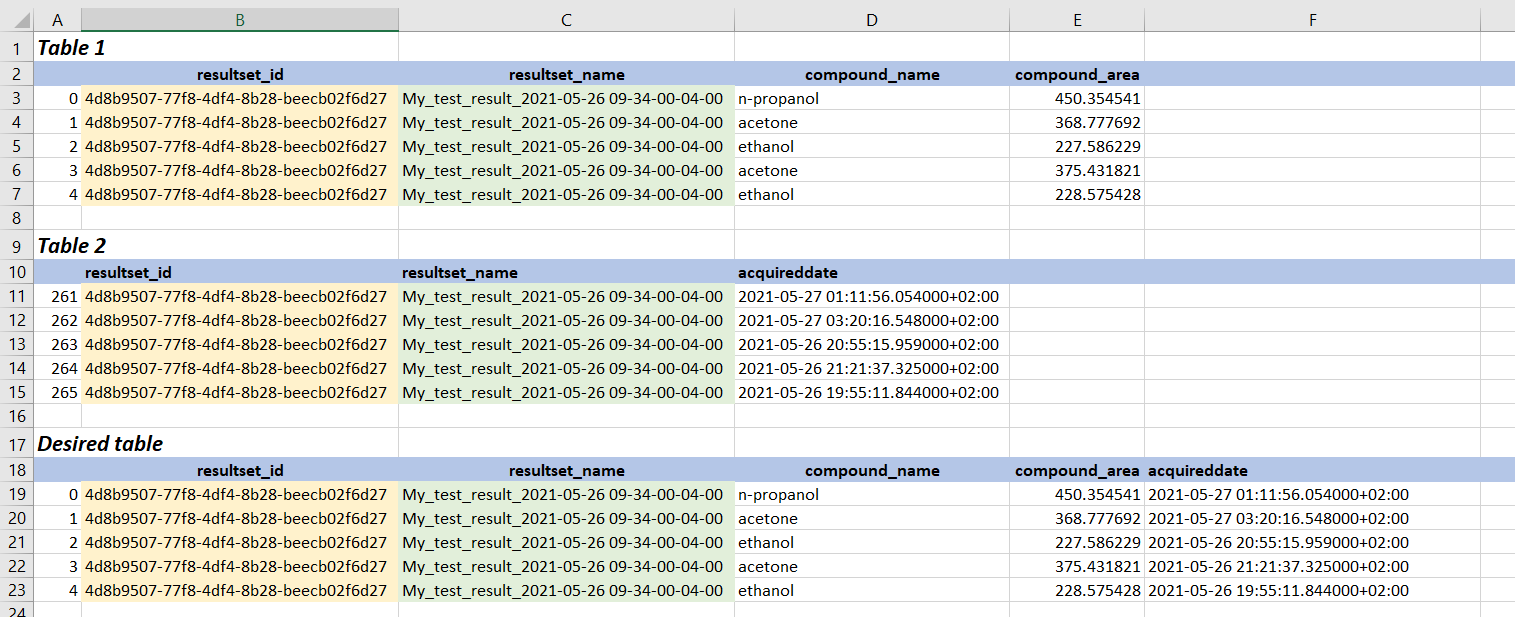

EDIT: For additional clarity

CodePudding user response:

QUESTION 1: you are joining via resultset_name. I suspect that a lot of rows have the same value in this column. If you have 10 rows in Table_one with resultset_name='My test results 1', and table_two has 20 rows with the same value then the result will have 10x20=200 rows for the same resultset_name.

QUESTION 2: Most database systems do this optimisation themselves (without you having to restructure/rearrange your query). I guess it is because of Q1 that it uses lots of resources.

Are you certain that the join condition is t1.resultset_name = t2.resultset_name?

If you can't confirm that this is the case, do some profiling of the resultset_name to find out how many unique values in how many rows, and if the scenario I mentioned in Q1 applies.

Edit: if table_two has the same date for every row for the same resultset_name (or if you are interested on any one of them), then you can reduce table_two two one row per resultset_name before joining to table_one, as follows:

SELECT *

FROM

schema_name.table_one t1

INNER JOIN

( SELECT t2a.resultset_name,

min(t2a.date_of_experiment) as date_of_experiment

FROM schema_name.table_two t2a

WHERE t2a.resultset_name LIKE 'My test results%'

GROUP BY t2a.resultset_name) t2

ON t1.resultset_name = t2.resultset_name

AND t1.resultset_name LIKE 'My test results%'