When stress-testing a TCP server of mine with a large number of connections, I noticed that connection attempts start throwing a SocketException after some time. The exception randomly displays either

Only one usage of each socket address (protocol/network address/port) is normally permitted.

or

No connection could be made because the target machine actively refused it.

as its message.

This usually happens, at a seemingly random point, after a few seconds and some tens of thousands of connects and disconnects. To connect, I use the local end point IPEndPoint clientEndPoint = new(IPAddress.Any, 0);, which I believe gives me the next free ephemeral port.

To isolate the issue, I wrote this simple program that runs both a TCP server and many parallel clients for a simple counter:

    using System.Diagnostics;
    using System.Net;
    using System.Net.Sockets;

    CancellationTokenSource cancellationTokenSource = new();
    CancellationToken cancellationToken = cancellationTokenSource.Token;

    const int serverPort = 65000;
    const int counterRequestMessage = -1;
    const int randomCounterResponseMinDelay = 10; //ms
    const int randomCounterResponseMaxDelay = 1000; //ms
    const int maxParallelCounterRequests = 10000;

    #region server

    int counterValue = 0;

    async void RunCounterServer()
    {
        TcpListener listener = new(IPAddress.Any, serverPort);
        listener.Start(maxParallelCounterRequests);
        while (!cancellationToken.IsCancellationRequested)
        {
            HandleCounterRequester(await listener.AcceptTcpClientAsync(cancellationToken));
        }
        listener.Stop();
    }

    async void HandleCounterRequester(TcpClient client)
    {
        await using NetworkStream stream = client.GetStream();
        Memory<byte> memory = new byte[sizeof(int)];

        //read requestMessage
        await stream.ReadAsync(memory, cancellationToken);
        int requestMessage = BitConverter.ToInt32(memory.Span);
        Debug.Assert(requestMessage == counterRequestMessage);

        //increment counter
        int updatedCounterValue = Interlocked.Add(ref counterValue, 1);
        Debug.Assert(BitConverter.TryWriteBytes(memory.Span, updatedCounterValue));

        //wait random timeout
        await Task.Delay(GetRandomCounterResponseDelay());

        //write back response
        await stream.WriteAsync(memory, cancellationToken);
        client.Close();
        client.Dispose();
    }

    int GetRandomCounterResponseDelay()
    {
        return Random.Shared.Next(randomCounterResponseMinDelay, randomCounterResponseMaxDelay);
    }

    RunCounterServer();

    #endregion

    IPEndPoint clientEndPoint = new(IPAddress.Any, 0);
    IPEndPoint serverEndPoint = new(IPAddress.Parse("127.0.0.1"), serverPort);
    ReaderWriterLockSlim isExceptionEncounteredLock = new(LockRecursionPolicy.NoRecursion);
    bool isExceptionEncountered = false;

    async Task RunCounterClient()
    {
        try
        {
            int counterResponse;
            using (TcpClient client = new(clientEndPoint))
            {
                await client.ConnectAsync(serverEndPoint, cancellationToken);
                await using (NetworkStream stream = client.GetStream())
                {
                    Memory<byte> memory = new byte[sizeof(int)];

                    //send counter request
                    Debug.Assert(BitConverter.TryWriteBytes(memory.Span, counterRequestMessage));
                    await stream.WriteAsync(memory, cancellationToken);

                    //read counter response
                    await stream.ReadAsync(memory, cancellationToken);
                    counterResponse = BitConverter.ToInt32(memory.Span);
                }
                client.Close();
            }

            isExceptionEncounteredLock.EnterReadLock();
            //log response if there was no exception encountered so far
            if (!isExceptionEncountered)
            {
                Console.WriteLine(counterResponse);
            }
            isExceptionEncounteredLock.ExitReadLock();
        }
        catch (SocketException exception)
        {
            bool isFirstEncounteredException = false;

            isExceptionEncounteredLock.EnterWriteLock();
            //log exception and note that one was encountered if it is the first one
            if (!isExceptionEncountered)
            {
                Console.WriteLine(exception.Message);
                isExceptionEncountered = true;
                isFirstEncounteredException = true;
            }
            isExceptionEncounteredLock.ExitWriteLock();

            //if this is the first exception encountered, rethrow it
            if (isFirstEncounteredException)
            {
                throw;
            }
        }
    }

    async void RunParallelCounterClients()
    {
        SemaphoreSlim clientSlotCount = new(maxParallelCounterRequests, maxParallelCounterRequests);

        async void RunCounterClientAndReleaseSlot()
        {
            await RunCounterClient();
            clientSlotCount.Release();
        }

        while (!cancellationToken.IsCancellationRequested)
        {
            await clientSlotCount.WaitAsync(cancellationToken);
            RunCounterClientAndReleaseSlot();
        }
    }

    RunParallelCounterClients();

    while (true)
    {
        ConsoleKeyInfo keyInfo = Console.ReadKey(true);
        if (keyInfo.Key == ConsoleKey.Escape)
        {
            cancellationTokenSource.Cancel();
            break;
        }
    }

My initial guess is that I run out of ephemeral ports because I somehow do not free them correctly. I just Close() and Dispose() my TcpClients in both my client and server code when a request is finished. I thought that would automatically release the port, but when I run netstat -ab in a console, it shows countless entries like these, even after closing the application:

TCP 127.0.0.1:65000 kubernetes:59996 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:59997 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:59998 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:59999 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:60000 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:60001 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:60002 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:60003 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:60004 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:60005 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:60006 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:60007 TIME_WAIT

TCP 127.0.0.1:65000 kubernetes:60009 TIME_WAIT

Also, my PC sometimes gets a lot of stutter some time after I exit the application. I assume this is due to Windows cleaning up my leaked port usage?

So I wonder, what am I doing wrong here?

CodePudding user response:

> Only one usage of each socket address (protocol/network address/port) is normally permitted. ... My initial guess is, that I run out of ephemeral ports because I somehow do not free them correctly.

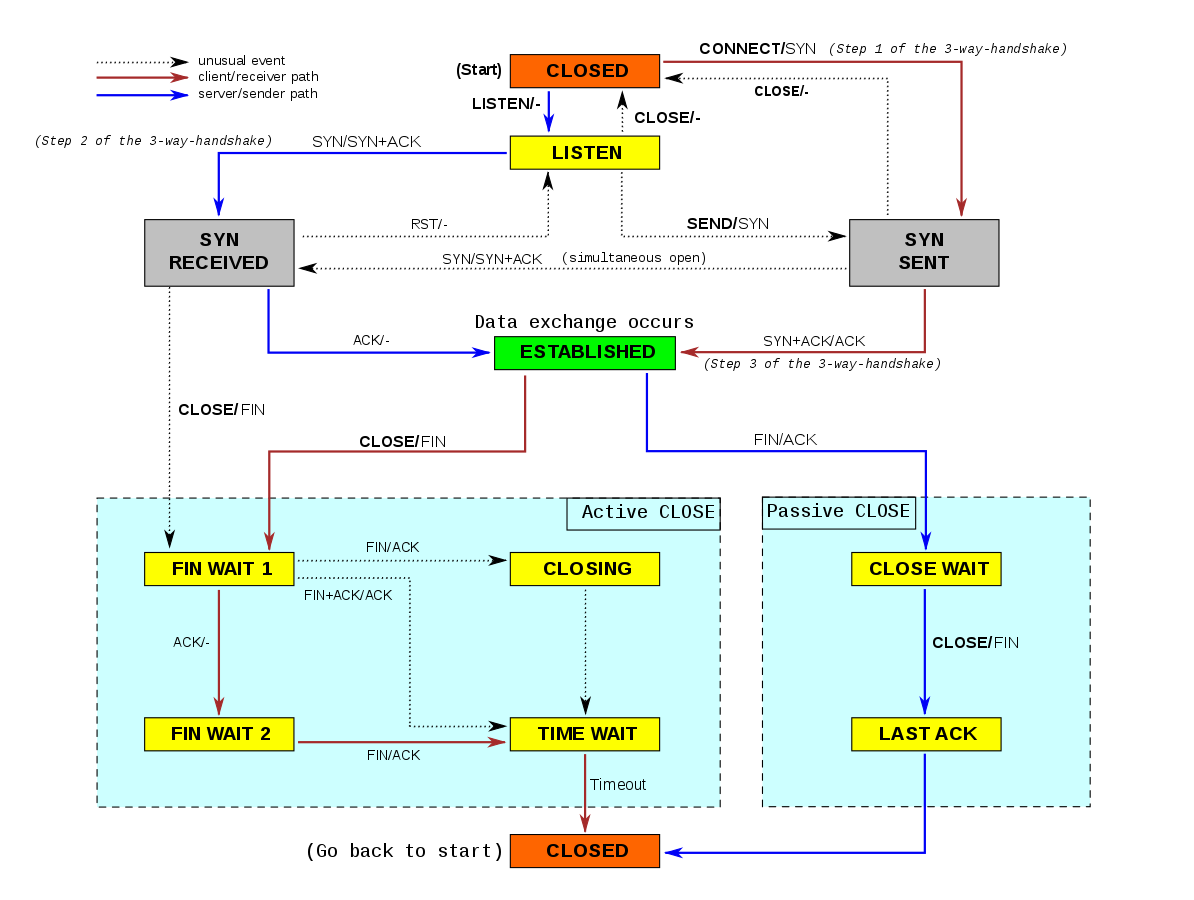

TIME_WAIT is a perfectly normal state that a TCP connection enters on the side that closes it actively, i.e. by explicitly calling close after sending the data or implicitly by exiting the application. See this diagram (source).

It takes some time to leave the TIME_WAIT state and enter CLOSED. As long as the connection is in TIME_WAIT, the specific combination of source IP, source port, destination IP and destination port cannot be used for new connections. This effectively limits the number of connections possible within a given time from one specific IP address to another specific IP address. Note that typical servers don't run into such limits, since they get connections from many different systems and only a few connections from each source IP.
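To get a feeling for that limit, here is a rough back-of-the-envelope sketch. The port range 49152-65535 (the default Windows dynamic range) and the 120 second TIME_WAIT duration are assumptions on my part, not values from the question; both are configurable, so check them on your system. The helper name MaxSustainedConnectionsPerSecond is made up for this illustration.

```csharp
using System;

// Assumed values, not taken from the question: the default Windows dynamic
// port range and a TIME_WAIT duration of 120 seconds. Both can differ per system.
const int firstEphemeralPort = 49152;
const int lastEphemeralPort = 65535;
const double assumedTimeWaitSeconds = 120;

// Every closed connection keeps its (source IP, source port, destination IP,
// destination port) tuple blocked for the TIME_WAIT duration. With a fixed
// destination, the sustainable rate is therefore roughly ports / duration.
double MaxSustainedConnectionsPerSecond(int firstPort, int lastPort, double timeWaitSeconds)
{
    int portCount = lastPort - firstPort + 1;
    return portCount / timeWaitSeconds;
}

int availablePorts = lastEphemeralPort - firstEphemeralPort + 1;
double rate = MaxSustainedConnectionsPerSecond(firstEphemeralPort, lastEphemeralPort, assumedTimeWaitSeconds);

Console.WriteLine($"Ephemeral ports available: {availablePorts}");
Console.WriteLine($"Sustainable new connections per second to one endpoint: {rate:F1}");
```

Under these assumptions that is 16384 ports and somewhere around 136 new connections per second, which a test that performs tens of thousands of connects within seconds exceeds by a wide margin.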

There is not much one can do about this except not actively closing the connection. If the other side initiates the close first (by sending FIN) and one merely completes it (ACKing the FIN and sending one's own FIN), then no TIME_WAIT will happen on one's own side. Of course, in your specific scenario of a single client and a single server, this will just shift the problem to the server.
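A minimal sketch of such a passive close on the client, assuming a loopback listener on an OS-chosen port and a fixed demo response of 42 (neither is from the question; ReadFullyAsync, ServeOneAsync and RequestAsync are hypothetical helper names). The client reads until the stream reports end-of-stream, which happens once the server's FIN has arrived, and only then disposes its own socket.

```csharp
using System;
using System.IO;
using System.Net;
using System.Net.Sockets;
using System.Threading.Tasks;

// Demo listener on the loopback interface; port 0 lets the OS pick a free port.
TcpListener listener = new(IPAddress.Loopback, 0);
listener.Start();
IPEndPoint serverEndPoint = (IPEndPoint)listener.LocalEndpoint;

// Reads until the buffer is full; returns false if the peer closed early.
async Task<bool> ReadFullyAsync(NetworkStream stream, byte[] buffer)
{
    int total = 0;
    while (total < buffer.Length)
    {
        int read = await stream.ReadAsync(buffer, total, buffer.Length - total);
        if (read == 0) return false;
        total += read;
    }
    return true;
}

// Server: answer one request, then close first (active close).
async Task ServeOneAsync()
{
    using TcpClient peer = await listener.AcceptTcpClientAsync();
    NetworkStream stream = peer.GetStream();
    byte[] request = new byte[sizeof(int)];
    await ReadFullyAsync(stream, request);
    byte[] reply = BitConverter.GetBytes(42); // fixed demo value
    await stream.WriteAsync(reply, 0, reply.Length);
    // Leaving this method disposes 'peer', so the server sends its FIN first.
}

// Client: read the response, then wait for the server's FIN before closing.
async Task<int> RequestAsync()
{
    using TcpClient client = new();
    await client.ConnectAsync(serverEndPoint.Address, serverEndPoint.Port);
    NetworkStream stream = client.GetStream();
    byte[] buffer = BitConverter.GetBytes(-1);
    await stream.WriteAsync(buffer, 0, buffer.Length);
    if (!await ReadFullyAsync(stream, buffer))
        throw new EndOfStreamException("Server closed before sending a full response.");
    int value = BitConverter.ToInt32(buffer, 0);

    // A read that returns 0 means the peer has closed its side.
    byte[] scratch = new byte[1];
    while (await stream.ReadAsync(scratch, 0, scratch.Length) != 0) { }
    return value; // 'client' is disposed only now, as the passive closer
}

Task serverTask = ServeOneAsync();
int response = await RequestAsync();
await serverTask;
listener.Stop();
Console.WriteLine(response);
```

With this ordering the TIME_WAIT entry should end up on the server's side of the connection. When client and server share one machine, as in the question, the entries still pile up there.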

> No connection could be made because the target machine actively refused it.

This has another reason. The server does a listen on the socket and gives the intended size of the backlog (the OS will probably not use exactly this value). The OS kernel uses this backlog to accept new TCP connections, and the server then calls accept to get these accepted TCP connections. If the server calls accept less often than new connections get established, the backlog will fill up. Once the backlog is full, the server will refuse new connections, resulting in the error you see. In other words: this happens if the server is not able to keep up with the client.
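Since the two messages have different causes, it can help to tell them apart by error code rather than by message text. The sketch below maps the two SocketError values that correspond to the messages in the question; the function name DescribeConnectFailure and the wording of the descriptions are my own.

```csharp
using System;
using System.Net.Sockets;

// Maps the two errors from the question to their likely cause.
// AddressAlreadyInUse is Winsock error 10048, ConnectionRefused is 10061.
string DescribeConnectFailure(SocketError error) => error switch
{
    SocketError.AddressAlreadyInUse =>
        "local address/port not available (likely too many connections in TIME_WAIT)",
    SocketError.ConnectionRefused =>
        "server refused the connection (likely a full backlog or no listener)",
    _ => $"other socket error: {error}",
};

// In real code this would be called from a handler such as
//   catch (SocketException exception) { ... exception.SocketErrorCode ... }
Console.WriteLine(DescribeConnectFailure(SocketError.AddressAlreadyInUse));
Console.WriteLine(DescribeConnectFailure(SocketError.ConnectionRefused));
```

Logging the result per failure would show whether the stress test is mostly limited by port exhaustion on the client side or by the server's accept rate.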