I'm using PySpark's create_map function to build a map of key:value pairs. My problem is that when I add key:value pairs with string values, the pairs with float values are all converted to strings!

Does anyone know how to avoid this happening?

To reproduce my problem:

```python
import pandas as pd
import pyspark.sql.functions as F
from pyspark.sql import SparkSession

spark = SparkSession.builder.master("local").appName("test").getOrCreate()

test_df = spark.createDataFrame(
    pd.DataFrame(
        {
            "key": ["a", "a", "a"],
            "name": ["sam", "sam", "sam"],
            "cola": [10.1, 10.2, 10.3],
            "colb": [10.2, 12.1, 12.1],
        }
    )
)

test_df.withColumn("test", F.create_map(
    F.lit("a"), F.col("cola").cast("float"),
    F.lit("b"), F.col("colb").cast("float"),
    F.lit("key"), F.lit("default"),
    F.lit("name"), F.lit("ext"),
)).show()
```

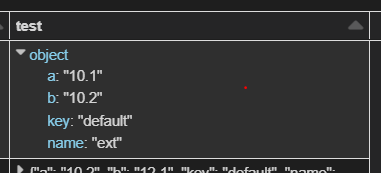

If you look inside the map that was created, the values for cola and colb are strings, not floats!

Answer:

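No, you can't. All values in a MapType column must have the same type, and the same applies to the keys. When create_map receives values of different types, Spark casts them to a common type, which in your case is string.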

From the Spark SQL data types reference:

> MapType(keyType, valueType, valueContainsNull): Represents values comprising a set of key-value pairs. The data type of keys is described by keyType and the data type of values is described by valueType.

You can use a StructType instead, since each struct field has its own type:

```python
test_df.withColumn(
    "test",
    F.struct(
        F.col("cola").cast("float").alias("a"),
        F.col("colb").cast("float").alias("b"),
        F.lit("default").alias("key"),
        F.lit("ext").alias("name"),
    ),
).printSchema()

# root
#  |-- key: string (nullable = true)
#  |-- name: string (nullable = true)
#  |-- cola: double (nullable = true)
#  |-- colb: double (nullable = true)
#  |-- test: struct (nullable = false)
#  |    |-- a: float (nullable = true)
#  |    |-- b: float (nullable = true)
#  |    |-- key: string (nullable = false)
#  |    |-- name: string (nullable = false)
```