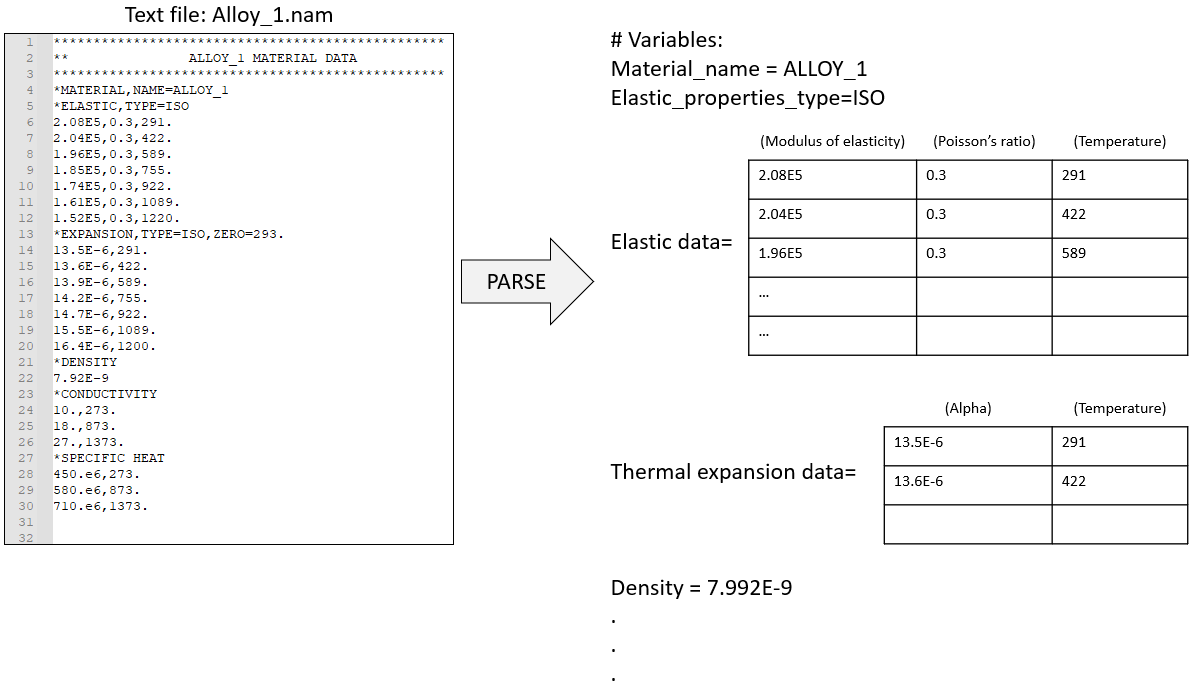

I have a keyword based materials data file. I want to parse data from this file and create variables and matrices to work on them in a Python script. The material file may have comment lines in the very top starting with the string "**", I simply want to ignore these and parse the data on other lines that follows a keyword of the form *keyword_1, and also their comma-delimited parameters of the form param_1=param1.

What is the fastest and easiest way to parse data from this kind of keyword based text file with Python? Can I use pandas for this and how?

below is a sample input material file: alloy_1.nam

*************************************************

** ALLOY_1 MATERIAL DATA

*************************************************

*MATERIAL,NAME=ALLOY_1

*ELASTIC,TYPE=ISO

2.08E5,0.3,291.

2.04E5,0.3,422.

1.96E5,0.3,589.

1.85E5,0.3,755.

1.74E5,0.3,922.

1.61E5,0.3,1089.

1.52E5,0.3,1220.

*EXPANSION,TYPE=ISO,ZERO=293.

13.5E-6,291.

13.6E-6,422.

13.9E-6,589.

14.2E-6,755.

14.7E-6,922.

15.5E-6,1089.

16.4E-6,1200.

*DENSITY

7.92E-9

*CONDUCTIVITY

10.,273.

18.,873.

27.,1373.

*SPECIFIC HEAT

450.e6,273.

580.e6,873.

710.e6,1373.

CodePudding user response:

The way is to create a list of dictionaries where each element is a key = Category name and the data in the form of dataframe. We have to use a temporary dictionary to store the comma separated data which gets appended into the list of dictionary each time a new category is found.

Use the pandas.Dataframe() to create the dataframe

Below is the code:

with open('/Users/rpghosh/scikit_learn_data/test.txt') as f:

lines = f.readlines()

# empty list of dataframes

lst_dfs = []

# empty dictionary to store each dataframe temporarily

d = {}

dfName = ''

PrevdfName = ''

createDF = False

for line in lines:

if re.match('^\*{1}([A-Za-z0-9,=]{1,})\n$', line):

variable = line.lstrip('*').rstrip().split(',')

PrevdfName = dfName

dfName = variable[0]

createDF = False

if (not createDF) and len(d) > 0:

df = pd.DataFrame(d)

# append a dictionary which has category and dataframe

lst_dfs.append( { PrevdfName : df} )

d = {}

elif re.match('^[0-9]([0-9,]){1,}\n$',line):

#dfName = PrevdfName

data = line.rstrip().split(',')

for i in range(len(data)):

# customised column name

colName = 'col' str(i 1)

# if the colname is already present in the

# dictionary keys then append the element

# to existing key's list

if colName in d.keys():

d[colName].append( data[i])

else:

d[colName] = [data[i]]

else:

createDF = False

d={}

df = pd.DataFrame(d)

lst_dfs.append({ dfName : df})

To view the output , you will have a list of dataframes , so the code will be -

for idx, df in enumerate(lst_dfs):

print(f"{idx=}")

print(df)

print()

Output :

idx=0

{'elastic': col1 col2 col3

0 21 22 23

1 11 12 13

2 31 32 33}

idx=1

{'expansion': col1 col2 col3

0 4 5 6

1 41 15 16

2 42 25 26}

idx=2

{'density': col1

0 12343}

idx=3

{'conductivity': col1 col2 col3 col4 col5 col6

0 54 55 56 51 55 56

1 42 55 56 51 55 56

2 54 55 56 51 55 56

3 42 55 56 51 55 56}