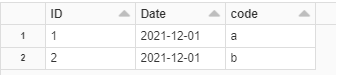

I have a dataframe.

from pyspark.sql.types import StructType, StructField, StringType

input_schema = StructType(
    [
        StructField("ID", StringType(), True),
        StructField("Date", StringType(), True),
        StructField("code", StringType(), True),
    ]
)
input_data = [
    ("1", "2021-12-01", "a"),
    ("2", "2021-12-01", "b"),
]
input_df = spark.createDataFrame(data=input_data, schema=input_schema)

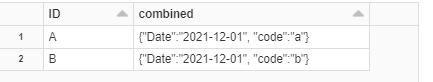

I would like to perform a transformation that combines a set of columns into a single JSON string column. The columns to be combined are known ahead of time. The output should look something like the below.

Is there a suggested method to achieve this? I would appreciate any help.

CodePudding user response:

You can pack the columns into a struct and then convert that struct to a JSON string with to_json:

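from pyspark.sql import functions as F

col_to_combine = ['Date', 'code']

# Pack the selected columns into a struct, serialize it to JSON,
# then drop the original columns.
output = (
    input_df
    .withColumn('combined', F.to_json(F.struct(*col_to_combine)))
    .drop(*col_to_combine)
)
output.show(truncate=False)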

+---+--------------------------------+
|ID |combined                        |
+---+--------------------------------+
|1  |{"Date":"2021-12-01","code":"a"}|
|2  |{"Date":"2021-12-01","code":"b"}|
+---+--------------------------------+
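
To make the row-level effect of the struct-then-to_json step easier to see, here is a minimal plain-Python sketch that needs no Spark session. It is only an illustration, not how Spark does it internally: the helper name combine_columns is hypothetical, and the choice to skip None values rests on my understanding that to_json leaves out null fields by default in recent Spark versions, so treat that part as an assumption.

```python
import json

# Rows mirroring input_df from the question, as plain dicts (no Spark needed).
rows = [
    {"ID": "1", "Date": "2021-12-01", "code": "a"},
    {"ID": "2", "Date": "2021-12-01", "code": "b"},
]
col_to_combine = ["Date", "code"]


def combine_columns(row, cols, new_col="combined"):
    """Hypothetical helper: pack `cols` of one row into a compact JSON string."""
    # Keep the listed columns in the given order. Skipping None values is an
    # assumption meant to mirror to_json dropping null fields by default.
    packed = {c: row[c] for c in cols if row[c] is not None}
    # Carry over every column that is not being combined, like .drop(*cols).
    kept = {k: v for k, v in row.items() if k not in cols}
    # Compact separators give the same no-whitespace layout as the output above.
    kept[new_col] = json.dumps(packed, separators=(",", ":"))
    return kept


output_rows = [combine_columns(r, col_to_combine) for r in rows]
for r in output_rows:
    print(r)
```

Each resulting dict keeps ID and gains a combined key holding the JSON string, which matches the shape of the DataFrame shown by output.show(truncate=False).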