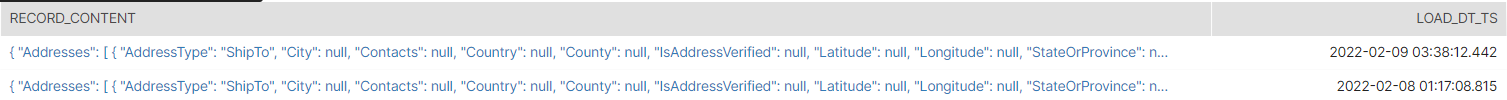

Attached picture is my source data in a Snowflake table. I need to copy this into S3 as 2 individual files.

EXECUTE IMMEDIATE

$$

DECLARE

load_dt_tss timestamp;

file_name varchar2(30);

c1 CURSOR FOR SELECT DISTINCT RECORD_CONTENT, LOAD_DT_TS FROM mytable where LOAD_DT_TS >= '2022-02-09 00:00:00';

BEGIN

for record in c1 do

load_dt_tss:=RECORD.LOAD_DT_TS;

file_name:=load_dt_tss||'.csv';

EXECUTE IMMEDIATE 'copy into @STG_SF_S3/'||:file_name|| 'from (select record_content from mytable where LOAD_DT_TS >= ''2022-02-09 00:00:00'') FILE_FORMAT = (TYPE=JSON,COMPRESSION = NONE) single = FALSE overwrite=FALSE';

end for;

RETURN 0;

END;

$$ ;

Above given is the Query I'm using. When running this query, I get Error as: "Uncaught exception of type 'STATEMENT_ERROR' on line 10 at position 3 : SQL compilation error: syntax error line 1 at position 40 unexpected '21' ".

Can someone please help.

CodePudding user response:

CREATE database AAP_LDS_DEV_DB;

create schema AAP_LDS_DEV_DB.PURCHASE_ORDER;

create table AAP_LDS_DEV_DB.PURCHASE_ORDER.T_PO_STG(RECORD_CONTENT variant, LOAD_DT_TS timestamp_ntz );

insert into AAP_LDS_DEV_DB.PURCHASE_ORDER.T_PO_STG (SELECT parse_json(column1), to_timestamp(column2) from values

('{"blar":"blar1"}','2022-02-10'),

('{"blar":"blar2"}','2022-02-11')

);

EXECUTE IMMEDIATE

$$

DECLARE

sql text;

file_name text;

c1 CURSOR FOR SELECT DISTINCT RECORD_CONTENT, LOAD_DT_TS FROM AAP_LDS_DEV_DB.PURCHASE_ORDER.T_PO_STG where LOAD_DT_TS >= '2022-02-09 00:00:00';

BEGIN

for record in c1 do

file_name := to_char(RECORD.LOAD_DT_TS, 'yyyymmdd_hhmmss') || '.csv';

sql := 'copy into @STG_SF_S3_DEV_JJC/' || :file_name || ' from (select record_content from AAP_LDS_DEV_DB.PURCHASE_ORDER.T_PO_STG where LOAD_DT_TS = ''' || RECORD.LOAD_DT_TS || ''') FILE_FORMAT = (TYPE=JSON,COMPRESSION = NONE) single = FALSE overwrite=FALSE';

EXECUTE IMMEDIATE sql;

end for;

RETURN 0;

END;

$$ ;

CodePudding user response:

EXECUTE IMMEDIATE

$$

DECLARE

file_name varchar2(30);

records variant;

c1 CURSOR FOR SELECT DISTINCT RECORD_CONTENT, LOAD_DT_TS FROM mytable where LOAD_DT_TS >= '2022-02-09 00:00:00';

BEGIN

for record in c1 do

file_name := 'PO'||'_'||to_date(RECORD.LOAD_DT_TS)||'_'||TO_TIME(RECORD.LOAD_DT_TS)||'.json';

records := RECORD.record_content;

create or replace temporary table temp_test_pvt(records variant);

insert into temp_test_pvt select parse_json(:records) ;

EXECUTE IMMEDIATE 'copy into @STG_SF_S3/' || :file_name ||

' from (select distinct records from temp_test_pvt)

FILE_FORMAT = (TYPE=JSON, COMPRESSION = NONE) single = true overwrite=FALSE detailed_output = TRUE ';

end for;

RETURN 0;

END;

$$ ;

This is my final query. With this I am able to COPY one record as one file from Snowflake table into S3.