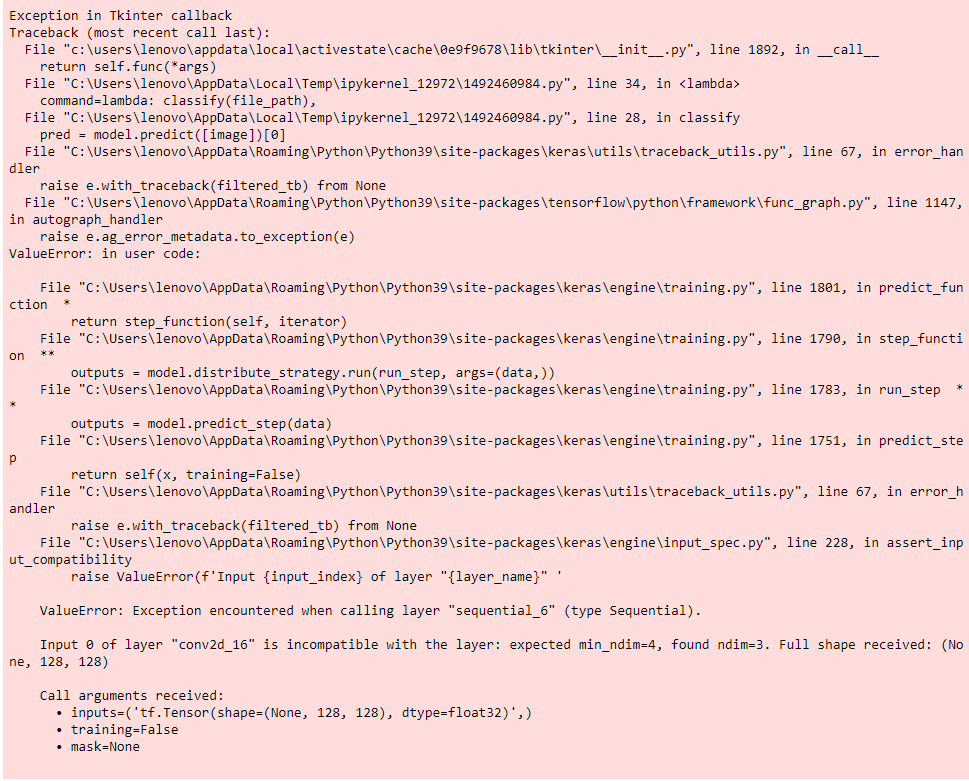

I'm trying to train a model to read X-ray images in a Jupyter Notebook. I imported the libraries, defined the image properties, prepared the dataset, created the neural network model, defined the callbacks, managed the data, and trained the model. I'm using Tkinter as a GUI to run the prediction method. Here's what I get when I press the button to run it:

WARNING:tensorflow:Model was constructed with shape (None, 128, 128, 3) for input KerasTensor(type_spec=TensorSpec(shape=(None, 128, 128, 3), dtype=tf.float32, name='conv2d_16_input'), name='conv2d_16_input', description="created by layer 'conv2d_16_input'"), but it was called on an input with incompatible shape (None, 128, 128).

And here's my neural network model :

model=Sequential()
model.add(Conv2D(32,(3,3),activation='relu',input_shape=(Image_Width,Image_Height,Image_Channels)))
model.add(BatchNormalization())
model.add(MaxPooling2D(pool_size=(2,2)))
model.add(Dropout(0.25))
model.add(Conv2D(64,(3,3),activation='relu'))
model.add(BatchNormalization())
model.add(MaxPooling2D(pool_size=(2,2)))
model.add(Dropout(0.25))
model.add(Conv2D(128,(3,3),activation='relu'))
model.add(BatchNormalization())
model.add(MaxPooling2D(pool_size=(2,2)))
model.add(Dropout(0.25))
model.add(Flatten())
model.add(Dense(512,activation='relu'))
model.add(BatchNormalization())
model.add(Dropout(0.5))
model.add(Dense(1,activation='sigmoid'))
model.compile(loss='binary_crossentropy',
              optimizer='adam',metrics=['accuracy'])

I've put three dimensions into input_shape: Image_Width=128, Image_Height=128, Image_Channels=3.

The model was constructed with input shape (None, 128, 128, 3), so I don't see why I get "expected min_ndim=4, found ndim=3". Correct me if I'm wrong, I'm new to this. Thanks for your time.

Edit: my Tkinter GUI code to run the method:

import tkinter as tk
from tkinter import filedialog
from tkinter import *
from PIL import ImageTk, Image
import numpy
from keras.models import load_model

model = load_model('C:/Users/lenovo/PneumoniaClassification/pneumoniatest9999_10epoch.h5')

# dictionary mapping each class index to its label
classes = {
    0:'Normal',
    1:'Pneumonia',
}

# initialise GUI
top=tk.Tk()
top.geometry('800x600')
top.title('Pneumonia Classification')
top.configure(background='#CDCDCD')
label=Label(top,background='#CDCDCD', font=('arial',15,'bold'))
sign_image = Label(top)

def classify(file_path):
    global label_packed
    image = Image.open(file_path)
    image = image.resize((128,128))
    image = numpy.expand_dims(image, axis=0)
    image = numpy.array(image)
    image = image/255
    pred = model.predict([image])[0]
    sign = classes[pred]
    print(sign)
    label.configure(foreground='#011638', text=sign)

def show_classify_button(file_path):
    classify_b=Button(top,text="Classify Image",
                      command=lambda: classify(file_path),
                      padx=10,pady=5)
    classify_b.configure(background='#364156', foreground='white',
                         font=('arial',10,'bold'))
    classify_b.place(relx=0.79,rely=0.46)

def upload_image():
    try:
        file_path=filedialog.askopenfilename()
        uploaded=Image.open(file_path)
        uploaded.thumbnail(((top.winfo_width()/2.25),
                            (top.winfo_height()/2.25)))
        im=ImageTk.PhotoImage(uploaded)
        sign_image.configure(image=im)
        sign_image.image=im
        label.configure(text='')
        show_classify_button(file_path)
    except:
        pass

upload=Button(top,text="Upload an image",command=upload_image,padx=10,pady=5)
upload.configure(background='#364156', foreground='white',font=('arial',10,'bold'))
upload.pack(side=BOTTOM,pady=50)
sign_image.pack(side=BOTTOM,expand=True)
label.pack(side=BOTTOM,expand=True)
heading = Label(top, text="Pneumonia Classification",pady=20, font=('arial',20,'bold'))
heading.configure(background='#CDCDCD',foreground='#364156')
heading.pack()
top.mainloop()

CodePudding user response:

Your model needs the input shape (samples, width, height, channels). So when you call model.predict, you should feed an image to your model with the shape (1, 128, 128, 3). This corresponds to the predefined input shape of your model: input_shape=(Image_Width,Image_Height,Image_Channels) (excluding the batch / samples dimension). I assume you want to make a prediction for a single image, hence the number 1. The warning reports an input of shape (None, 128, 128), so the channels dimension is missing, which suggests the X-ray was loaded as a single-channel (grayscale) image.
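To see where the missing dimension comes from, here is a minimal sketch using plain NumPy arrays as stand-ins for a loaded image (the zero-filled arrays are placeholders, not real X-ray data). Adding the batch axis to a 2-D grayscale array gives only three dimensions, while the same step on a 3-channel array gives the four dimensions the model was built for:

```python
import numpy as np

# Placeholder arrays standing in for a resized image (assumption: 128x128).
gray = np.zeros((128, 128), dtype=np.float32)      # single-channel image
rgb = np.zeros((128, 128, 3), dtype=np.float32)    # three-channel image

# Same step as in classify(): add the batch dimension in front.
gray_batch = np.expand_dims(gray, axis=0)
rgb_batch = np.expand_dims(rgb, axis=0)

print(gray_batch.shape)  # (1, 128, 128)    -> ndim=3, rejected by Conv2D
print(rgb_batch.shape)   # (1, 128, 128, 3) -> ndim=4, matches the model
```

Printing `image.shape` right before `model.predict` in your own code is a quick way to check which of the two cases you are in.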

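If you are feeding grayscale images to your model, you will have to convert them to RGB, for example with tf.image.grayscale_to_rgb. Note that this function expects the last dimension to have size 1, so a batch of shape (1, 128, 128) needs a trailing channel axis first:

model.predict(tf.image.grayscale_to_rgb(image[..., tf.newaxis]))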
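An alternative that stays within the libraries your GUI already imports is to let Pillow do the conversion before the array is built. The sketch below assumes a hypothetical helper named `to_model_input` (not part of your code or of any library) and uses an in-memory grayscale image in place of a file opened with `Image.open`:

```python
import numpy as np
from PIL import Image

def to_model_input(image, size=(128, 128)):
    """Hypothetical helper: turn a PIL image into a (1, H, W, 3) float batch."""
    image = image.convert("RGB")       # grayscale ("L") becomes 3 channels
    image = image.resize(size)
    array = np.asarray(image, dtype=np.float32) / 255.0  # scale to [0, 1]
    return np.expand_dims(array, axis=0)                 # add batch dimension

# Stand-in for Image.open(file_path): a blank single-channel image.
gray_xray = Image.new("L", (200, 150), color=128)
batch = to_model_input(gray_xray)
print(batch.shape)  # (1, 128, 128, 3)
```

In `classify`, the returned batch could then be passed to `model.predict` directly. This only makes sense if the model was also trained on images converted to three channels the same way.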

Full working example for reference:

import tensorflow as tf

model=tf.keras.Sequential()
model.add(tf.keras.layers.Conv2D(32,(3,3),activation='relu',input_shape=(128, 128, 3)))
model.add(tf.keras.layers.BatchNormalization())
model.add(tf.keras.layers.MaxPooling2D(pool_size=(2,2)))
model.add(tf.keras.layers.Dropout(0.25))
model.add(tf.keras.layers.Conv2D(64,(3,3),activation='relu'))
model.add(tf.keras.layers.BatchNormalization())
model.add(tf.keras.layers.MaxPooling2D(pool_size=(2,2)))
model.add(tf.keras.layers.Dropout(0.25))
model.add(tf.keras.layers.Conv2D(128,(3,3),activation='relu'))
model.add(tf.keras.layers.BatchNormalization())
model.add(tf.keras.layers.MaxPooling2D(pool_size=(2,2)))
model.add(tf.keras.layers.Dropout(0.25))
model.add(tf.keras.layers.Flatten())
model.add(tf.keras.layers.Dense(512,activation='relu'))
model.add(tf.keras.layers.BatchNormalization())
model.add(tf.keras.layers.Dropout(0.5))
model.add(tf.keras.layers.Dense(1,activation='sigmoid'))
model.compile(loss='binary_crossentropy',
              optimizer='adam',metrics=['accuracy'])

image = tf.random.normal((1, 128, 128, 3))
print(model.predict(image))
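The prediction printed here is a (1, 1) array holding a single sigmoid probability, not an integer class index, so it cannot be used directly as a key into the `classes` dictionary from the question. A minimal sketch of one way to map it to a label follows. The helper name `label_from_prediction`, the 0.5 threshold, and the assumption that label 1 meant "Pneumonia" during training are all illustrative choices, and the prediction array is a made-up stand-in rather than real model output:

```python
import numpy as np

classes = {0: "Normal", 1: "Pneumonia"}  # same mapping as in the question

def label_from_prediction(pred, threshold=0.5):
    """Hypothetical helper: map a sigmoid output of shape (1, 1) to a label."""
    probability = float(np.asarray(pred).reshape(-1)[0])
    # Assumption: class 1 is the positive class and 0.5 is the cut-off.
    return classes[int(probability >= threshold)]

# Made-up stand-in for model.predict(image); not real model output.
fake_pred = np.array([[0.83]], dtype=np.float32)
print(label_from_prediction(fake_pred))  # Pneumonia
```

Whether index 1 really corresponds to pneumonia depends on how the labels were encoded when the dataset was prepared, so that is worth checking before relying on the mapping.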