I have an Azure Data Factory pipeline which launches 4 Databricks activities in parallel.

The 4 activities do almost the same thing: Write different data in 4 different SQL Server tables in the same database

val df= spark.sql("SELECT * FROM TAB1")

df

.write

.format("com.microsoft.sqlserver.jdbc.spark")

.mode("overwrite")

.option("truncate", value = true)

.option("reliabilityLevel", "BEST_EFFORT")

.option("tableLock", "false")

.option("url", url)

.option("dbtable", "dbo.TAB1")

.option("user", u)

.option("password", p)

.option("schemaCheckEnabled", "false")

.option("batchsize", "1048576")

.save()

We noticed that although the job executes successfully, sometimes it fails with an:

SQLServerException: The connection is closed error.

The data we try to write in SQL takes between 10 and 20 minutes to finish entirely.

I am thinking maybe the fact that we execute the 4 jobs in parallel is the source of the problem, but I am not sure.

Any help is appreciated.

CodePudding user response:

As you have mention that you are running 4 Databricks activities in parallel, in those activities you are writing data to 4 different tables in the same database and the error occurs only sometimes - so there are high chances that you are facing the capacity issue on SQL Server side.

If you are using Azure SQL Database, you can upgrade the tier and this should work fine.

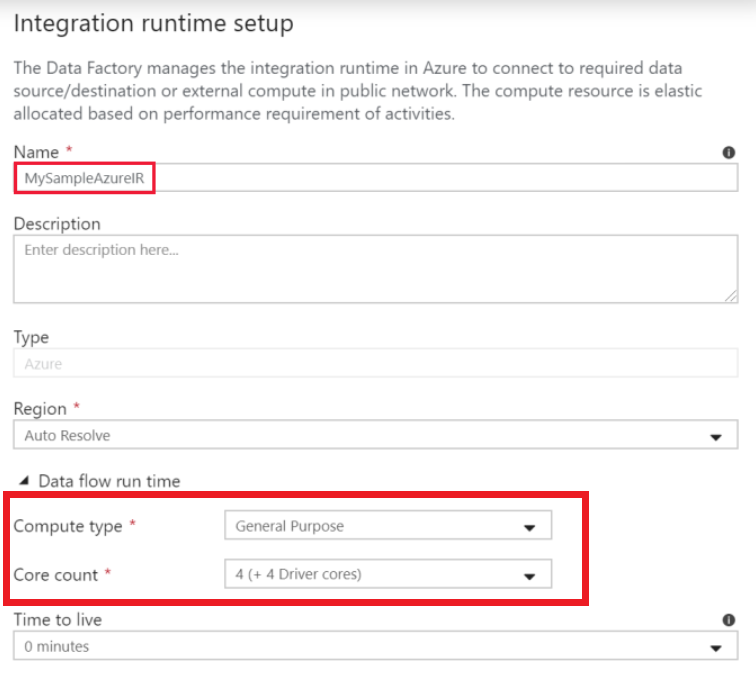

Also, make sure the Azure Integration Runtime has sufficient Cores and Memory to run your 4 pipelines in parallel. If data is huge it might be possible that IR is incapable to process it. Create a new IR and use it to run your pipelines. Refer below image.

Alternatively, you can run your pipeline in serial instead of parallel, this will leave the database and IR with enough memory to deal with the data.