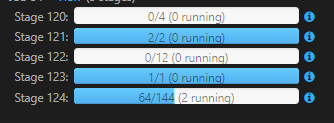

I'm using a Databricks configuration with 1 worker, which gives a cluster of two nodes with 12 cores each. In this stage, only about 2 tasks run at a time. When I raised the number of workers in Databricks to 4, the number of running (active) tasks rose to 8. I searched the internet and found no answer. I'm also new to PySpark.

Is there any way to increase this number without scaling up the number of workers?

Answer:

With your existing configuration, the number of tasks that run at once depends on how your data is partitioned, because Spark runs one task per partition. If you have only a small, fixed number of partitions, repartition the input, for example:

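    import org.apache.spark.streaming.dstream.DStream

    // One task runs per partition, so more partitions allow more concurrent tasks
    def repartitionInput[T](input: DStream[T], numPartitions: Int): DStream[T] =
      input.repartition(numPartitions)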

Answer:

It looks like an issue or misconfiguration in Spark (or Databricks). The problem was solved by running the same code on a cluster without a GPU: the number of running tasks went from 2 to 20.
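One possible explanation for the GPU result, offered only as an assumption and not as a confirmed diagnosis, is GPU-aware scheduling: when Spark 3 schedules GPUs as a task resource through `spark.task.resource.gpu.amount`, an executor can run only as many tasks at once as its scarcest resource (CPU cores or GPUs) allows. The hypothetical helper `slotsPerExecutor` below approximates that rule in plain Scala with no Spark dependency; the GPU counts in the example are illustrative and are not taken from the question.

```scala
// Hypothetical helper (not a Spark API): approximates how many tasks one
// executor can run at once when both CPU cores and GPUs are scheduled.
object TaskSlots {
  def slotsPerExecutor(
      executorCores: Int,   // cores available to the executor
      taskCpus: Int,        // value of spark.task.cpus
      executorGpus: Int,    // GPUs visible to the executor
      taskGpuAmount: Double // value of spark.task.resource.gpu.amount; 0.0 = GPUs not scheduled
  ): Int = {
    val cpuSlots = executorCores / taskCpus
    if (taskGpuAmount <= 0.0) cpuSlots
    else {
      // A fractional amount lets several tasks share one GPU (0.25 -> 4 tasks per GPU);
      // an amount of 1.0 or more gives each task at least one whole GPU.
      val gpuSlots =
        if (taskGpuAmount < 1.0) executorGpus * math.floor(1.0 / taskGpuAmount).toInt
        else (executorGpus / taskGpuAmount).toInt
      math.min(cpuSlots, gpuSlots)
    }
  }

  def main(args: Array[String]): Unit = {
    // 12 cores and 2 GPUs with one GPU per task: the GPUs cap concurrency at 2
    println(slotsPerExecutor(12, 1, 2, 1.0))
    // Same node with GPUs not scheduled: all 12 cores can run tasks
    println(slotsPerExecutor(12, 1, 2, 0.0))
    // Four tasks per GPU: 8 GPU slots, still under the 12 CPU slots
    println(slotsPerExecutor(12, 1, 2, 0.25))
  }
}
```

If this is what is happening, lowering `spark.task.resource.gpu.amount` to a fraction lets several tasks share a GPU; check the Spark config of your Databricks cluster to see which value it actually uses before changing anything.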