I am working on comparing two images for differences.

The problem is that it works fine when I download an image from the web, but it does not work when I compare images captured with a phone camera. Any idea where I am going wrong?

I'm working in Google Colab. I tried `structural_similarity`, and also dilation followed by `findContours`; neither works with camera images.

I also tried template matching to align the images first and then capture the differences, but I still got the same result.

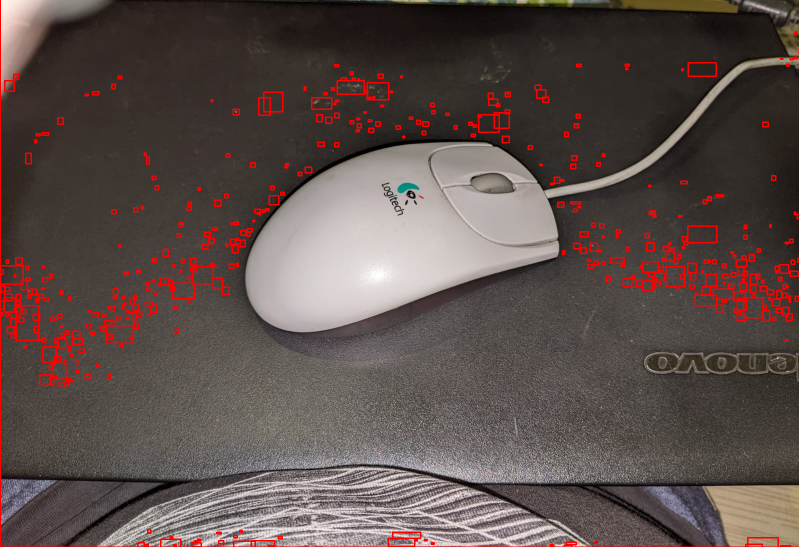

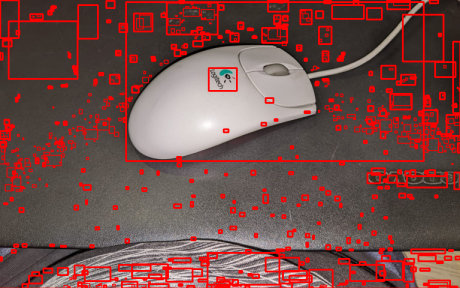

As you can see in the pictures, it shows all the bits and pieces of differences but does not mark the bigger object, the mouse, as a difference.

Here is my code:

```python
import cv2
import imutils
from skimage.metrics import structural_similarity
from google.colab.patches import cv2_imshow  # Colab replacement for cv2.imshow

ref = cv2.imread('/content/drive/My Drive/Image Comparison/1.png')
target = cv2.imread('/content/drive/My Drive/Image Comparison/2.png')

gray_ref = cv2.cvtColor(ref, cv2.COLOR_BGR2GRAY)
gray_compare = cv2.cvtColor(target, cv2.COLOR_BGR2GRAY)

# Mean SSIM score plus the per-pixel SSIM map (full=True)
(score, diff) = structural_similarity(gray_ref, gray_compare, full=True)
diff = (diff * 255).astype("uint8")

thresh = cv2.threshold(diff, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1]

contours = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
contours = imutils.grab_contours(contours)

no_of_differences = 0
for c in contours:
    (x, y, w, h) = cv2.boundingRect(c)
    rect_area = w * h
    if rect_area > 10:
        no_of_differences += 1
        cv2.rectangle(ref, (x, y), (x + w, y + h), (0, 0, 255), 2)
        cv2.rectangle(target, (x, y), (x + w, y + h), (0, 0, 255), 2)

print("# of differences = ", no_of_differences)

scale_percent = 60  # percent of original size
width = int(ref.shape[1] * scale_percent / 100)
height = int(target.shape[0] * scale_percent / 100)
dim = (width, height)

# resize image
resized_ref = cv2.resize(ref, dim, interpolation=cv2.INTER_AREA)
resized_target = cv2.resize(target, dim, interpolation=cv2.INTER_AREA)

cv2_imshow(resized_ref)
cv2_imshow(resized_target)
cv2.waitKey(0)
```

Answer:

There are two main issues with your solution:

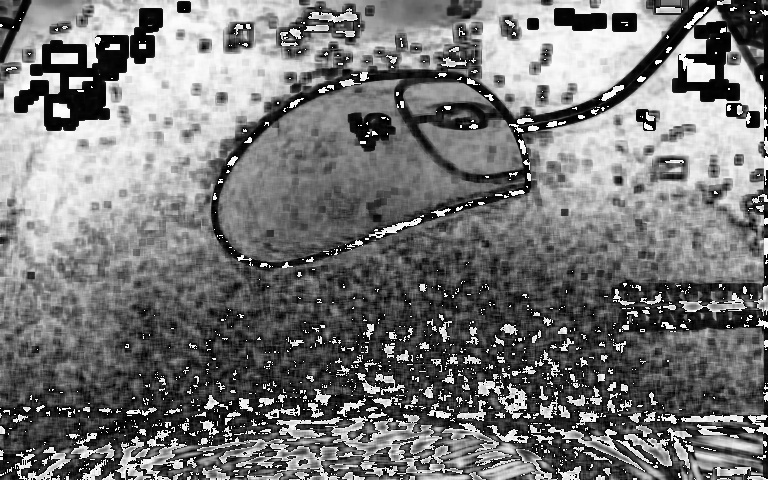

structural_similarityreturns positive and negative values (in range [-1, 1]).

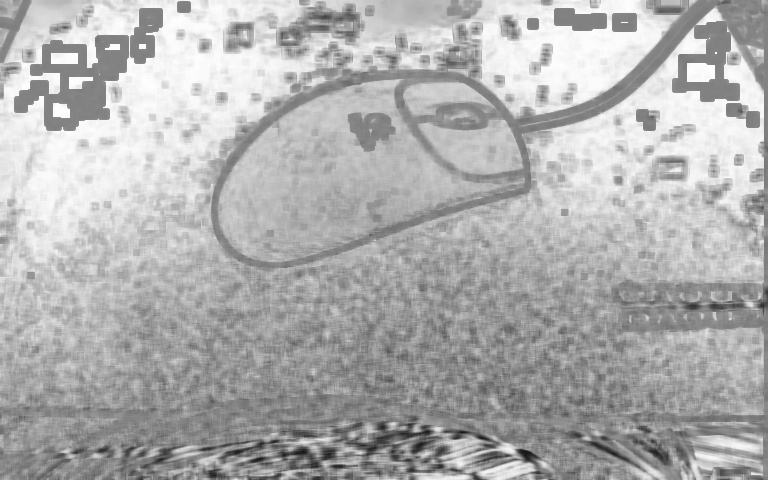

The conversion:diff = (diff * 255).astype("uint8")applies range [0, 1], but for range [-1, 1], we may use the following conversion:diff = ((diff 1) * 127.5).astype("uint8") # Convert from range [-1, 1] to [0, 255].Using

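2. Using `cv2.threshold` with the automatic threshold `cv2.THRESH_OTSU` is not good enough for thresholding the image (at least not when it is applied after `structural_similarity`).

   We may replace it with an adaptive threshold: `thresh = cv2.adaptiveThreshold(diff, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 175, 30)`.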

Result of the original conversion `diff = (diff * 255).astype("uint8")`: as you can see, there are data overflows (the negative values do not fit into `uint8`).

Result of `diff = ((diff + 1) * 127.5).astype("uint8")`:

Result of the original `thresh = cv2.threshold(diff, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1]`: as you can see, about half of `thresh` is white.

Result of `thresh = cv2.adaptiveThreshold(diff, 255, cv2.ADAPTIVE_THRESH_GAUSSIAN_C, cv2.THRESH_BINARY_INV, 175, 30)`:
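To see the overflow numerically, here is a small NumPy-only sketch using a made-up SSIM map (the values are chosen for illustration, not taken from the posted images). It counts how many values fall outside the `uint8` range with the original scaling and shows what the [-1, 1] to [0, 255] mapping produces. The `np.clip` call is an extra safety net added here as an assumption; it is not part of the answer.

```python
import numpy as np

# Hypothetical SSIM map: structural_similarity(..., full=True) may return
# any value in [-1, 1], including negative ones.
ssim_map = np.array([[-1.0, -0.5, 0.0],
                     [0.25, 0.5, 1.0]])

# Original scaling assumes [0, 1]: negative inputs land below 0,
# which uint8 cannot represent.
naive = ssim_map * 255
out_of_range = int(np.count_nonzero((naive < 0) | (naive > 255)))

# Answer's mapping: [-1, 1] -> [0, 255], so every value fits in uint8.
# astype truncates the fractional part (e.g. 127.5 -> 127).
fixed = ((np.clip(ssim_map, -1, 1) + 1) * 127.5).astype(np.uint8)

print("values outside [0, 255] with the original scaling:", out_of_range)
print(fixed)
```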

Remark:

The images you have posted include the markings, and are not the same size.

Next time, try to post clean images instead of screenshots.