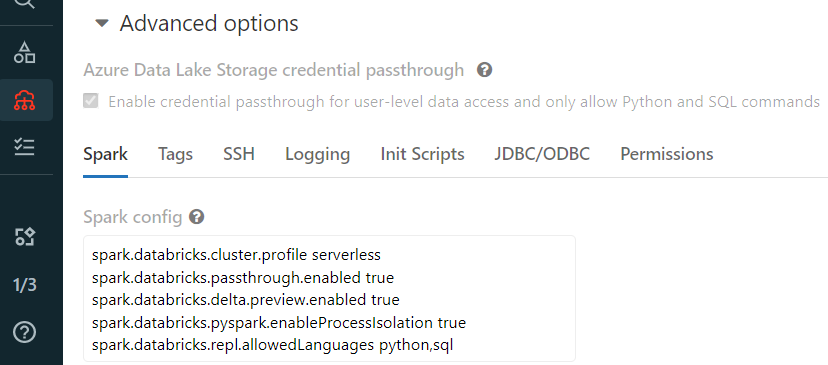

When creating an Azure Databricks workspace and configuring its cluster, I chose python,sql as the default languages for Spark. Now I want to add Scala as well. When running a Scala script I got the following error. So, my online search took me to

CodePudding user response:

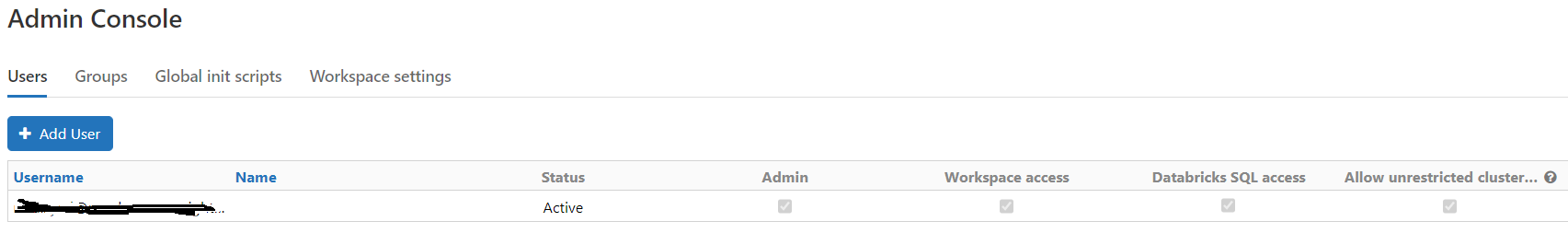

You need to click the "Edit" button in the cluster controls. After that you should be able to change the Spark configuration. However, you can't enable Scala on High Concurrency clusters with credentials passthrough, because they support only Python and SQL (doc). The primary reason is that Scala code can bypass user isolation.

If you need credentials passthrough together with Scala, you need to use a Standard cluster, but it will work only with a single specific user (doc).
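To see which case applies to you, you can copy the text from the cluster's Spark config box and check it. The snippet below is only a rough sketch: the key names `spark.databricks.repl.allowedLanguages` and `spark.databricks.passthrough.enabled` are my assumption about what your config contains (they are not shown in the question), and the helper functions are hypothetical, not part of any Databricks API.

```python
from typing import Dict


def parse_spark_conf(text: str) -> Dict[str, str]:
    """Parse 'key value' lines as pasted from the cluster's Spark config box."""
    conf: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first space: everything before is the key, the rest is the value.
        key, _, value = line.partition(" ")
        conf[key] = value.strip()
    return conf


def scala_allowed(conf: Dict[str, str]) -> bool:
    """Return True if 'scala' is listed in the allowed-languages setting."""
    # Assumed key name; adjust to whatever your cluster config actually shows.
    langs = conf.get("spark.databricks.repl.allowedLanguages")
    if langs is None:
        # Assumption: no explicit restriction means no language is blocked.
        return True
    return "scala" in {lang.strip().lower() for lang in langs.split(",")}


# Example config text (illustrative values, not taken from the question).
example = """
spark.databricks.repl.allowedLanguages python,sql
spark.databricks.passthrough.enabled true
"""

conf = parse_spark_conf(example)
passthrough = conf.get("spark.databricks.passthrough.enabled", "false") == "true"
print("Scala allowed:", scala_allowed(conf))
print("Credentials passthrough:", passthrough)
```

If Scala is not in the list and passthrough is enabled on a High Concurrency cluster, editing the language list will not help, for the reason given above; switching to a Standard cluster is the option left.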