I'm trying to use OpenCV to do face recognition with FaceNet512. I converted the model to ONNX format using tf2onnx. I know that the input of the model should be an image with shape (160, 160, 3), so I tried to build that with this function:

    void convertDimention(cv::Mat input, cv::Mat &output)
    {
        vector<cv::Mat> channels(3);
        cv::split(input, channels);
        int size[3] = { 160, 160, 3 };
        cv::Mat M(3, size, CV_32F, cv::Scalar(0));
        for (int i = 0; i < size[0]; i++) {
            for (int j = 0; j < size[1]; j++) {
                for (int k = 0; k < size[2]; k++) {
                    M.at<float>(i,j,k) = channels[k].at<float>(i,j)/255;
                }
            }
        }
        M.copyTo(output);
    }

After converting the image from (160, 160) to (160, 160, 3), I still get this error:

error: (-215:Assertion failed) (int)_numAxes == inputs[0].size() in function 'getMemoryShapes'

Full code:

    #include <iostream>
    #include <opencv2/dnn.hpp>
    #include "opencv2/core/core.hpp"
    #include "opencv2/highgui/highgui.hpp"
    #include "opencv2/imgproc/imgproc.hpp"
    #include <vector>

    using namespace std;

    void convertDimention(cv::Mat input, cv::Mat &output)
    {
        vector<cv::Mat> channels(3);
        cv::split(input, channels);
        int size[3] = { 160, 160, 3 };
        cv::Mat M(3, size, CV_32F, cv::Scalar(0));
        for (int i = 0; i < size[0]; i++) {
            for (int j = 0; j < size[1]; j++) {
                for (int k = 0; k < size[2]; k++) {
                    M.at<float>(i,j,k) = channels[k].at<float>(i,j)/255;
                }
            }
        }
        M.copyTo(output);
    }

    int main()
    {
        cv::Mat input,input2, output;
        input = cv::imread("image.png");
        cv::resize(input,input, cv::Size(160,160));
        convertDimention(input,input2);
        cv::dnn::Net net = cv::dnn::readNetFromONNX("facenet512.onnx");
        net.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);
        net.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA);
        cout << input.size << endl;
        cout << input2.size << endl;
        net.setInput(input2);
        output = net.forward();
    }

I know that I'm doing this the wrong way (I'm new to this). Is there another way to change the dimensions so that the image fits the model input?

Thanks in advance.

CodePudding user response:

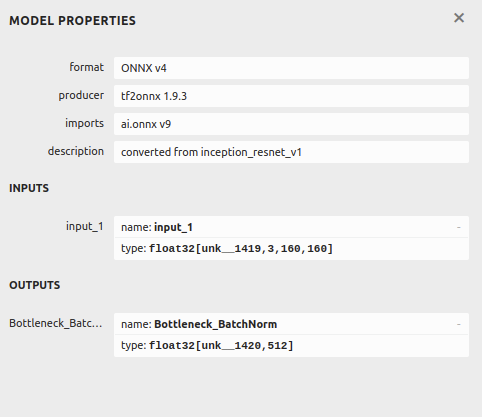

using netron i was able to visualize the input of the model :

the origin of the problem was from the conversion of the model i just had to change this :

model_proto, _ = tf2onnx.convert.from_keras(model, output_path='facenet512.onnx')

to this :

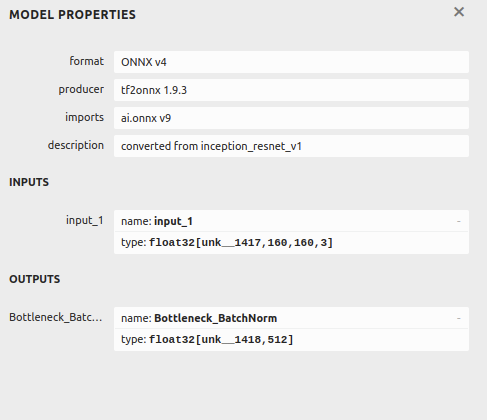

nchw_inputs_list = [model.inputs[0].name] model_proto, _ = tf2onnx.convert.from_keras(model, output_path='facenet512.onnx',inputs_as_nchw=nchw_inputs_list)

i checked again in netron and the result were :

I can now use the model from OpenCV with cv::dnn::blobFromImage().
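For anyone wondering what the NCHW layout means in practice: OpenCV's dnn module works with 4-D blobs in N x C x H x W order, while a decoded image is stored interleaved as H x W x C. Below is a minimal sketch of that reordering in plain C++ with no OpenCV dependency. `hwcToNchw` is a hypothetical helper name used only for illustration, and the scale factor is an assumption (the question divides by 255; the right preprocessing depends on how the model was trained).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Repack an interleaved H x W x C byte image into a planar 1 x C x H x W
// float buffer, multiplying every value by `scale`.
// NOTE: hwcToNchw is a hypothetical helper for illustration, not an OpenCV API.
std::vector<float> hwcToNchw(const std::vector<std::uint8_t>& hwc,
                             std::size_t height, std::size_t width,
                             std::size_t channels, float scale)
{
    assert(hwc.size() == height * width * channels);
    std::vector<float> nchw(hwc.size());
    for (std::size_t y = 0; y < height; ++y) {
        for (std::size_t x = 0; x < width; ++x) {
            for (std::size_t c = 0; c < channels; ++c) {
                // Source: channels of one pixel sit next to each other.
                const std::size_t src = (y * width + x) * channels + c;
                // Destination: each channel is a contiguous H x W plane.
                const std::size_t dst = (c * height + y) * width + x;
                nchw[dst] = static_cast<float>(hwc[src]) * scale;
            }
        }
    }
    return nchw;
}
```

In real code there is no need to write this by hand: `cv::dnn::blobFromImage(image, scalefactor, size, mean, swapRB)` returns a 4-D NCHW blob and can resize, scale, and swap BGR to RGB in the same call, and the result can be passed straight to `net.setInput()`.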