I am using a script in git bash that performs a few curl calls to HTTP endpoints that expect and produce protobuf.

The curl output is piped to a custom proto2json.exe file, and the result is finally saved to a JSON file:

#!/bin/bash

SCRIPT_DIR=$(dirname "$0")

JSON2PROTO="$SCRIPT_DIR/json2proto.exe"

PROTO2JSON="$SCRIPT_DIR/proto2json.exe"

echo '{"key1":"value1","version":3}' | "$JSON2PROTO" -v 3 > request.dat

curl --insecure --data-binary @request.dat --output - https://localhost/protobuf | "$PROTO2JSON" -v 3 > response.json

The script works well, and now I am trying to port it to PowerShell:

$SCRIPT_DIR = Split-Path -parent $PSCommandPath

$JSON2PROTO = "$SCRIPT_DIR/json2proto.exe"

$PROTO2JSON = "$SCRIPT_DIR/proto2json.exe"

@{

key1 = 'value1';

version = 3;

} | ConvertTo-Json | &$JSON2PROTO -v 3 > request.dat

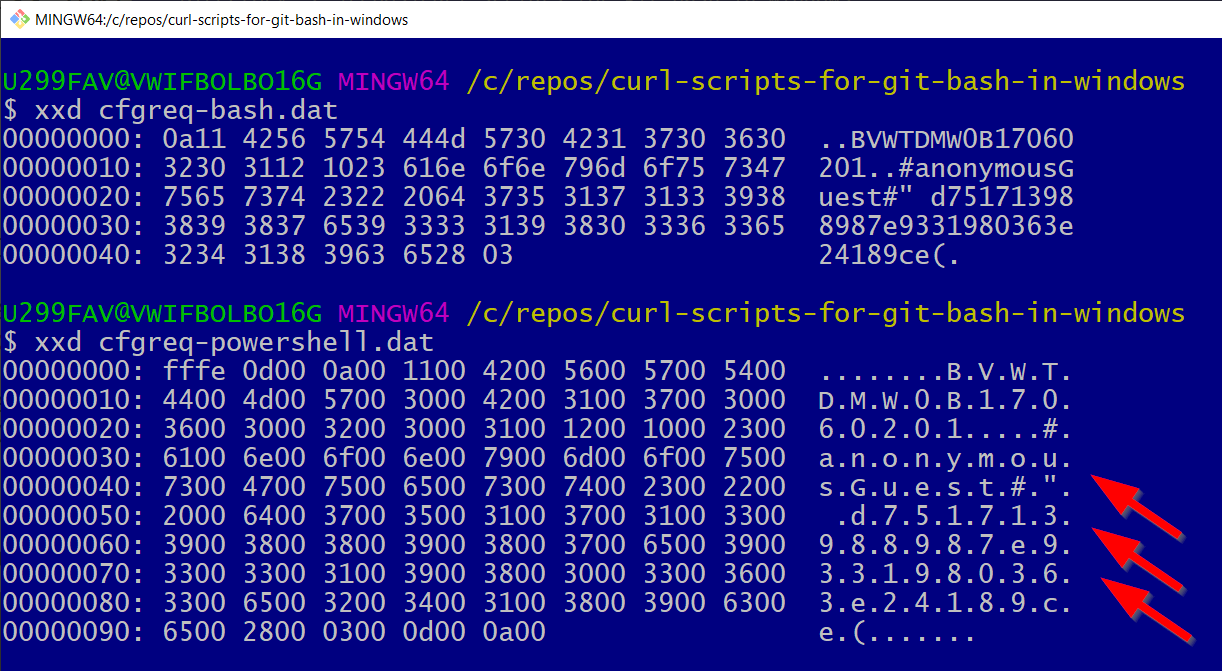

Unfortunately, when I compare the generated binary files in "git bash" and in Powershell, then I see that the latter file has additionaly zero bytes entered.

Here a screenshot of my real case comparison, what is happening here?

CodePudding user response:

It looks like you're ultimately after this:

$SCRIPT_DIR = Split-Path -parent $PSCommandPath

$JSON2PROTO = "$SCRIPT_DIR/json2proto.exe"

$PROTO2JSON = "$SCRIPT_DIR/proto2json.exe"

# Make sure that the output from your $JSON2PROTO executable is correctly decoded

# as UTF-8.

# You may want to restore the original encoding later.

[Console]::OutputEncoding = [System.Text.Utf8Encoding]::new()

# Capture the output lines from calling the $JSON2PROTO executable.

# Note: PowerShell captures a *single* output line as-is, and

# *multiple* ones *as an array*.

[array] $output =

@{

key1 = 'value1';

version = 3;

} | ConvertTo-Json | & $JSON2PROTO -v 3

# Filter out empty lines to extract the one line of interest.

[string] $singleOutputLineOfInterest = $output -ne ''

# Write a BOM-less UTF-8 file with the given text as-is,

# without appending a newline.

[System.IO.File]::WriteAllText(

"$PWD/request.dat",

$singleOutputLineOfInterest

)

As for what you tried:

In PowerShell, > is effectively an alias of the Out-File cmdlet, whose default output character encoding in Windows PowerShell is "Unicode" (UTF-16LE), which is what you saw, and, in PowerShell (Core) 7+, BOM-less UTF-8. UTF-16LE stores every character in the ASCII range as two bytes, the second of which is 0x0, which explains the extra zero bytes. To control the character encoding, call Out-File or, for text input, Set-Content with the -Encoding parameter.

Note that you may also have to ensure that an external program's output is first properly decoded, which happens based on the encoding stored in [Console]::OutputEncoding; see this answer for more information.

Note that you can't avoid these decoding and re-encoding steps in PowerShell as of v7.2.4, because the PowerShell pipeline currently cannot serve as a conduit for raw bytes, as discussed in this answer, which also links to the GitHub issue you mention.
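To make the zero bytes concrete, here is a minimal sketch that can be run in git bash (it is not PowerShell code). It assumes that iconv and od are available, and the sample text key1 is only a stand-in for whatever text PowerShell decoded from the external program's output. It prints the same text as UTF-8 bytes and as UTF-16LE bytes:

```shell
#!/bin/bash
# Illustration only: 'key1' stands in for text that passed through the pipeline.
text='key1'

# Bytes of the text as UTF-8 (one byte per character for ASCII-range text).
utf8_hex=$(printf '%s' "$text" | od -An -v -tx1 | tr -d ' \n')

# Bytes of the same text re-encoded as UTF-16LE. iconv writes no BOM here;
# a file created with > in Windows PowerShell would additionally start with FF FE.
utf16_hex=$(printf '%s' "$text" | iconv -f UTF-8 -t UTF-16LE | od -An -v -tx1 | tr -d ' \n')

echo "UTF-8:    $utf8_hex"
echo "UTF-16LE: $utf16_hex"
```

In the second line of output, every original byte is followed by 00, which is the pattern a hex comparison of the two files would show for ASCII-range content.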

Finally, note that both Out-File and Set-Content by default append a trailing, platform-native newline to the output file. While -NoNewLine suppresses that, it also suppresses newlines between multiple input objects, so you may have to use the -join operator to manually join the inputs with newlines in the desired format, e.g. (1, 2) -join "`n" | Set-Content -NoNewLine out.txt
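When comparing files written by git bash and by PowerShell, it can help to check how each file ends. The following git bash sketch does that; trailing_newline_kind is a hypothetical helper written for this illustration, and the three sample files merely imitate the cases described above:

```shell
#!/bin/bash
# Hypothetical helper (not part of the original scripts): reports how a file ends.
trailing_newline_kind() {
  local tail_hex
  # Hex of the last two bytes of the file, e.g. "0d0a" for CRLF.
  tail_hex=$(tail -c 2 "$1" | od -An -v -tx1 | tr -d ' \n')
  case "$tail_hex" in
    0d0a) echo "CRLF" ;;
    *0a)  echo "LF" ;;
    *)    echo "none" ;;
  esac
}

tmp_dir=$(mktemp -d)
printf 'line1\nline2'       > "$tmp_dir/no-newline.txt"  # LF-joined, no trailing newline
printf 'line1\nline2\n'     > "$tmp_dir/lf.txt"          # trailing LF
printf 'line1\r\nline2\r\n' > "$tmp_dir/crlf.txt"        # trailing CRLF, the Windows default

for name in no-newline lf crlf; do
  printf '%s: %s\n' "$name" "$(trailing_newline_kind "$tmp_dir/$name.txt")"
done
rm -r "$tmp_dir"
```

The first sample file corresponds to the -join plus -NoNewLine approach, while the last one corresponds to what the cmdlets append by default on Windows.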

If, in Windows PowerShell, you want to create UTF-8 files without a BOM, you can't use a file-writing cmdlet and must instead use .NET APIs directly (PowerShell (Core) 7+, by contrast, produces BOM-less UTF-8 files by default, consistently). .NET APIs create, and always have created, BOM-less UTF-8 files by default; e.g.:

[System.IO.File]::WriteAllLines() writes the elements of an array as lines to an output file, with each line terminated with a platform-native newline, i.e. CRLF (0xD 0xA) on Windows, and LF (0xA) on Unix-like platforms.

[System.IO.File]::WriteAllText() writes a single (potentially multi-line) string as-is to an output file.

Important: Always pass full paths to file-related .NET APIs, because PowerShell's current location (directory) usually differs from .NET's.
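To verify whether a file written by one of these approaches starts with a BOM, the first bytes can be inspected from git bash. This is only a sketch: bom_kind is a hypothetical helper, the sample files are created purely for illustration, and the check does not distinguish a UTF-16LE BOM from a UTF-32LE one:

```shell
#!/bin/bash
# Hypothetical helper: classifies a file by its leading byte-order mark, if any.
bom_kind() {
  local head_hex
  # Hex of the first three bytes of the file.
  head_hex=$(head -c 3 "$1" | od -An -v -tx1 | tr -d ' \n')
  case "$head_hex" in
    efbbbf) echo "UTF-8 BOM" ;;
    fffe*)  echo "UTF-16LE BOM" ;;   # note: UTF-32LE also starts with FF FE
    *)      echo "no BOM" ;;
  esac
}

tmp_dir=$(mktemp -d)
# Octal escapes are used so that the bytes are written portably.
printf '\357\273\277abc'     > "$tmp_dir/utf8-bom.txt"  # EF BB BF + "abc"
printf '\377\376a\000b\000'  > "$tmp_dir/utf16le.txt"   # FF FE + "ab" as UTF-16LE
printf 'abc'                 > "$tmp_dir/plain.txt"     # BOM-less

for name in utf8-bom utf16le plain; do
  printf '%s: %s\n' "$name" "$(bom_kind "$tmp_dir/$name.txt")"
done
rm -r "$tmp_dir"
```

A file produced with > in Windows PowerShell would be expected to fall into the UTF-16LE case, whereas one written via WriteAllText() should report no BOM.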