I have a Python performance issue. I need to merge two dataframes on an openstreetmaps function, and it is too slow. My first dataframe df1 is 35K rows long, and my second one 2K rows long. Full merge is then 70M. I suppose I won't be able to improve openstreetmaps response time but maybe my code could be faster. Can you see a way of improvments?

Thanks for answers.

import requests, json

from sqlalchemy import create_engine

url = 'http://router.project-osrm.org/route/v1/driving/'

conn = 'postgresql psycopg2://postgres:{0}@localhost/postgres'.format('')

my_conn = create_engine(conn)

def closest(s,df2):

distance_min = 1000000

duration_min = 1000000

place_min = ''

for i in range(len(df2)):

x = str(s['LONG']) ',' str(s['LAT']) ";" str(df2.loc[i, "LONG"]) "," str(df2.loc[i, "LAT"])

response = requests.get(url x)

data = json.loads(response.content)

duration = int((data['routes'][0]['duration']*0.95)/60)

if duration_min > duration:

distance_min = int(data['routes'][0]['distance']*0.000621371*1.609344)

duration_min = duration

place_min = df2.loc[i, "PLACE_NAME"]

s['CLOSEST_PLACE'] = place_min

s['DISTANCE'] = distance_min

s['DURATION'] = duration_min

return s

df1 = df1.apply(lambda x: closest(x, df2),axis=1)

Edit:

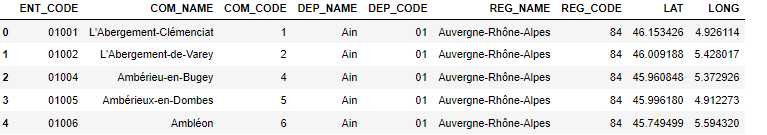

Head of df1 & df2 - it is French open data: basically I want to find the closest station (df2) to each city (df1)

ENT_CODE COM_NAME COM_CODE DEP_NAME DEP_CODE REG_NAME REG_CODE LAT LONG

0 01001 L'Abergement-Clémenciat 1 Ain 01 Auvergne-Rhône-Alpes 84 46.153426 4.926114

1 01002 L'Abergement-de-Varey 2 Ain 01 Auvergne-Rhône-Alpes 84 46.009188 5.428017

2 01004 Ambérieu-en-Bugey 4 Ain 01 Auvergne-Rhône-Alpes 84 45.960848 5.372926

3 01005 Ambérieux-en-Dombes 5 Ain 01 Auvergne-Rhône-Alpes 84 45.996180 4.912273

4 01006 Ambléon 6 Ain 01 Auvergne-Rhône-Alpes 84 45.749499 5.594320

5 01007 Ambronay 7 Ain 01 Auvergne-Rhône-Alpes 84 46.005591 5.357607

6 01008 Ambutrix 8 Ain 01 Auvergne-Rhône-Alpes 84 45.936713 5.332809

7 01009 Andert-et-Condon 9 Ain 01 Auvergne-Rhône-Alpes 84 45.787357 5.657883

8 01010 Anglefort 10 Ain 01 Auvergne-Rhône-Alpes 84 45.909372 5.795160

9 01011 Apremont 11 Ain 01 Auvergne-Rhône-Alpes 84 46.205498 5.657815

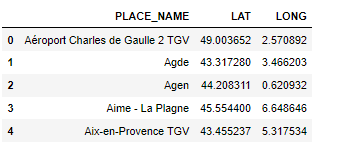

PLACE_NAME LAT LONG

0 Aéroport Charles de Gaulle 2 TGV 49.003652 2.570892

1 Agde 43.317280 3.466203

2 Agen 44.208311 0.620932

3 Aime - La Plagne 45.554400 6.648646

4 Aix-en-Provence TGV 43.455237 5.317534

5 Aix-les-Bains le Revard 45.688161 5.909371

6 Albertville 45.672977 6.383167

7 Amiens 49.890746 2.312592

8 Ancenis 47.369334 -1.177763

9 Angers Saint-Laud 47.464647 -0.556820

CodePudding user response:

The following items come to mind:

- See if the page allows to query multiple locations at once (so you do only one query per row of

df1instead of looping through each row ofdf2) - Use a

threadPoolExecutorto query the page for the locations concurrently - Do the calculations as the

threadPoolExecutorreturns the values

CodePudding user response:

Below is a lot of code, but the general idea is that you don't need to measure the distance between every pair of N=35K cities and and M=2K stations which is prohibitively slow and O(N*M).

You only care about the closest station to each city which means that you can do a pre-filtering step and avoid making 70M request calls.

The steps are:

- Convert each city and station from LAT/LONG to X,Y,Z

- Build a station-rtree of the station which takes O(M) time. An rtree is like a spatial binary search tree

- Iterate through the cities using the station-rtree to find the closest station to each city in euclidean measurements (this is an approximation). This takes O(N*log2(M)) time

- Finding the closest pairs used to be O(N*M) = 70M, but is now O(M) O(N*log(M)) ~= 387K with the rtree (not 100% sure on the time complexity...)

- Now you have a list of approximate closest pairs "as the crow flies" which you can then use the external API to find the actual driving dist/time

- The number of API calls went from 70M to 35K (once for each city). This is still a lot of calls

This is an approximate solution since a city/station might be very close in euclidean space, but very far in driving space (i.e. an indirect route due to a mountain in between). You can hedge against this by using the API to measure the k closest stations to each city. The code below shows k=2

import pandas as pd

import numpy as np

import math

import io #<-- just used to read in the test data

#Package information https://rtree.readthedocs.io/en/latest/

from rtree import index #<-- retree package requires "pip install rtree" (or conda etc)

#input test data

cities = pd.read_csv(io.StringIO("""

ENT_CODE,COM_NAME,COM_CODE,DEP_NAME,DEP_CODE,REG_NAME,REG_CODE,LAT,LONG

01001,L'Abergement-Clémenciat,1,Ain,01,Auvergne-Rhône-Alpes,84,46.153426,4.926114

01002,L'Abergement-de-Varey,2,Ain,01,Auvergne-Rhône-Alpes,84,46.009188,5.428017

01004,Ambérieu-en-Bugey,4,Ain,01,Auvergne-Rhône-Alpes,84,45.960848,5.372926

01005,Ambérieux-en-Dombes,5,Ain,01,Auvergne-Rhône-Alpes,84,45.996180,4.912273

01006,Ambléon,6,Ain,01,Auvergne-Rhône-Alpes,84,45.749499,5.594320

01007,Ambronay,7,Ain,01,Auvergne-Rhône-Alpes,84,46.005591,5.357607

01008,Ambutrix,8,Ain,01,Auvergne-Rhône-Alpes,84,45.936713,5.332809

01009,Andert-et-Condon,9,Ain,01,Auvergne-Rhône-Alpes,84,45.787357,5.657883

01010,Anglefort,10,Ain,01,Auvergne-Rhône-Alpes,84,45.909372,5.795160

01011,Apremont,11,Ain,01,Auvergne-Rhône-Alpes,84,46.205498,5.657815

"""))

stations = pd.read_csv(io.StringIO("""

PLACE_NAME,LAT,LONG

Aéroport Charles de Gaulle 2 TGV, 49.003652, 2.570892

Agde, 43.317280, 3.466203

Agen, 44.208311, 0.620932

Aime - La Plagne, 45.554400, 6.648646

Aix-en-Provence TGV ,43.455237, 5.317534

Aix-les-Bains le Revard, 45.688161, 5.909371

Albertville, 45.672977, 6.383167

Amiens, 49.890746, 2.312592

Ancenis, 47.369334, -1.177763

Angers Saint-Laud, 47.464647, -0.556820

"""))

#estimate x,y,z coordinates from LAT/LONG for each table

#stolen from https://stackoverflow.com/questions/1185408/converting-from-longitude-latitude-to-cartesian-coordinates

R = 6371 #approximate radius of earth in km

to_rad = math.pi/180 #convert from degrees to radians

station_xyz_coords = pd.DataFrame(index=stations.index)

station_xyz_coords['x'] = R*np.cos(stations['LAT']*to_rad)*np.cos(stations['LONG']*to_rad)

station_xyz_coords['y'] = R*np.cos(stations['LAT']*to_rad)*np.sin(stations['LONG']*to_rad)

station_xyz_coords['z'] = R*np.sin(stations['LAT']*to_rad)

city_xyz_coords = pd.DataFrame(index=cities.index)

city_xyz_coords['x'] = R*np.cos(cities['LAT']*to_rad)*np.cos(cities['LONG']*to_rad)

city_xyz_coords['y'] = R*np.cos(cities['LAT']*to_rad)*np.sin(cities['LONG']*to_rad)

city_xyz_coords['z'] = R*np.sin(cities['LAT']*to_rad)

#create an RTree index from df1

#this allows for fast lookups of the closest city for a given station

p = index.Property()

p.dimension = 3

station_rtree = index.Index(properties=p)

for station_ind,s in station_xyz_coords.iterrows():

station_rtree.insert(id=station_ind, coordinates=(s.x, s.y, s.z, s.x, s.y, s.z))

#find, "as the crow flies" the nearest town to each station

#this is an assumption that the closest direct distance will also have the closest driving distance

#can adjust the num_nearest below to get back the k nearest cities to each station

num_nearest = 2

nearby_station_cities = {

'city_ind':[],

'station_ind':[],

'euclidean_dist':[],

}

for city_ind,c in city_xyz_coords.iterrows():

nearby_stations = station_rtree.nearest((c.x, c.y, c.z, c.x, c.y, c.z), num_nearest)

for station_ind in nearby_stations:

nearby_station_cities['city_ind'].append(city_ind)

nearby_station_cities['station_ind'].append(station_ind)

#getting euclidean dist "for fun", wouldn't be needed for anything later

city_coords = city_xyz_coords.loc[city_ind][['x','y','z']].values

station_coords = station_xyz_coords.loc[station_ind][['x','y','z']].values

euclidean_dist = np.linalg.norm(station_coords-city_coords)

nearby_station_cities['euclidean_dist'].append(euclidean_dist)

euc_nearby_df = pd.DataFrame(nearby_station_cities)

#merge the station and city information into the table of closest pairs

euc_nearby_df = euc_nearby_df.merge(

cities,left_on='city_ind',right_index=True,

).merge(

stations,left_on='station_ind',right_index=True,

suffixes = ('_city','_station'),

)

#then iterate through the closest pairs calling the external API

for i,r in euc_nearby_df.iterrows():

x = '{},{};{},{}'.format(

r.LAT_station, r.LONG_station,

r.LAT_city, r.LONG_city,

)

#response = requests.get(url x)

#etc...

#your prior code