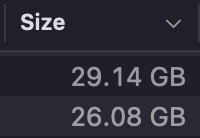

I have two 30 GB CSV files, each containing tens of millions of records.

They use tabs as the delimiter and are saved as UTF-16 (thanks, Tableau :-( ).

I wish to convert these files to utf-8-sig with commas instead of tabs.

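I tried the following code (some variables are declared earlier):

```python
import os

import pandas as pd

# Read the whole tab-delimited UTF-16 file, keeping every value as a string
csv_df = pd.read_csv(p, encoding=encoding, dtype=str, low_memory=False, on_bad_lines='error', sep='\t')

# Write it back comma-delimited as UTF-8 with BOM
csv_df.to_csv(os.path.join(output_dir, os.path.basename(p)), encoding='utf-8-sig', index=False)
```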
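For comparison, `pd.read_csv` without `chunksize` loads the entire file into a DataFrame before anything is written, which is hard to do with 30 GB of input. A minimal sketch of roughly the same transformation done row by row with the standard `csv` module, assuming the source is UTF-16, tab-delimited, and uses standard CSV quoting; `convert_tsv_to_csv` is a hypothetical helper name. Memory use stays flat, though every row is still parsed, so it will not be as fast as a plain re-encode.

```python
import csv


def convert_tsv_to_csv(src_path, dst_path, src_encoding='utf-16',
                       dst_encoding='utf-8-sig'):
    """Stream a tab-delimited file into a comma-delimited one, re-encoding it.

    Hypothetical helper. Returns the number of rows written.
    """
    rows = 0
    # newline='' is what the csv module expects for both reading and writing.
    with open(src_path, 'r', encoding=src_encoding, newline='') as src, \
         open(dst_path, 'w', encoding=dst_encoding, newline='') as dst:
        reader = csv.reader(src, delimiter='\t')
        writer = csv.writer(dst)  # default: comma delimiter, minimal quoting
        for row in reader:
            writer.writerow(row)
            rows += 1
    return rows
```

Because `csv.writer` quotes any field that itself contains a comma, this is safer than a plain find-and-replace of tabs with commas.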

I have also tried the approach from "Convert utf-16 to utf-8 using python".

Both approaches are very slow and practically never finish.

Is there a better way to make the conversion? Maybe Python is not the best tool for this?

Ideally, I would love the data to be stored in a database, but I'm afraid this is not a plausible option at the moment.
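If a database does become an option, SQLite ships with Python's standard library and needs no server. A minimal sketch, assuming a tab-delimited UTF-16 file whose first row is a header and whose rows all have the same number of fields; `load_tsv_into_sqlite`, the untyped columns and the batch size are illustrative assumptions. Rows are inserted in batches with `executemany` and committed once at the end, which is usually much faster than committing each row.

```python
import csv
import itertools
import sqlite3


def load_tsv_into_sqlite(src_path, db_path, table, encoding='utf-16',
                         batch_size=10_000):
    """Hypothetical helper: stream a tab-delimited file into an SQLite table.

    Assumes the first row is a header whose names contain no double quotes,
    and that every row has as many fields as the header. Returns rows inserted.
    """
    con = sqlite3.connect(db_path)
    total = 0
    try:
        with open(src_path, encoding=encoding, newline='') as src:
            reader = csv.reader(src, delimiter='\t')
            header = next(reader)
            cols = ', '.join(f'"{c}"' for c in header)
            marks = ', '.join('?' * len(header))  # one placeholder per column
            con.execute(f'CREATE TABLE IF NOT EXISTS "{table}" ({cols})')
            insert = f'INSERT INTO "{table}" VALUES ({marks})'
            while True:
                # Pull the next batch of rows without reading the whole file.
                batch = list(itertools.islice(reader, batch_size))
                if not batch:
                    break
                con.executemany(insert, batch)
                total += len(batch)
        con.commit()  # single commit for all batches
    finally:
        con.close()
    return total
```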

Thanks!

Answer:

I converted a 35 GB file in two minutes. Note that you can tune performance by changing the constants at the top of the script.

```python
# Tune these constants for your machine.
BUFFER_SIZE_READ = 1000000  # characters read per chunk; depends on available memory
MAX_LINE_WRITE = 1000  # number of lines to write at once

source_file_name = 'source_file_name'
dest_file_name = 'destination_file_name'
source_encoding = 'utf_16'
destination_encoding = 'utf_8'
BOM = True  # write a BOM, i.e. utf_8_sig output; don't combine with 'utf_8_sig'

lines_count = 0


def read_huge_file(file_name, encoding='utf_8', buffer_size=1000):
    """Yield lists of complete lines, reading buffer_size characters at a time."""

    def read_buffer(file_obj, size=1000):
        while True:
            data = file_obj.read(size)
            if not data:
                break
            yield data

    with open(file_name, encoding=encoding) as source_file:
        buffer_in = ''
        for buffer in read_buffer(source_file, size=buffer_size):
            buffer_in += buffer
            # split('\n') leaves a trailing '' when the chunk ends on a newline,
            # so pop() only ever keeps back an incomplete last line.
            lines = buffer_in.split('\n')
            buffer_in = lines.pop()
            if lines:  # a chunk may hold no complete line yet; keep reading
                yield lines
        if buffer_in:  # last line when the file has no trailing newline
            yield [buffer_in]


def process_data(data, dest_file):
    """Write lines to dest_file in batches of MAX_LINE_WRITE."""
    global lines_count
    lines = ''
    for line in data:
        lines_count += 1
        lines += line + '\n'
        if not lines_count % MAX_LINE_WRITE:
            dest_file.write(lines)
            lines = ''
    if lines:
        dest_file.write(lines)


# Open the destination once instead of re-opening it for every batch.
with open(dest_file_name, 'w', encoding=destination_encoding) as dest_file:
    if BOM:
        dest_file.write('\ufeff')
    for buffer_data in read_huge_file(source_file_name, encoding=source_encoding,
                                      buffer_size=BUFFER_SIZE_READ):
        process_data(buffer_data, dest_file)
```
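Like the script above, this only re-encodes; the tab delimiter is left as-is. The hand-written chunking loop can also be replaced by `shutil.copyfileobj`, which reads and writes fixed-size chunks and works on text-mode file objects. A minimal sketch, assuming a UTF-16 source with a BOM; `reencode` and its default chunk size are illustrative choices, not part of the answer above.

```python
import shutil


def reencode(src_path, dst_path, src_encoding='utf-16',
             dst_encoding='utf-8-sig', chunk_chars=1_000_000):
    """Hypothetical helper: re-encode a text file chunk by chunk.

    Reads chunk_chars decoded characters at a time and writes them through
    the destination encoder, so the whole file is never held in memory.
    """
    # newline='' disables newline translation so line endings are copied as-is.
    with open(src_path, 'r', encoding=src_encoding, newline='') as src, \
         open(dst_path, 'w', encoding=dst_encoding, newline='') as dst:
        shutil.copyfileobj(src, dst, chunk_chars)
```

With `dst_encoding='utf-8-sig'` the BOM is written once at the start of the file, so no manual `'\ufeff'` is needed.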