I have the following expression:

```dart
const value = 10 / 2;
```

In VS Code, if I hover over value, it shows me its type is double, which makes sense. But if I run the following lines of code, they all evaluate to true:

```dart
print(value is num); // true
print(value is double); // true
print(value is int); // true
```

`num` is the superclass, and `double` and `int` are sibling classes. How can a value be an instance of both sibling types and of the supertype at the same time?

Answer:

Try running this:

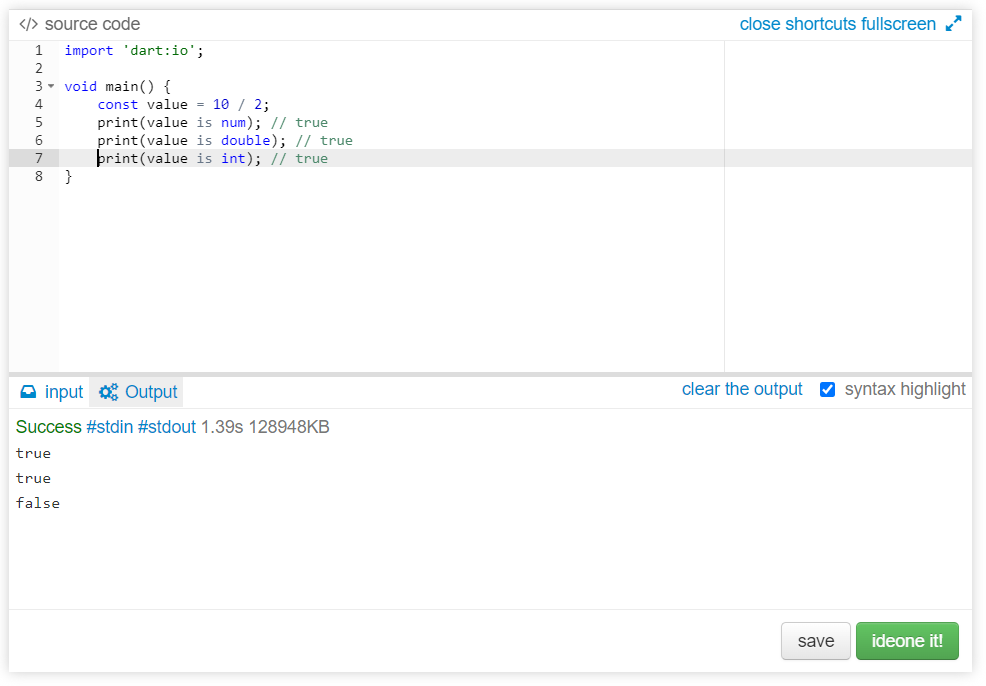

```dart
void main() {
  const value = 10 / 2;

  print(value is num); // true
  print(value is double); // true
  print(value is int); // false when run natively
}
```

When run natively (for example with `dart run`), the output is:

```
true
true
false
```

That is the expected result.

Answer:

You are compiling for the web and running in a browser. DartPad does that for you, and you get the same effect if you compile your program with the web compilers (dart2js or the dev-compiler).

When compiling Dart for the web, all numbers are compiled to JavaScript numbers, because that's the only way to get efficient execution.

JavaScript does not have integers as a separate type. All JavaScript numbers are IEEE-754 double precision floating point numbers, what Dart calls doubles.

So, when you have a number like 10 / 2, which would create a double in native Dart, it creates the JavaScript number 5.0 on the web. So does the integer literal 5. There is only one "5" number in the browser, so both integer and double computations create the same number.
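A small sketch of this, using only the Dart core library: the division result and the integer literal compare equal everywhere, but `identical` reports whether they are the very same object, which should only be the case when numbers are compiled to JavaScript numbers. The variable names are illustrative.

```dart
void main() {
  const fromDivision = 10 / 2; // statically typed as double
  const fromLiteral = 5; // statically typed as int

  // Numerically equal on every platform.
  print(fromDivision == fromLiteral); // true

  // The same object only where ints and doubles share one representation,
  // as with the JavaScript-based web compilers.
  print(identical(fromDivision, fromLiteral)); // true with dart2js/DDC, false natively
}
```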

To stay consistent with the type system, the value 5 therefore implements both the `int` and the `double` interface. A fractional value like 5.5 only implements `double`.
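To see the difference between a whole-valued and a fractional double, a sketch like the following can be run both in DartPad and natively with `dart run`; the comments state the results expected from the explanation above.

```dart
void main() {
  const whole = 10 / 2; // 5.0
  const fractional = 11 / 2; // 5.5

  print(whole is double); // true everywhere
  print(whole is int); // true on the web, false natively
  print(fractional is double); // true everywhere
  print(fractional is int); // false everywhere: 5.5 is not a whole number
}
```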

When running natively, integers and doubles are separate types, so `10 / 2` evaluates to a `double` that is not an `int`, and `value is int` is `false`.
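If code needs to behave the same on both platforms, it is safer not to branch on `is int` for values that may come from `/`. A minimal sketch, assuming a hypothetical helper named `isWholeNumber`: test the value instead of its runtime type, and use the integer division operator `~/` when an `int` is actually wanted.

```dart
/// Hypothetical helper: true if [n] is finite and has no fractional part.
/// Unlike `n is int`, this gives the same answer natively and on the web.
bool isWholeNumber(num n) => n.isFinite && n == n.truncate();

void main() {
  const quotient = 10 / 2; // statically a double

  print(isWholeNumber(quotient)); // true
  print(isWholeNumber(11 / 2)); // false

  // `~/` performs integer division and returns an int on every platform.
  const int intQuotient = 10 ~/ 2;
  print(intQuotient); // 5

  // Converting a double explicitly also yields an int.
  print(quotient.toInt()); // 5
}
```

The `isFinite` check comes first because `truncate()` throws for NaN and infinity.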

This is all described in the Dart language tour.