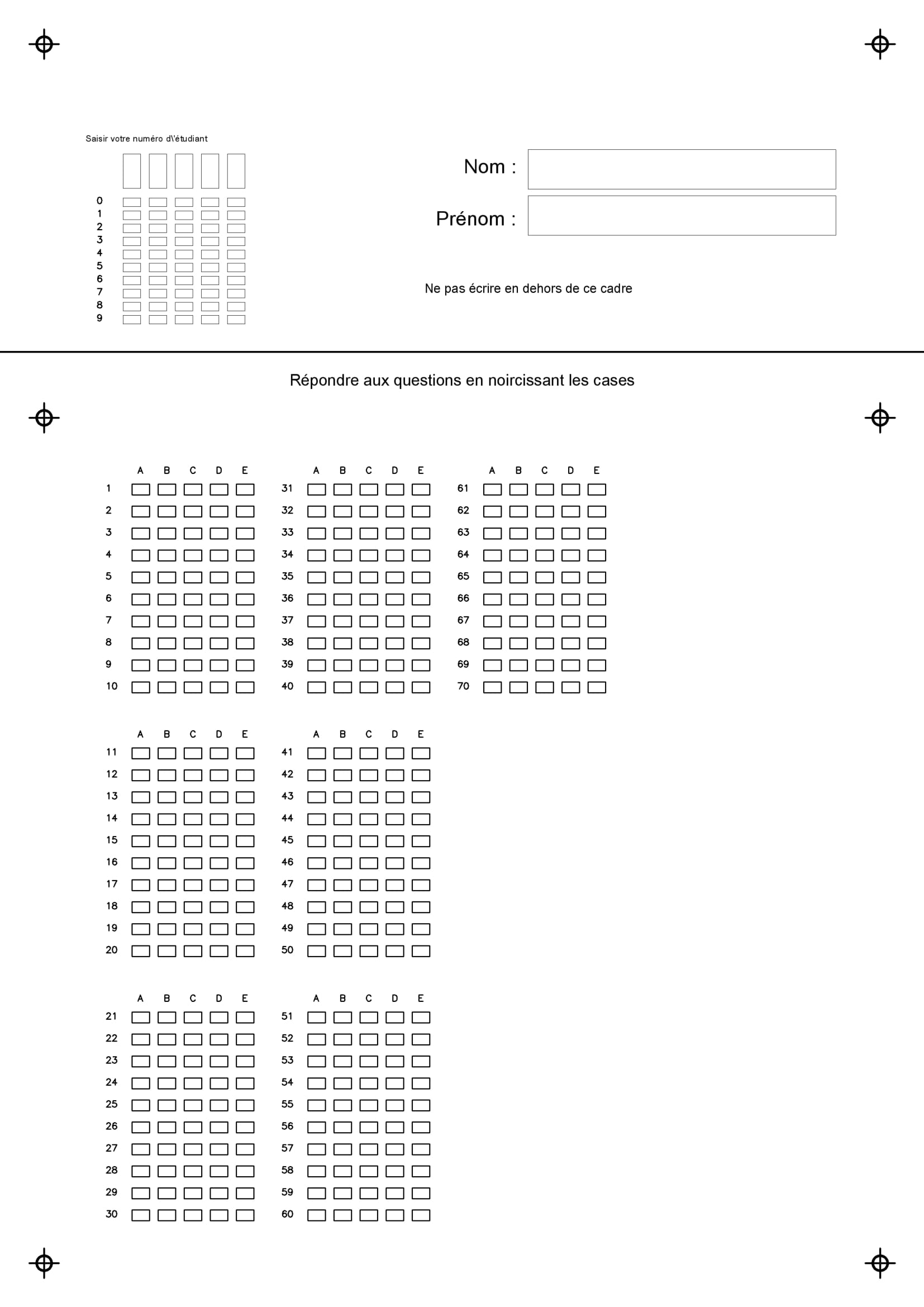

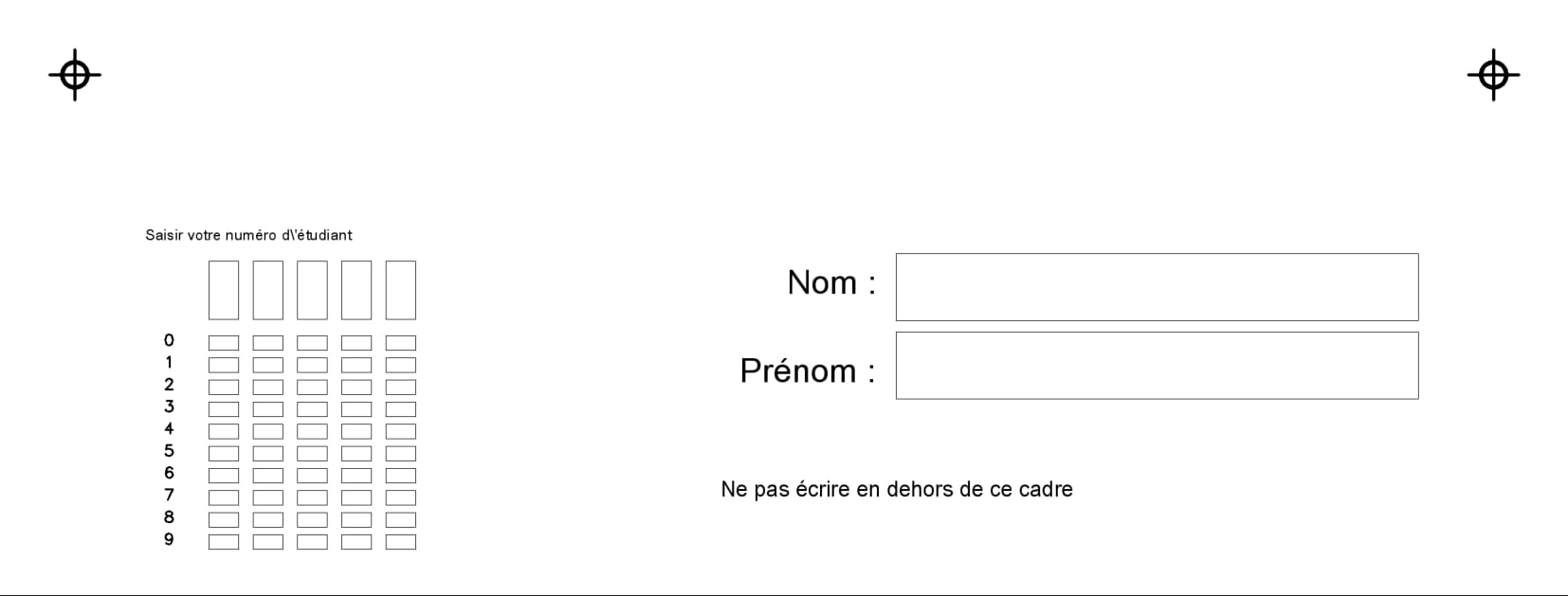

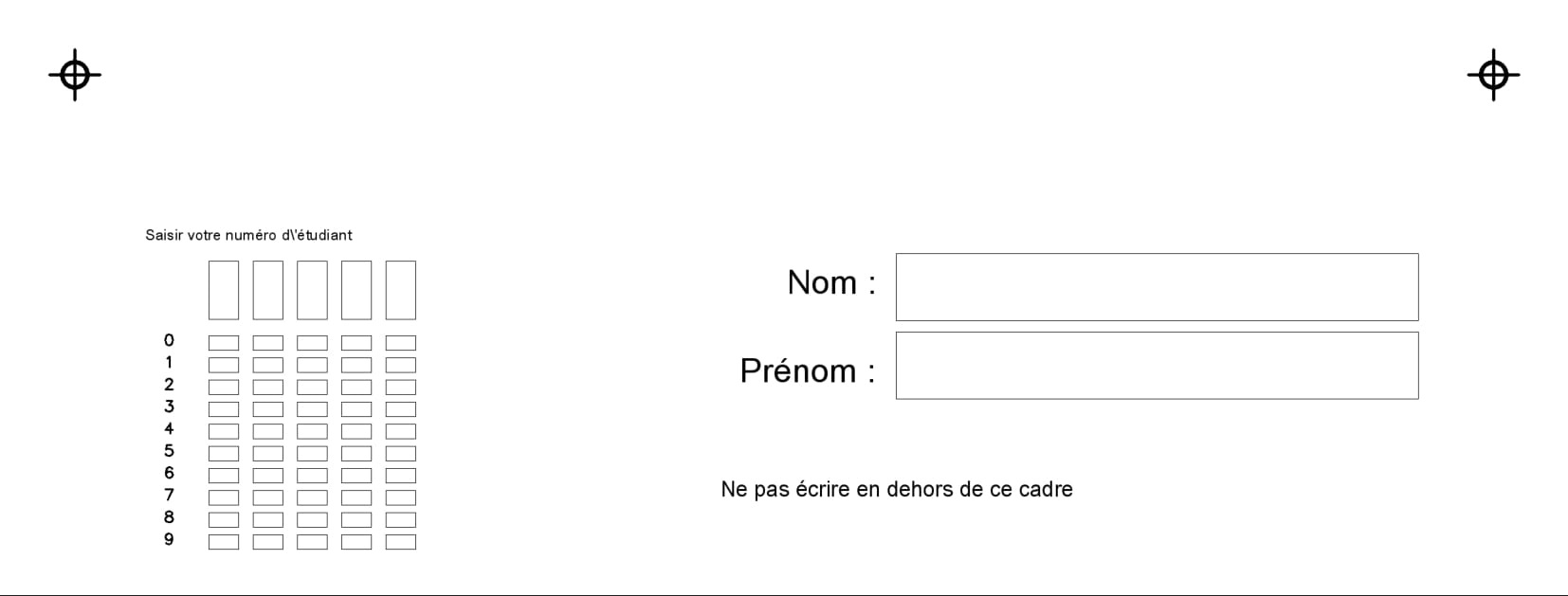

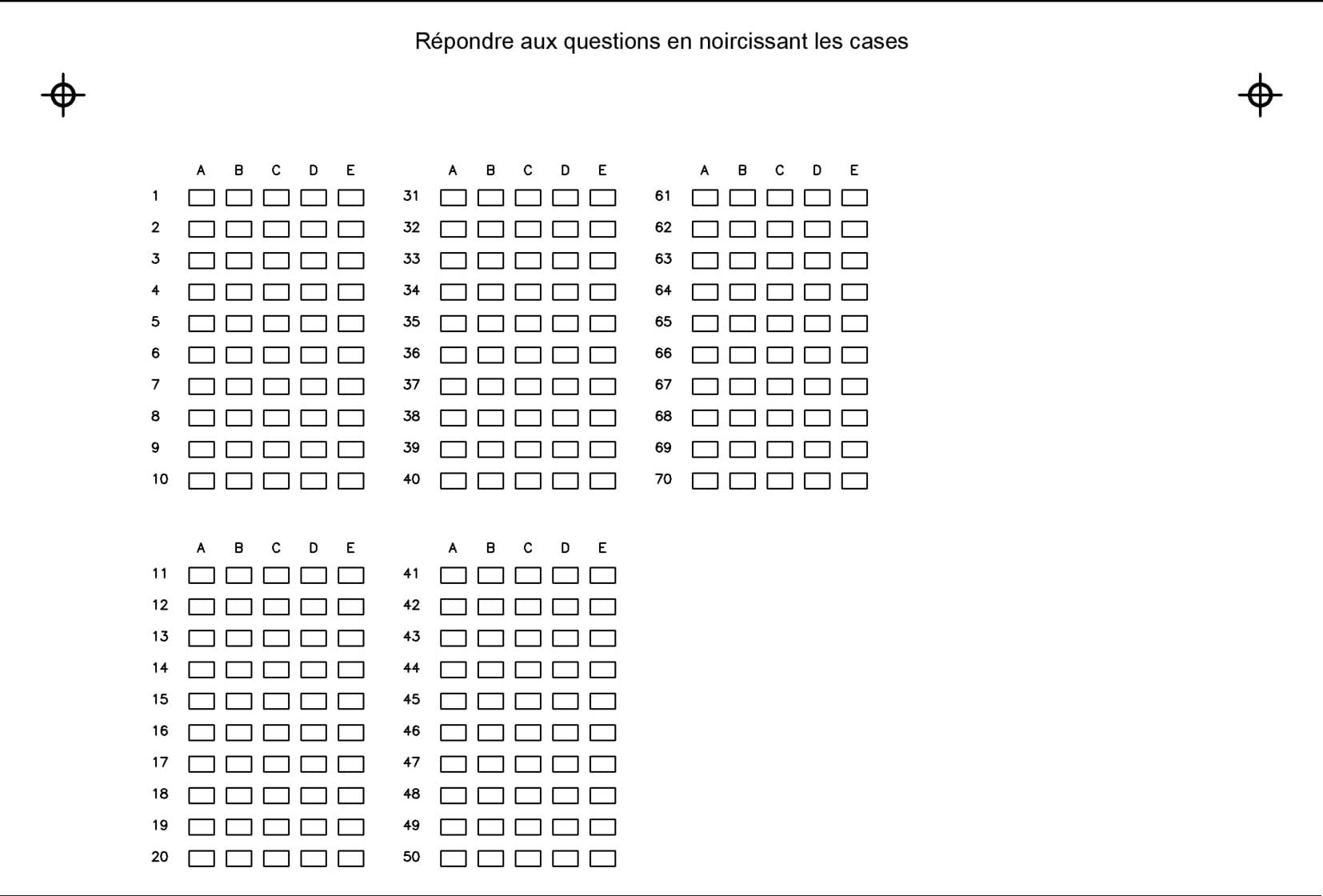

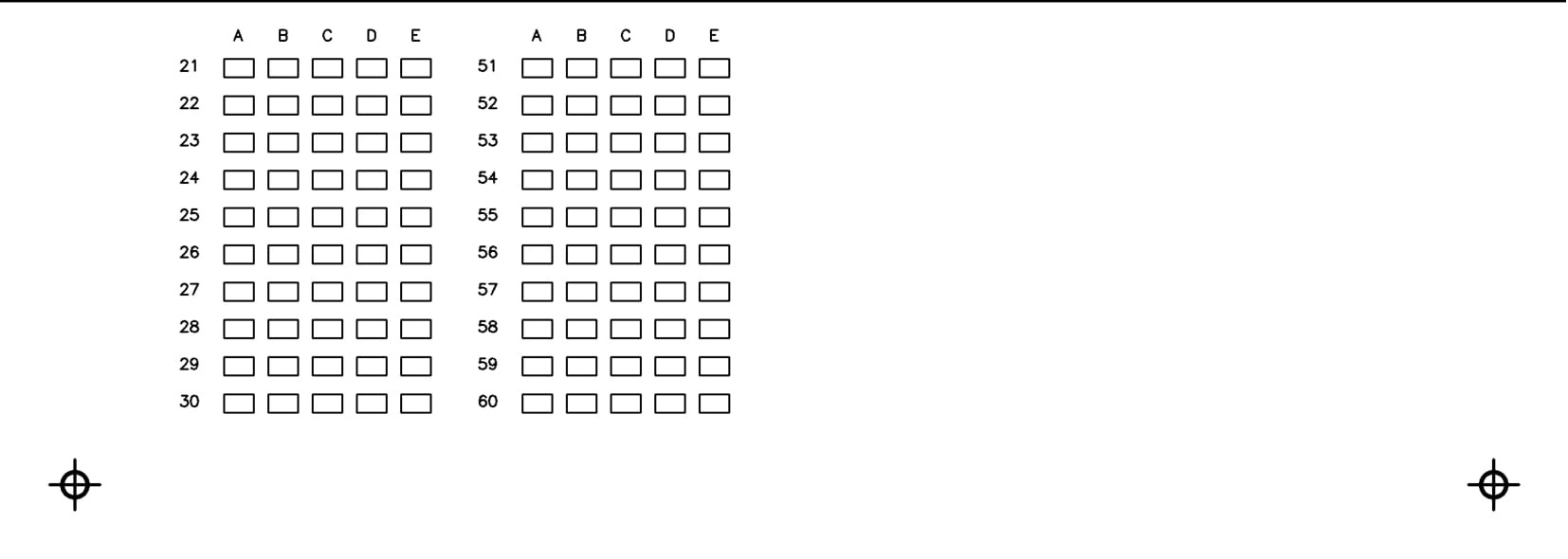

I have this picture below , i want to separate it to tow part, so i can work independently with every part alone , i added those black dots to make my algo detect the dots and separate the part but it's detect all the elements of paper it' doesn't work as i expected, i do not know the reason why

this is my code

import cv2

import numpy as np

image = cv2.imread('18936-model-1.jpg')

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

blurred = cv2.GaussianBlur(gray, (23, 23), 0)

cv2.imshow("blurred", blurred)

canny = cv2.Canny(blurred, 30, 30)

cnts, _ = cv2.findContours(canny.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

print(f"Contours in the image, %d" % (len(cnts)))

shape = image.copy()

cv2.drawContours(shape, cnts, -1, (0, 255, 0), 2)

cv2.imwrite('{}.png'.format('sunflower'), shape)

cv2.imshow("Edges", shape)

cv2.waitKey(0)

this is the original image

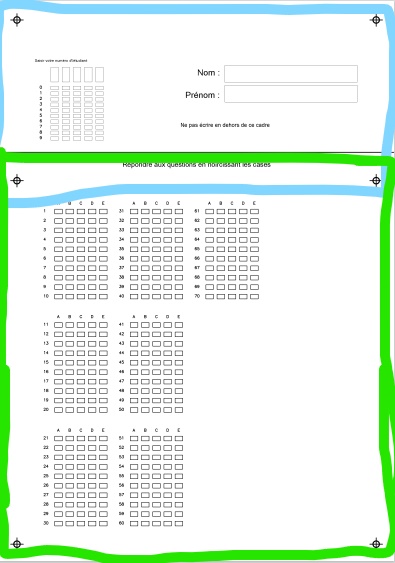

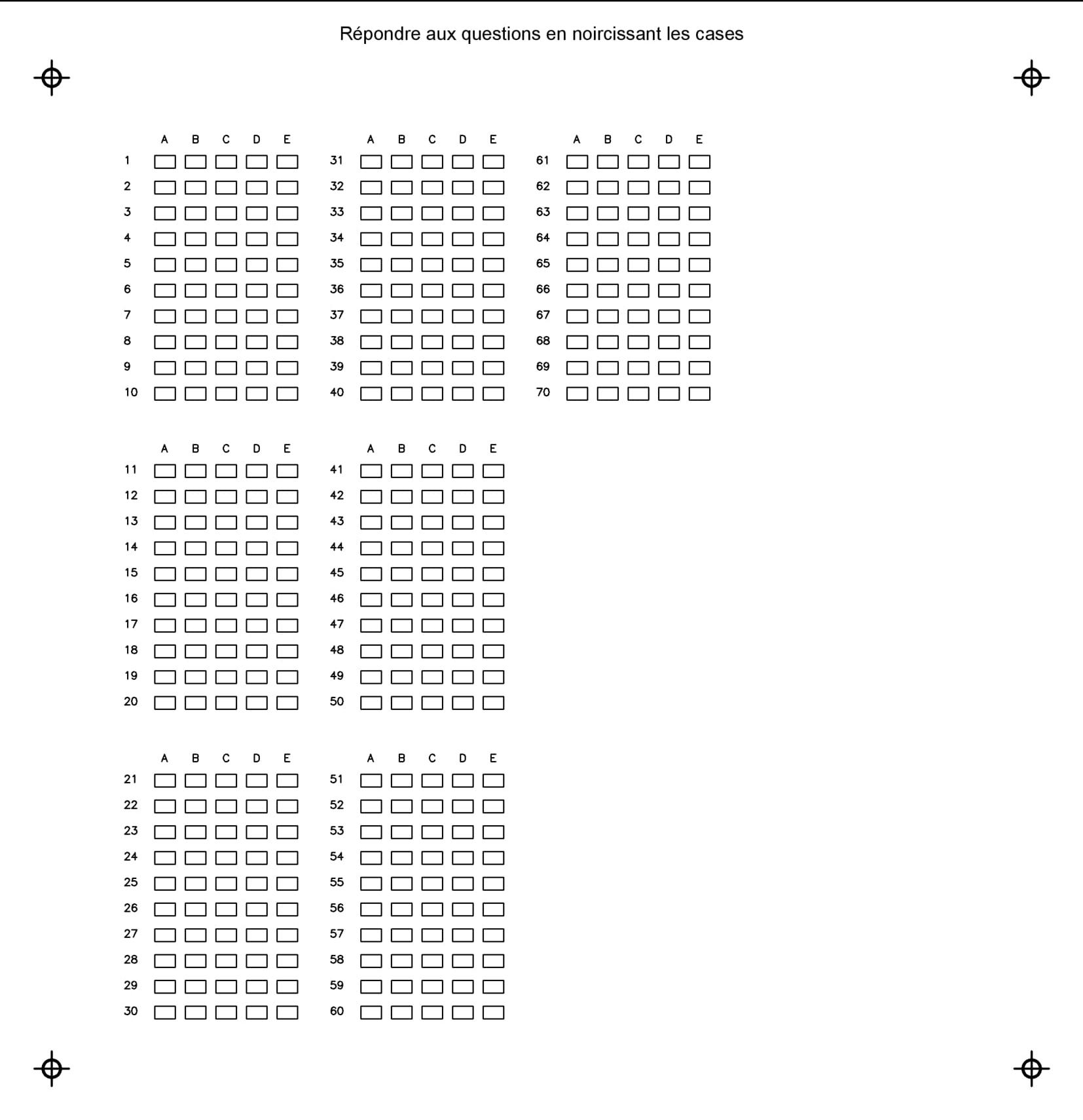

this is how i want separate my picture

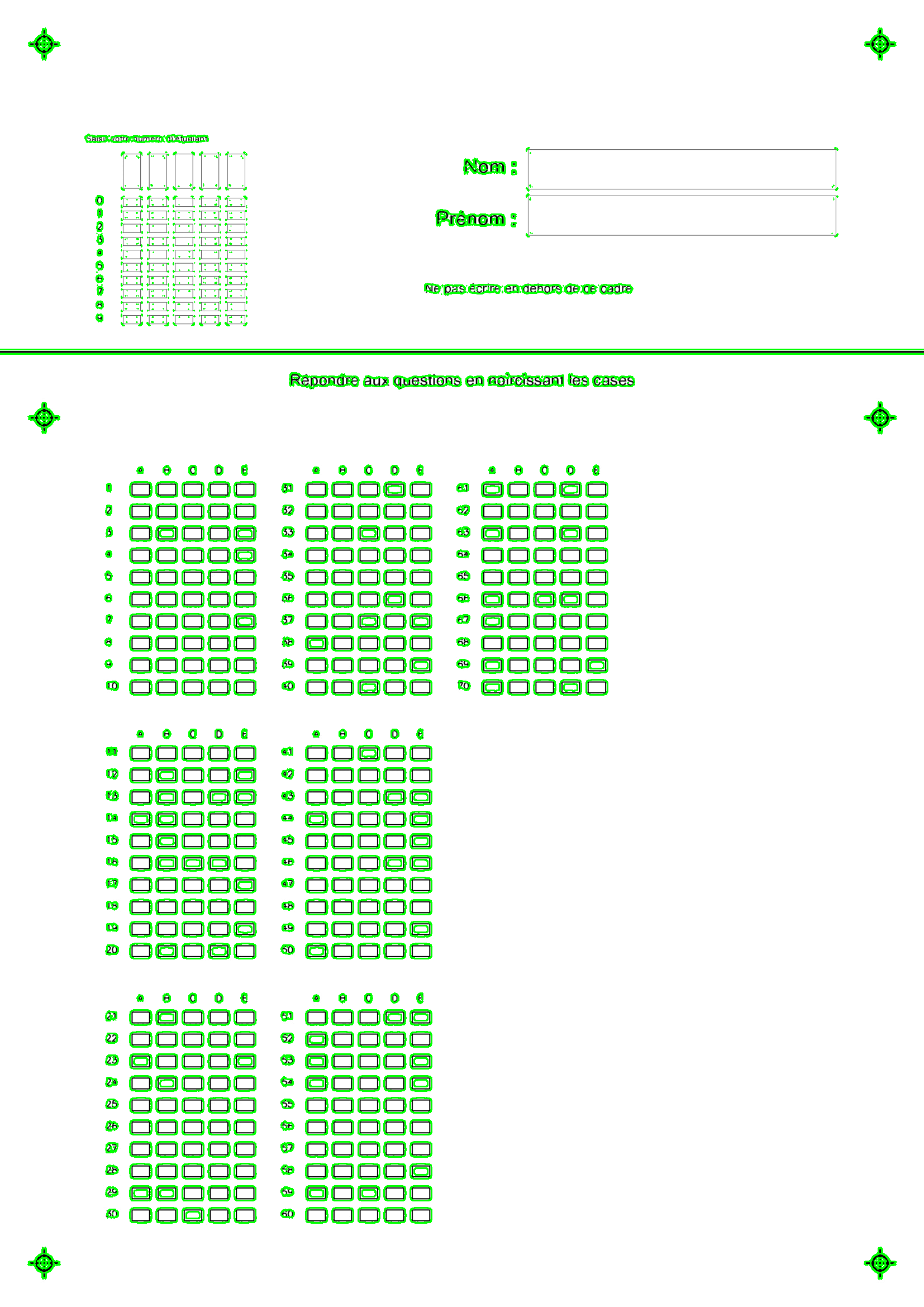

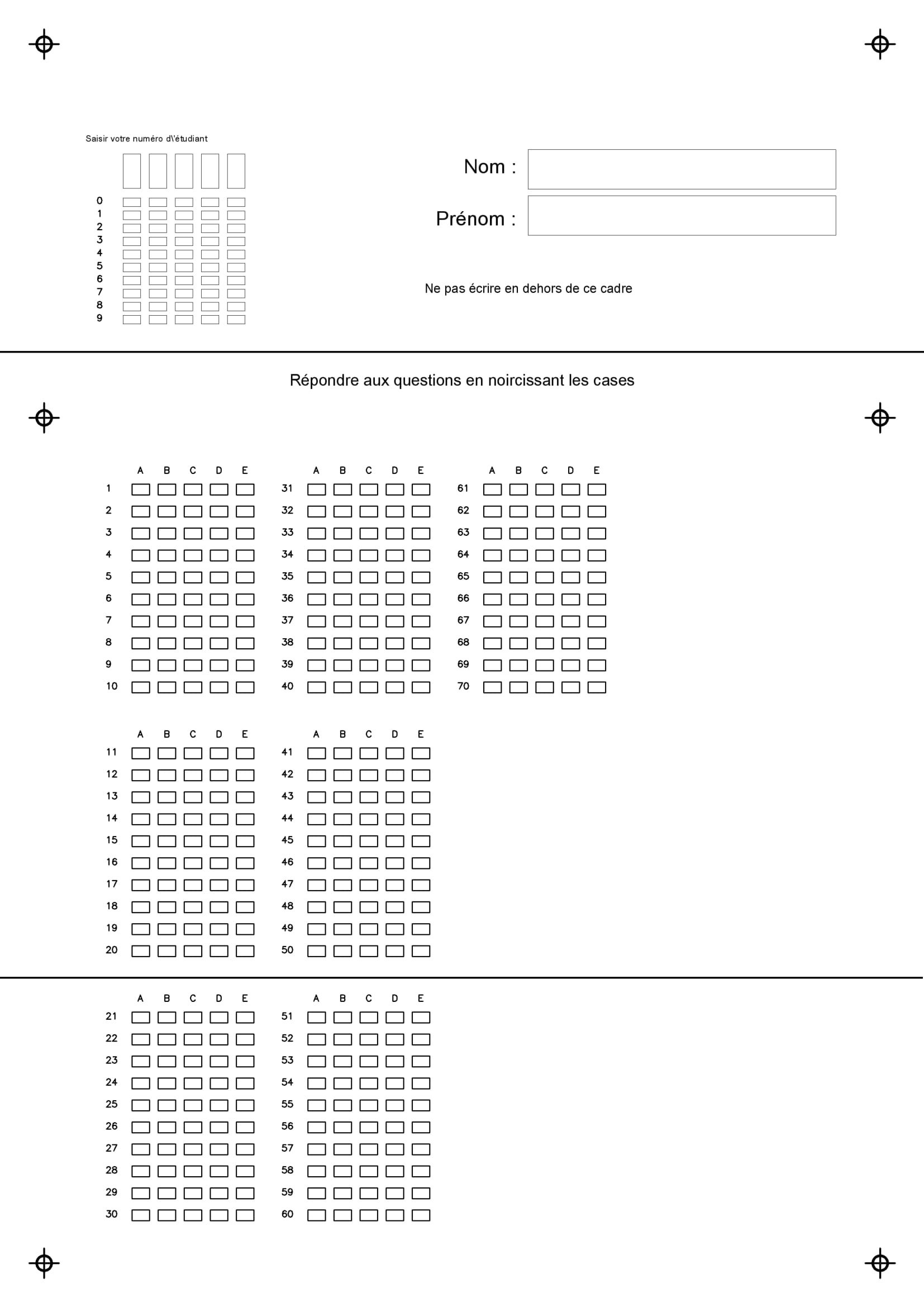

this is what i get

CodePudding user response:

Here's a possible solution. The idea is just to use the horizontal line to split the images. However, we don't really need the full image. The line extends along all the width, so I just extract a couple of first columns, reduced them to a column and search the position (or indices) where the maximum intensity value is present.

After I have this info, I can split the original image along that reference. Let's see the code:

# Imports

import numpy as np

import cv2

# Image path

path = "D://opencvImages//"

fileName = "xIe0M.jpg"

# Reading an image in default mode:

inputImage = cv2.imread(path fileName)

# To grayscale:

grayImage = cv2.cvtColor(inputImage, cv2.COLOR_BGR2GRAY)

# Otsu Threshold:

_, binaryImage = cv2.threshold(grayImage, 0, 255, cv2.THRESH_OTSU cv2.THRESH_BINARY_INV)

# Apply thinning:

binaryImage = cv2.ximgproc.thinning(binaryImage, None, 1)

# Show the image:

showImage("binaryImage", binaryImage)

# Get image dimensions:

(imageHeight, imageWidth) = binaryImage.shape[:2]

# Get ROIs:

roiHeight = imageHeight

roiWidth = int(0.01 * imageWidth)

# Store ROIs here:

verticalCropPoints = []

# Crop first and last row:

horizontalMargin = 2

binaryImage = binaryImage[0:imageHeight, horizontalMargin:imageWidth - horizontalMargin]

# Extract left and right ROIs:

# Set Cropping dimensions:

xStart = clamp((imageWidth - roiWidth), 0, imageWidth)

xEnd = roiWidth

yStart = 0

yEnd = roiHeight

# Crop the ROI:

currentRoi = binaryImage[yStart:yEnd, xStart:xStart xEnd]

# Image reduction to a column:

reducedImage = cv2.reduce(currentRoi, 1, cv2.REDUCE_MAX)

The first step involves converting the image to binary, then apply a thinning. This operation will normalize the horizontal width to one pixel. I don't really need more than one pixel for the horizontal line. This is the result so far:

It is very subtle, but this is the ROI (1% of the original width) reduced to just one column. Let's find where the maximum value is present (you can actually see a faint white pixel on the past image):

# Get indices of maximum values:

maxIndexCol = np.nonzero(reducedImage)

# Store all the indices here:

verticalCropPoints = np.append(verticalCropPoints, maxIndexCol[0])

Now I have the vertical position of the horizontal line. Let's crop based on this info. The approach is implemented with a loop that crops iteratively until the last vertical coordinate is reached:

# Crop image:

cropImage = True

cropCounter = len(verticalCropPoints)

# Loop variables:

xStart = 0

xEnd = imageWidth

yPast = 0

j = 0

while cropImage:

if j < cropCounter:

y = int(verticalCropPoints[j])

yStart = yPast

yEnd = y

yPast = y

else:

yStart = yPast

yEnd = imageHeight

cropImage = False

currentCrop = inputImage[yStart:yEnd, xStart:xStart xEnd]

cv2.imshow("currentCrop", currentCrop)

cv2.waitKey(0)

# Increment step counter:

j = 1

These are the results (scaled for the post, open them in new tabs to see them at full resolution):

Let's check out how the algorithm scales to more than one horizontal line. This is a modification of your original image, with two horizontal lines:

Which yields these individual images:

There's an auxiliary function I use, to clamp a number to a valid range:

# Clamps an integer to a valid range:

def clamp(val, minval, maxval):

if val < minval: return minval

if val > maxval: return maxval

return val

You will also need OpenCV's extended image processing module in order to skeletonize/thin the image.