I was creating a test SQL database with the following code:

```python
import sqlite3

connection = sqlite3.connect('tv.sqlite')
cursor = connection.cursor()

# Create the table (connect() already created the database file)
sql_query = """CREATE TABLE tvshows (
    id integer PRIMARY KEY,
    producer text NOT NULL,
    language text NOT NULL,
    title text NOT NULL
)"""

# Execute the SQL query
cursor.execute(sql_query)
connection.commit()
```

This way I get to define the table columns and their types myself.

However, I want to import a large dataset of 50k entries from a CSV file I have. The CSV doesn't have headers (but even if it did, I'd still have the same question).

I found this code online:

```python
import pandas
import sqlite3

connection = sqlite3.connect('tv.sqlite')
cursor = connection.cursor()

# Reads csv and converts to sql
df = pandas.read_csv('tvshows_db.csv')
df.to_sql('tv', connection)
```

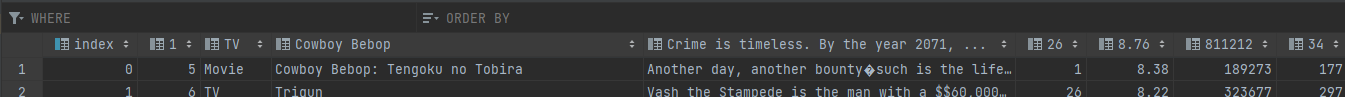

How did it create the table without me setting the column names (it used the first TV show entry as the header values) or their types (data type, character limit, etc.)? And how can I set them myself when using pandas.to_sql?
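One way to see what `to_sql` generated is to ask SQLite for the table's column list with `PRAGMA table_info`. The sketch below is self-contained: it assumes a small made-up headerless CSV (held in `io.StringIO`) in place of `tvshows_db.csv` and an in-memory database instead of `tv.sqlite`, then runs the same two pandas calls as the snippet above.

```python
import io
import sqlite3

import pandas

# Hypothetical stand-in for tvshows_db.csv (no header row).
csv_text = """1,BBC,English,Sherlock
2,Netflix,Spanish,Money Heist
3,HBO,English,The Wire
"""

# In-memory database for the demo instead of 'tv.sqlite'.
connection = sqlite3.connect(':memory:')

# Same calls as the snippet above: default read_csv, then to_sql.
df = pandas.read_csv(io.StringIO(csv_text))
df.to_sql('tv', connection)

# PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
columns = [(row[1], row[2]) for row in connection.execute("PRAGMA table_info(tv)")]
print(columns)
print(len(df), "data rows")
```

With this input the first CSV row turns into the column names `1`, `BBC`, `English` and `Sherlock`, only two data rows remain, and pandas adds an `index` column for the DataFrame index. The SQL types (`INTEGER`, `TEXT`) are inferred from the DataFrame's dtypes.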

Answer:

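By default, `read_csv` treats the first row of the file as the header (`header='infer'`), so your first TV show became the column names and `to_sql` created the table from them. For a CSV without headers, pass `header=None` so every row is kept as data, and set your own column names with `names` before you push to the db. (Assigning `df.columns` after a default `read_csv` call only renames the columns; the first data row has already been used up as the header.)

```python
# Assumes the CSV columns are id, producer, language, title, in that order
df = pandas.read_csv('tvshows_db.csv', header=None,
                     names=['id', 'producer', 'language', 'title'])
```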
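A quick way to check the difference is to read the same headerless data both ways and compare the column labels and row counts. This is a minimal sketch; the CSV content is made up and held in `io.StringIO`, and the column names are assumed to match the table from the question.

```python
import io

import pandas

# Hypothetical headerless CSV standing in for tvshows_db.csv.
csv_text = """1,BBC,English,Sherlock
2,Netflix,Spanish,Money Heist
3,HBO,English,The Wire
"""

# Default header='infer': the first row becomes the column labels.
df_default = pandas.read_csv(io.StringIO(csv_text))

# header=None keeps every row as data; names= supplies the labels.
df = pandas.read_csv(io.StringIO(csv_text), header=None,
                     names=['id', 'producer', 'language', 'title'])

print(list(df_default.columns), len(df_default))
print(list(df.columns), len(df))
```

The default read loses the first show to the header and keeps two rows, while the `header=None` read keeps all three under the chosen names.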

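For column types: when `to_sql()` creates the table itself, pandas derives the SQL types from the DataFrame's dtypes (integers become `INTEGER`, strings become `TEXT`, and so on), and SQLite does not enforce character limits anyway. If you want your own definitions, create the table first as in your first code chunk, then set the `if_exists` parameter of `to_sql()` to `'append'`. pandas will then insert into the existing table, keeping its column types, instead of creating a new one. Use the same table name (`tvshows`, not `tv`) and pass `index=False`; otherwise pandas also tries to write the DataFrame index into an `index` column that your table doesn't have:

```python
df.to_sql('tvshows', connection, if_exists='append', index=False)
```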
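Putting the pieces together, here is a minimal end-to-end sketch of the create-then-append approach. It assumes a small made-up headerless CSV (via `io.StringIO`) and an in-memory database; swap in `'tvshows_db.csv'` and `'tv.sqlite'` for the real files.

```python
import io
import sqlite3

import pandas

# Hypothetical stand-in for tvshows_db.csv: no header row, columns assumed
# to be in the order id, producer, language, title.
csv_text = """1,BBC,English,Sherlock
2,Netflix,Spanish,Money Heist
3,HBO,English,The Wire
"""

# In-memory database for the demo; use 'tv.sqlite' to write to a file.
connection = sqlite3.connect(':memory:')

# 1. Create the table yourself, with your own types and constraints.
connection.execute("""CREATE TABLE tvshows (
    id integer PRIMARY KEY,
    producer text NOT NULL,
    language text NOT NULL,
    title text NOT NULL
)""")

# 2. Read every CSV row as data and name the columns after the table's columns.
df = pandas.read_csv(io.StringIO(csv_text), header=None,
                     names=['id', 'producer', 'language', 'title'])

# 3. Insert into the existing table; index=False skips the DataFrame index.
df.to_sql('tvshows', connection, if_exists='append', index=False)
connection.commit()

# The declared column types come from the CREATE TABLE statement above.
columns = [(row[1], row[2]) for row in connection.execute("PRAGMA table_info(tvshows)")]
rows = connection.execute("SELECT id, title FROM tvshows ORDER BY id").fetchall()
print(columns)
print(rows)
```

If you would rather let pandas create the table, `to_sql` also accepts a `dtype` argument; with a plain `sqlite3` connection its values are SQL type names given as strings, for example `dtype={'id': 'INTEGER'}`.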