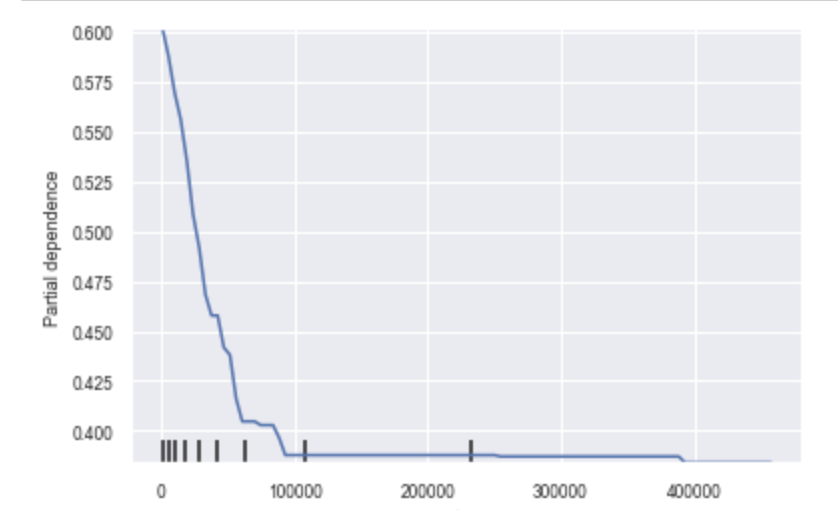

I am not sure what the y-axis of my PDP implies? Is that the probability for my target feature to be 1 (binary classification) or something else?

CodePudding user response:

If you do the partial dependence plot of column a and you want to interpret the y value at x = 0.0, the y-axis value represent the average probability of class 1 computed by

- changing value of column

ain all rows in your dataset to0.0 - predicting all changed row with your fitted model

- averaging the probability given by the model

I may not good at explaining but you can read more about PDP at https://christophm.github.io/interpretable-ml-book/pdp.html. Hope this help :)

CodePudding user response:

Generally speaking, we can produce a classifier from a function, f, producing a real-value output plus a threshold. We call the output an 'activation'. If the activation meets a threshold condition is met, the we say the class is detected:

is_class := ( f(x0, x1, ...) > threshold )

and

activation = f(x0, x1, ...)

PDP plots simply show activation values as they change in response to changes in an input value (we ignore the threshold). That is might plot:

f(x0, x, x2, x3, ...)

as a single input x varies. Typically, we hold the others constant, although we can also plot in 2d and 3d.

Sometimes we're interested in:

- how a single change the activation

- how multiple inputs independently change the activation

- how multiple activations change based on different inputs, and so on.

Strictly speaking, we need not even be talking about a classifier when looking a PDP plots. Any function that productions a real-value output (an activation) in response to one of more real-valued feature inputs that we can vary allows us to produce PDP plots.

Classifier activations need not be, and often should not be, interpreted as probabilities, as others have written. In very many cases, this is simply just incorrect. Nevertheless, the analysis of the activation levels is of interest to us, independently of whether the activations represent probabilities: in PDP plots, we can see, for example, which feature values produce strong change - more horizontal plots may imply a worthless feature. Similarly, in RoC plots, we explicitly examine information about the true-positive and false-position detection rates that result for varying the threshold of activation values. In both cases, there's no necessity that the classifier produce probabilities as its activation.

Interpretation of PDP plots is fraught with dangers. At a minimum, you need to be clear about what is being held constant as a input feature is varied. Were the other features set to zero (a good choice for linear models)? Did we the set them to their most common values in the test set? Or the most common values for a known class in a sample? Without this information, the vertical axis may be less helpful.

Knowing that an activation is a probability also doesn't seem to helpful in PDP plots -- you can't expect the area under it to sum to one. Perhaps the most useful thing you might find is error cases, where output probabilities are not in the range 0..1.