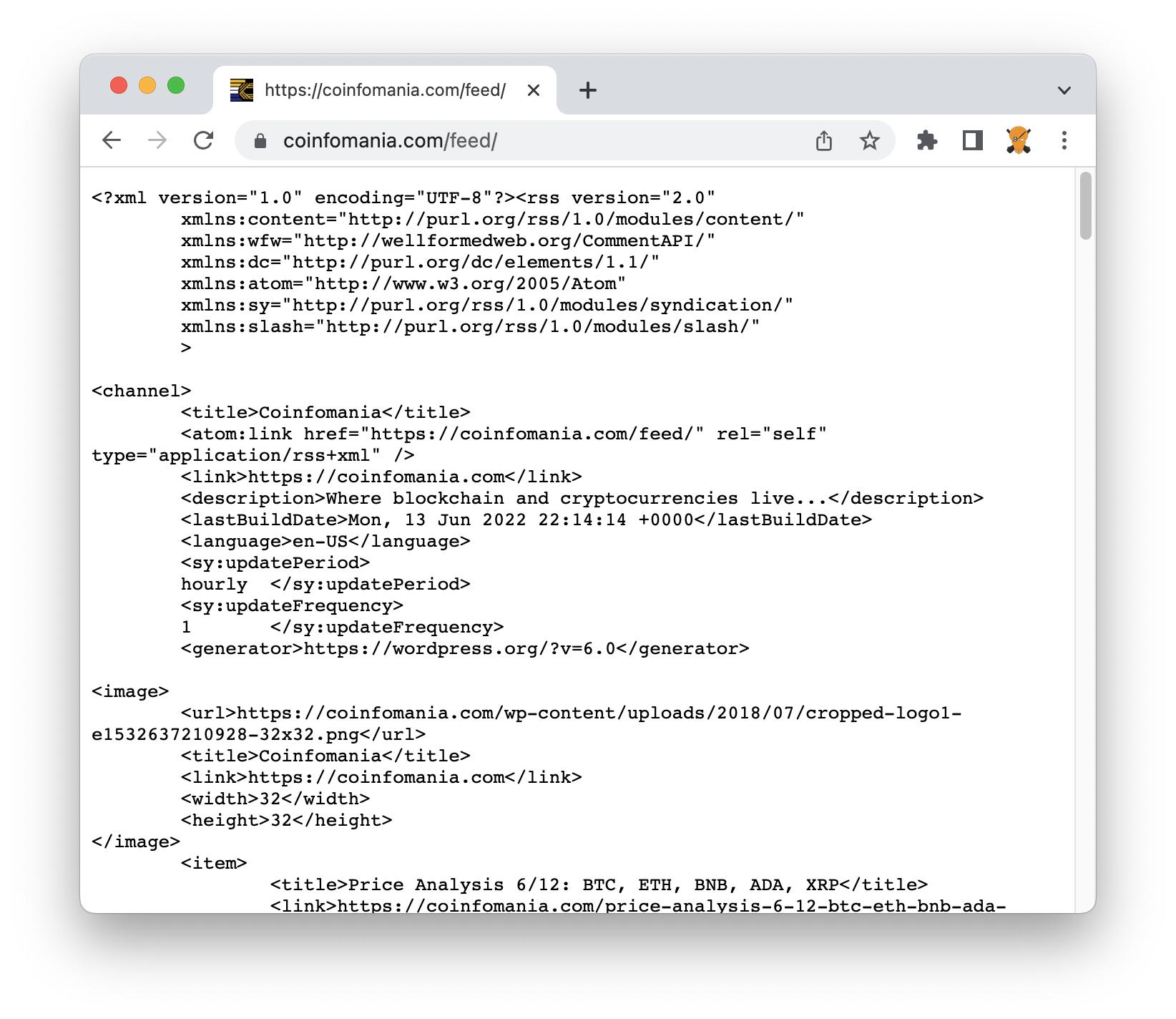

I am trying to load a URL from

When I try to load data from the same URL with aiohttp using the following code, I get an error:

    from datetime import datetime
    from urllib.parse import urlparse
    from pathlib import Path
    import asyncio
    import aiohttp
    import os

    async def fetch_url(url, save_to_file=False):
        timeout = aiohttp.ClientTimeout(total=30)
        async with aiohttp.ClientSession(timeout=timeout, trust_env=True) as session:
            async with session.get(url, raise_for_status=True, ssl=False) as response:
                text = await response.text()
                if save_to_file:
                    current_timestamp = datetime.now().strftime('%m_%d_%Y_%H_%M_%S')
                    name_part = urlparse(url).netloc
                    # https://stackoverflow.com/questions/12517451/automatically-creating-directories-with-file-output
                    file_name = Path(os.path.join(os.getcwd(), 'feeds', name_part + '_' + current_timestamp + '.rss'))
                    file_name.parent.mkdir(exist_ok=True, parents=True)
                    with open(file_name, 'w+') as f:
                        f.write(text)

    url = 'https://coinfomania.com/feed/'
    # if running inside Jupyter notebook
    await fetch_url(url, save_to_file=True)

Error

    Traceback (most recent call last):
      File "<stdin>", line 1, in <module>
      File "/Users/vr/.pyenv/versions/3.10.0/lib/python3.10/asyncio/runners.py", line 44, in run
        return loop.run_until_complete(main)
      File "/Users/vr/.pyenv/versions/3.10.0/lib/python3.10/asyncio/base_events.py", line 641, in run_until_complete
        return future.result()
      File "<stdin>", line 4, in fetch_url
      File "/Users/vr/.local/share/virtualenvs/notebooks-dCnpXdWv/lib/python3.10/site-packages/aiohttp/client.py", line 1138, in __aenter__
        self._resp = await self._coro
      File "/Users/vr/.local/share/virtualenvs/notebooks-dCnpXdWv/lib/python3.10/site-packages/aiohttp/client.py", line 640, in _request
        resp.raise_for_status()
      File "/Users/vr/.local/share/virtualenvs/notebooks-dCnpXdWv/lib/python3.10/site-packages/aiohttp/client_reqrep.py", line 1004, in raise_for_status
        raise ClientResponseError(
    aiohttp.client_exceptions.ClientResponseError: 403, message='Forbidden', url=URL('https://coinfomania.com/feed/')

CodePudding user response:

The website treats your request as unusual and blocks it, most likely because aiohttp's default User-Agent identifies the client as a script rather than a browser. You need to make it look like a normal request sent by a browser by adding headers to it. Here is a list of common headers:

    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:98.0) Gecko/20100101 Firefox/98.0",
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.5",
        "Accept-Encoding": "gzip, deflate",
        "Connection": "keep-alive",
        "Upgrade-Insecure-Requests": "1",
        "Sec-Fetch-Dest": "document",
        "Sec-Fetch-Mode": "navigate",
        "Sec-Fetch-Site": "none",
        "Sec-Fetch-User": "?1",
        "Cache-Control": "max-age=0",
    }

To add them to your request, change

    async with session.get(url, raise_for_status=True, ssl=False) as response:

to

    async with session.get(url, raise_for_status=True, ssl=False, headers=headers) as response:
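Putting it together, here is a minimal sketch of the whole function with the headers set once on the session instead of on each request. `BROWSER_HEADERS` is just a trimmed subset of the list above, and `feed_path` is a hypothetical helper added here to keep the file-naming logic separate. Which headers the site actually checks is an assumption, so you may need more of them, or fewer.

```python
from datetime import datetime
from pathlib import Path
from urllib.parse import urlparse

import aiohttp

# Assumption: a trimmed subset of the headers above; the site may check others.
BROWSER_HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:98.0) Gecko/20100101 Firefox/98.0",
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.5",
}


def feed_path(url, base_dir, now):
    """Hypothetical helper: build <base_dir>/feeds/<host>_<timestamp>.rss."""
    stamp = now.strftime("%m_%d_%Y_%H_%M_%S")
    return Path(base_dir) / "feeds" / f"{urlparse(url).netloc}_{stamp}.rss"


async def fetch_url(url, save_to_file=False, headers=BROWSER_HEADERS):
    timeout = aiohttp.ClientTimeout(total=30)
    # Headers passed to the session are sent with every request it makes.
    async with aiohttp.ClientSession(
        timeout=timeout, trust_env=True, headers=headers
    ) as session:
        # ssl=False is kept from the question; it disables certificate checks.
        async with session.get(url, raise_for_status=True, ssl=False) as response:
            text = await response.text()
    if save_to_file:
        path = feed_path(url, Path.cwd(), datetime.now())
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(text, encoding="utf-8")
    return text
```

In a Jupyter notebook you can call it with `await fetch_url(url, save_to_file=True)`; in a plain script, wrap the call in `asyncio.run(...)`. If the server still answers 403, try sending the full header list.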