I am working with a relatively big dataframe, 1600000 lines. Each line is an entry of a building code violation. Data includes information from the agency issuing the ticket, building address, building zip code, owner's name, address and zip code, plus violation information, fines data, and some additional columns.

| ticket_id | … | owner_name | … | mailing_address_str_name | zip_code | … |

|---|---|---|---|---|---|---|

| 21771 | … | MORTGAGE, INC., WELLS FARGO HOME | … | STATE VIEW BLVD. | 29715 | … |

| 25534 | … | MORTGAGE INC., WELLS FARGO HOME | … | STATE VIEW BLVD. | 29715 | … |

| 37303 | … | BANK, W ELLS FARGO | … | STATEVIEW BLVD | 29715 | … |

| 39080 | … | MORTGAGE INC, WELLS FARGO | … | STATE VIEW BLVD | 29715 | … |

| 41398 | … | BANK, WELLS FARGO | … | STATE VIEW BLVD. | 29715 | … |

| 46387 | … | REGISTRATION SYSTEM INC, MORTGAGE ELECTRONIC | … | STATE VIEW | 29715 | … |

| 47099 | … | ASSOCIATION, US BANK NATIONAL | … | STATE VIEW BLVD. | 29715 | … |

| 47446 | … | ELECTRONIC REGISTRATION SYSTEM, MORTGAGE | … | STATE VIEW BLVD | 29715 | … |

| 170222 | … | GROUP LLC, INTERSTATE INVESTMENT | … | P.O. BOX 264 | 29054 | … |

| 177660 | … | HOLDINGS LLC, PARAMOUNT LAND | … | HAMPTON ST | 29054 | … |

| 178771 | … | HOLDINGS LLC, PARAMOUNT LAND | … | HAMPTON ST | 29054 | … |

| 181349 | … | GROUP LLC, INTERSTATE INVESTMENT | … | HAMPTON | 29054 | … |

| 181288 | … | GROUP LLC, INTERSTATE INVESTMENT | … | HAMPTON ST | 29054 | … |

| 181597 | … | PARAMOUNT LAND HOLDING LLC, . | … | PO BOX 264 | 29054 | … |

| 21157 | … | I B PROPERTY HOLDINGS, LLC, . | … | PONCE DeLEON BLVD. | 33146 | … |

| 35992 | … | PROPERTY TRUST, BAYVIEW FINANCIAL | … | PONCE DE LEON BLVD. 4TH FLR | 33146 | … |

| 36153 | … | PROPERTY TRUST, BAYVIEW FINANCIAL | … | PONCE DE LEON BLVD | 33146 | … |

| 46970 | … | TRUST ADELWARE TRUST, BAYVIEW FINANCIAL PROPERTY | … | PONCE DE LEON BLVD. 4TH FLR | 33146 | … |

| 53884 | … | IB PROPERTY HOLDINGS, LLC, .4425 | … | PONCE DeLEON BLVD. | 33146 | … |

| 61911 | … | LLC, IB PROPERTY HOLDING | … | PONCE DE LEON BLVD. | 33146 | … |

The column I want to analyze is owner's name. It has a lot of variations for the same name. I do not have the correct spelling, and I don't need it. I need to figure out how many tickets have been issued to a given owner.

The code I wrote works well but takes AGES (3 hrs to clean up the dataframe).

First thing I separate owners addresses by zip codes (there are some 3000 zip codes), assuming zip code is much less prone to error than "owners name". I use:

groups = trandf01.groupby('zip_code', sort=False)

And I get a nice set of 3000 zip codes with something between 1 and 7000 tickets per code. For each group I then proceed to scan it row by row with a basic algorithm. I do a double loop:

import numpy as np

from fuzzywuzzy import fuzz

trandf01 = pd.read_csv('data.csv')

groups = trandf01.groupby('zip_code', sort=False)

keys = groups.groups.keys()

df2 = pd.DataFrame()

for k in keys:

df=groups.get_group(k)

if len(df.index)>2:

df.reset_index(inplace=True)

for i in range(len(df.index)):

aa=df.iat[i,4]

for j in range(i 1,len(df.index)):

bb=df.iat[j,4]

ratio=fuzz.token_set_ratio(aa,bb)

if ratio > 74:

df.iat[j,4]=aa

df2=pd.concat([df2,df])

df2.to_excel("TextCorrectedNEW.xlsx")

Each name is compared with the names that follow it. If I get a match ( fuzz.token_set_ratio(aa,bb) > 74), I replace the new one with the first one. While it may not give an optimal solution (best "matcher" overall), it works quite well, but slow. Speed is n^2 with n the number of entries for each zip code.

There is some other way that is faster?

Thank you.

CodePudding user response:

The main performance challenge is that you have to compare every record with every other record in each zip code group, which is very inefficient no matter how you implement it.

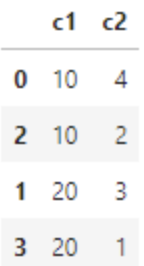

My preference would be to decouple the records by using a hashing algorithm that generates the same hash code for similar strings. I chose

After alignment