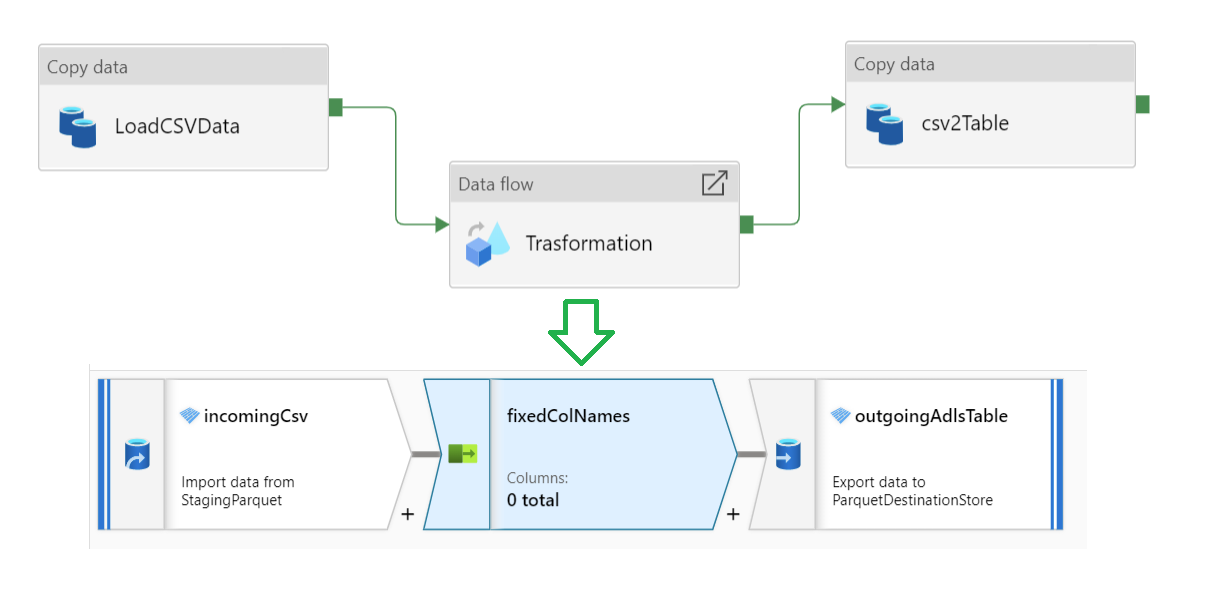

I am building a data pipeline in Azure Synapse. I want to copy a 500 GB CSV file from a Blob container into an Azure Data Lake Storage Gen2 table. Before loading it into the table, I want to transform the data with a Data Flow activity, for example renaming some columns and applying other transformations.

- Is it possible to copy the data and apply the transformations implicitly, without a staging Parquet store?

- If yes, how do I apply the transformations implicitly? For example, how do I remove dashes ("-") from all column names?

CodePudding user response:

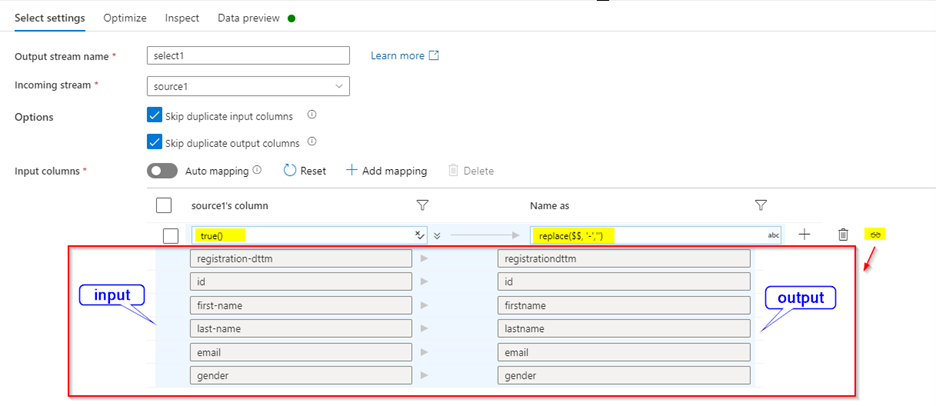

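You can use rule-based mapping in the Select transformation to remove the hyphens from all the column names. A matching condition of true() matches every column, and $$ in the output expression stands for the name of each matched column.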

Matching condition: `true()`

Output column name expression: `replace($$, '-', '')`
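To make the effect of this rule concrete, here is a minimal plain-Python sketch of the same logic. It is only an illustration and not Synapse code: the function `apply_rule_based_mapping` and the sample column names are hypothetical, and leaving non-matching columns unchanged is an assumption of the sketch (with a condition that is always true, every column matches anyway).

```python
def apply_rule_based_mapping(columns, matches, rename):
    """Illustrative stand-in for a rule-based mapping.

    columns: list of input column names
    matches: function playing the role of the matching condition
    rename:  function playing the role of the output name expression,
             receiving the matched column name (the role of $$)
    """
    # Assumption of this sketch: columns that do not match keep their name.
    return {name: (rename(name) if matches(name) else name) for name in columns}


# Hypothetical column names containing hyphens.
columns = ["order-id", "customer-name", "total"]

mapping = apply_rule_based_mapping(
    columns,
    matches=lambda name: True,                  # mirrors true()
    rename=lambda name: name.replace("-", ""),  # mirrors replace($$, '-', '')
)

renamed = list(mapping.values())
print(renamed)  # ['orderid', 'customername', 'total']
```

One thing worth checking on real data: if two columns differ only by hyphens (say `a-b` and `ab`), stripping the hyphens would give them the same name.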

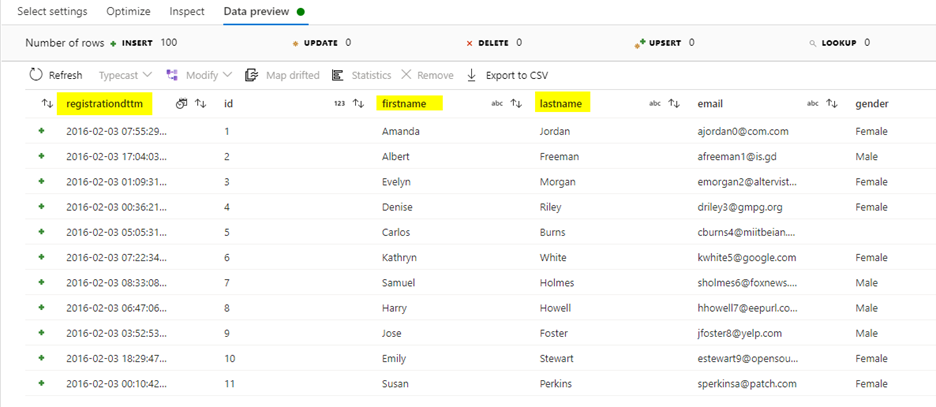

Select output: