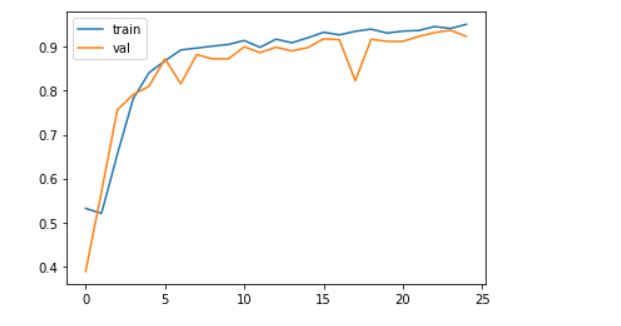

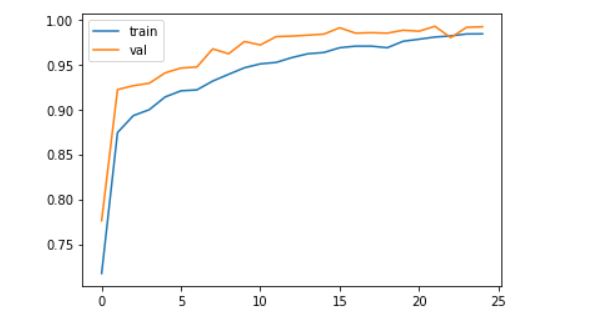

Which of these two plots shows the better training vs. validation behavior?

Both models use the same Keras Sequential layers, but the second one was trained on more samples, i.e. on an augmented dataset.

I'm a little confused by the zigzags in the first plot; otherwise I think it is better than the second. The second plot has no zigzags, but its validation accuracy tends to be a little higher than the training accuracy. Is that overfitting, or is it acceptable?
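One way to read such curves more objectively than by eye is to compute the per-epoch gap between training and validation accuracy, and to smooth the zigzags with a moving average. The sketch below assumes the dictionary Keras returns in `history.history`, with the keys `accuracy` and `val_accuracy` (the names recent TensorFlow 2 versions use when the model is compiled with `metrics=['accuracy']`). The helper names are illustrative, and the numbers are made-up placeholders, not the values from the plots:

```python
def moving_average(values, window=3):
    """Trailing moving average; early epochs average whatever points exist so far."""
    smoothed = []
    for i in range(len(values)):
        start = max(0, i - window + 1)
        chunk = values[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def generalization_gap(history):
    """Per-epoch training accuracy minus validation accuracy.

    A large positive gap that keeps growing suggests overfitting; a small or
    negative gap (validation slightly above training) generally does not.
    """
    return [t - v for t, v in zip(history["accuracy"], history["val_accuracy"])]


# Hypothetical values in the format of Keras' History.history
history = {
    "accuracy":     [0.70, 0.78, 0.82, 0.85, 0.87],
    "val_accuracy": [0.74, 0.80, 0.79, 0.86, 0.84],
}

gap = generalization_gap(history)
smoothed_val = moving_average(history["val_accuracy"], window=3)
print("train - val per epoch:", [round(g, 3) for g in gap])
print("smoothed val accuracy:", [round(v, 3) for v in smoothed_val])
```

Plotting `smoothed_val` instead of the raw values makes the trend easier to compare between the two runs, while `gap` shows directly whether training accuracy is pulling away from validation accuracy.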

It is an image classification model; the first model's dataset has 5,170 samples and the second's has 9,743.

The layers used to build the model:

```python
import tensorflow as tf

model = tf.keras.models.Sequential([
    # Feature extractor: three Conv2D + 2x2 max-pooling blocks
    tf.keras.layers.Conv2D(128, (3, 3), activation='relu', input_shape=(150, 150, 3)),
    tf.keras.layers.MaxPool2D(2, 2),
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPool2D(2, 2),
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),
    tf.keras.layers.MaxPool2D(2, 2),
    # Classifier head, regularized with dropout
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(512, activation='relu'),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dropout(0.25),
    # Single sigmoid unit: binary classification output
    tf.keras.layers.Dense(1, activation='sigmoid'),
])
```
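To see where this model's capacity sits, the output sizes and parameter counts of the layers above can be worked out by hand. The sketch below assumes the Keras defaults these layers use (`padding='valid'` and stride 1 for `Conv2D`; `MaxPool2D(2, 2)` meaning a 2x2 pool with stride 2). The function names are hypothetical helpers; `model.summary()` reports the same counts for the real model:

```python
def conv_out(size, kernel=3):
    # 'valid' padding, stride 1: each spatial dimension shrinks by kernel - 1
    return size - kernel + 1


def pool_out(size, pool=2):
    # max pooling with stride equal to the pool size, 'valid' padding
    return (size - pool) // pool + 1


def layer_stats(input_size=150, in_channels=3,
                conv_filters=(128, 64, 32), dense_units=(512, 128, 1)):
    """Return the Flatten output length and per-layer trainable parameter counts."""
    size, channels = input_size, in_channels
    params = []
    for filters in conv_filters:
        # one 3x3 kernel per (input channel, filter) pair, plus one bias per filter
        params.append(3 * 3 * channels * filters + filters)
        size = pool_out(conv_out(size))
        channels = filters
    flat = size * size * channels  # length of the Flatten output
    features = flat
    for units in dense_units:
        # fully connected weights plus one bias per unit
        params.append(features * units + units)
        features = units
    return flat, params


flat, params = layer_stats()
print("Flatten length:", flat)
print("Per-layer params:", params)
print("Total:", sum(params))
print("Share in first Dense layer: %.1f%%" % (100 * params[3] / sum(params)))
```

With these settings, about 4.7 million of the roughly 4.9 million trainable parameters sit in the first `Dense(512)` layer, because the Flatten output is 17 x 17 x 32 = 9,248 values long. Adding one more conv/max-pool block (for example `layer_stats(conv_filters=(128, 64, 32, 32))`) shrinks that Flatten output to 7 x 7 x 32 = 1,568 and the first Dense layer accordingly.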

Can the model be improved?

Answer:

From the graphs, the second one, where you trained on more samples, is better. With more samples, the model is trained on a much wider distribution of images, so at validation time it has a better chance of classifying an image correctly. You also have a lot of dropout in your model. This is good for preventing overfitting, but because dropout is active only during training, it lowers the training accuracy relative to the validation accuracy; validation accuracy slightly above training accuracy is therefore expected and does not indicate overfitting. Your model seems to be doing well.

It might improve if you add additional convolution/max-pooling layers. The alternative, of course, is to use transfer learning; I would recommend EfficientNetB3. I also recommend using an adjustable learning rate. The Keras callback ReduceLROnPlateau works well for that purpose. Documentation is here. The code below shows my recommended settings.
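The reason dropout can push validation accuracy above training accuracy is that Keras applies `Dropout` only while training; during validation the layer passes its input through unchanged. The NumPy sketch below mimics that behavior, including the scaling of the surviving units by `1 / (1 - rate)` that Keras applies during training. The `dropout` function and the random seed are illustrative; this is not Keras' own code:

```python
import numpy as np


def dropout(x, rate, training, rng):
    """Inverted dropout, mimicking what Keras' Dropout layer does."""
    if not training:
        # Validation / inference: identity, every unit is kept
        return x
    keep = rng.random(x.shape) >= rate  # each unit survives with probability 1 - rate
    # Scale the survivors so the expected activation matches inference
    return np.where(keep, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(0)
activations = np.ones((1, 10000))

train_out = dropout(activations, rate=0.5, training=True, rng=rng)
val_out = dropout(activations, rate=0.5, training=False, rng=rng)

print("fraction zeroed during training:", np.mean(train_out == 0))
print("mean activation during training:", train_out.mean())
print("mean activation during validation:", val_out.mean())
```

During training, each step therefore runs a randomly thinned network (about half of the `Dense(512)` units and a quarter of the `Dense(128)` units dropped), and the training accuracy Keras logs is measured on that thinned network, while validation uses the full one.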

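```python
import tensorflow as tf

# Halve the learning rate when val_loss has not improved for 2 consecutive epochs
rlronp = tf.keras.callbacks.ReduceLROnPlateau(
    monitor='val_loss',
    factor=0.5,
    patience=2,
    verbose=1,
    mode='auto',
)
```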

Then pass it to `model.fit` with `callbacks=[rlronp]`.
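To see what `factor=0.5` and `patience=2` do, here is a simplified pure-Python simulation of the schedule: whenever `val_loss` fails to improve on its best value for `patience` consecutive epochs, the learning rate is multiplied by `factor`. This is a sketch, not Keras' implementation. It ignores the callback's `min_delta`, `cooldown` and `min_lr` arguments, and the starting learning rate and loss values are made up:

```python
def simulate_reduce_lr(val_losses, initial_lr=1e-3, factor=0.5, patience=2):
    """Return the learning rate in effect after each epoch (simplified)."""
    lr = initial_lr
    best = float("inf")
    wait = 0  # epochs since the last improvement
    lrs = []
    for loss in val_losses:
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                lr *= factor  # plateau detected: shrink the step size
                wait = 0
        lrs.append(lr)
    return lrs


# Hypothetical validation losses: steady improvement, then two plateaus
val_losses = [0.60, 0.50, 0.45, 0.46, 0.47, 0.44, 0.44, 0.45, 0.45]
for epoch, lr in enumerate(simulate_reduce_lr(val_losses), start=1):
    print(f"epoch {epoch}: lr = {lr:g}")
```

In this made-up run, the rate drops from 1e-3 to 5e-4 after epochs 4 and 5 fail to beat the loss of epoch 3, and drops again to 2.5e-4 after epochs 7 and 8 fail to beat epoch 6.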