Looking into the code of the L2 regularizer, I understand that it imposes a penalty on anything that is non-zero. In other words, the ideal tensor that avoids this penalty would contain only zeros. Do I understand this concept correctly?

Answer:

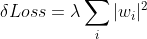

An L2 regularizer, when specified for a layer, adds the sum of the squares of that layer's weights to the loss function, scaled by the regularization parameter λ:

$$L_{\text{total}} = L_{\text{data}} + \lambda \sum_i w_i^2$$

where λ is set when you initialize the regularizer. As the formula shows, with positive λ this extra term reaches its minimum of 0 only when all weights are 0. So the short answer is yes, you are right. But this is only one extra term in the loss, and it is the total loss that gets optimized: the data term usually pulls the weights away from zero, so in practice the regularizer shrinks the weights toward zero rather than forcing them to be exactly zero.
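To make the trade-off concrete, here is a minimal pure-Python sketch. It assumes the Keras convention for the penalty, `l2 * sum(square(x))` with no 1/2 factor. The single-weight data loss `(w - target)**2`, the `target` value, and the helper names are hypothetical, chosen only for illustration. The penalty alone is zero only for an all-zero tensor, but gradient descent on the *total* loss settles at `target / (1 + lam)`, which is smaller than `target` yet not zero.

```python
def l2_penalty(weights, lam):
    """L2 penalty in the Keras style: lam * sum(w**2), no 1/2 factor."""
    return lam * sum(w * w for w in weights)


def total_loss(w, lam, target=3.0):
    """Hypothetical data loss (w - target)**2 plus the L2 penalty on w."""
    return (w - target) ** 2 + l2_penalty([w], lam)


def minimize(lam, target=3.0, lr=0.1, steps=500):
    """Plain gradient descent on total_loss, starting from w = 0."""
    w = 0.0
    for _ in range(steps):
        # d/dw [(w - target)**2 + lam * w**2] = 2*(w - target) + 2*lam*w
        grad = 2 * (w - target) + 2 * lam * w
        w -= lr * grad
    return w


# The penalty by itself is zero only when every weight is zero.
print(l2_penalty([0.0, 0.0, 0.0], 0.01))   # 0.0
print(l2_penalty([0.5, -1.0, 2.0], 0.01))  # approximately 0.0525

# The optimum of the total loss moves toward zero as lam grows, but stays non-zero.
for lam in (0.0, 0.5, 1.0):
    print(lam, round(minimize(lam), 4))    # analytic optimum: target / (1 + lam)
```

Setting the derivative of the total loss to zero gives `w* = target / (1 + lam)`, which is what the loop converges to: a larger λ pulls the weight harder toward zero, while the data term keeps it from reaching zero.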