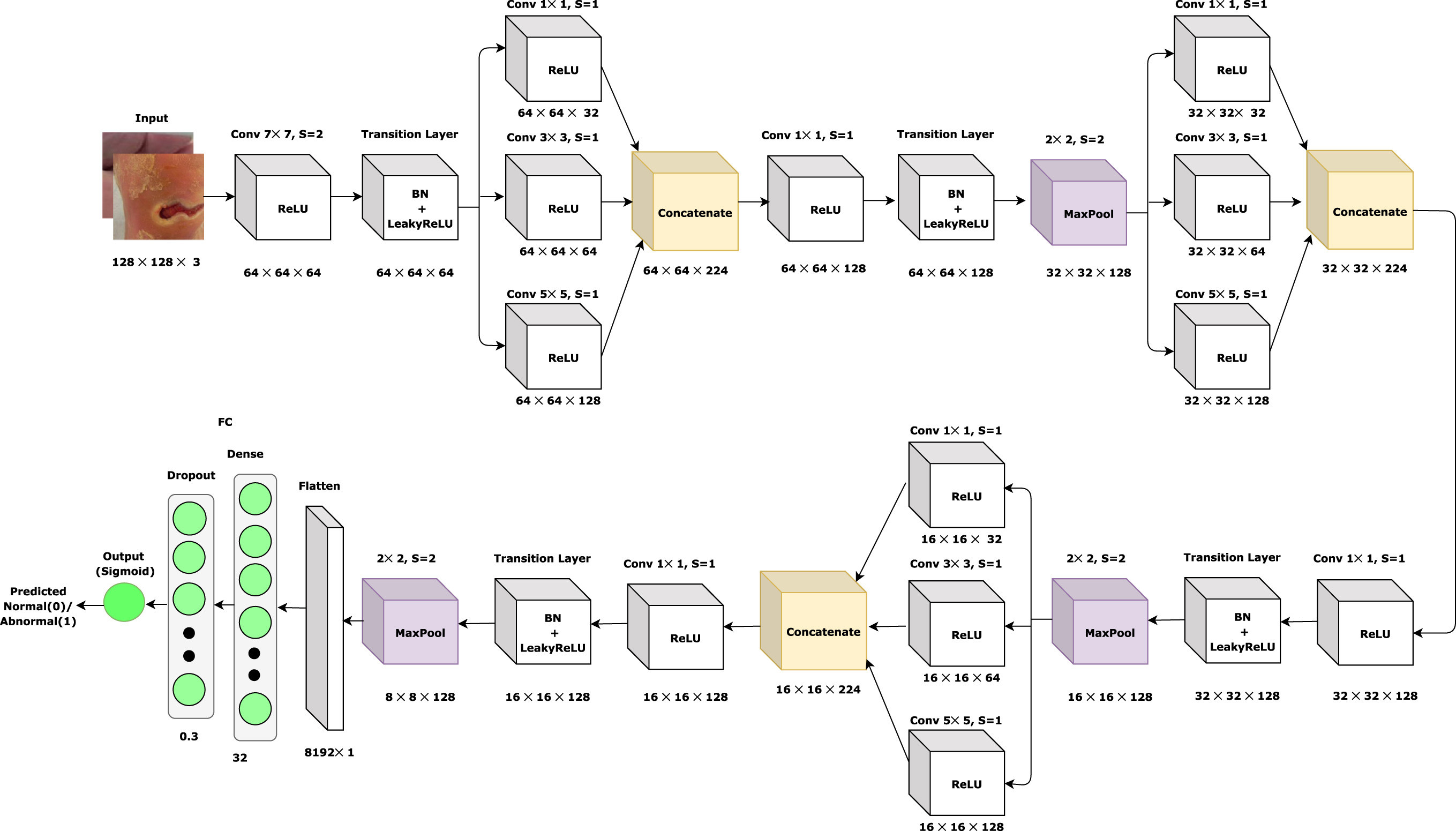

I am trying to build a neural network from a flow diagram. My analysis requires translating this network into code. Could you tell me if I'm doing anything wrong? Here is the diagram. The author used binary classification, but I'm doing multi-class classification, so ignore that part. I'm fairly new to building CNNs, and this is all I could put together from different sources on the internet.

import tensorflow as tf

from tensorflow.keras.layers import Input, Conv2D, Concatenate,Dense,Flatten

from tensorflow.keras.models import Sequential

from keras.layers import BatchNormalization

model_1=Sequential()

#First Stacked

model_1.add(Conv2D(filters=64,kernel_size=7,stride=(2,2),activation='relu',input_shape=(128,128,1)))

model_1.add(BatchNormalization())

model_1.add(LeakyReLU(alpha=0.1))

layer_1=Conv2D(filters=32,kernel_size=3,stride=(1,1),activation='relu')(model_1)

layer_2=Conv2D(filters=64,kernel_size=5,stride=(1,1),activation='relu')(model_1)

layer_3=Conv2D(filters=128,kernel_size=5,stride=(1,1),activation='relu')(model_1)

concatenate_1 = keras.layers.concatenate([layer_1, layer_2,layer_3], axis=1)

#Second Stacked

concatenate_1.add(Conv2D(filters=64,kernel_size=1,stride=(1,1),activation='relu')

concatenate_1.add(BatchNormalization())

concatenate_1.add(LeakyReLU(alpha=0.1))

concatenate_1.add(MaxPooling2D((2, 2), strides=(2, 2), padding='same'))

layer_1=Conv2D(filters=32,kernel_size=1,stride=(1,1),activation='relu')(concatenate_1)

layer_2=Conv2D(filters=64,kernel_size=3,stride=(1,1),activation='relu')(concatenate_1)

layer_3=Conv2D(filters=128,kernel_size=5,stride=(1,1),activation='relu')(concatenate_1)

concatenate_2 = keras.layers.concatenate([layer_1, layer_2,layer_3], axis=1)

#Third Stacked

concatenate_2.add(Conv2D(filters=64,kernel_size=1,stride=(1,1),activation='relu')

concatenate_2.add(BatchNormalization())

concatenate_2.add(LeakyReLU(alpha=0.1))

concatenate_2.add(MaxPooling2D((2, 2), strides=(2, 2), padding='same'))

layer_1=Conv2D(filters=32,kernel_size=1,stride=(1,1),activation='relu')(concatenate_2)

layer_2=Conv2D(filters=64,kernel_size=3,stride=(1,1),activation='relu')(concatenate_2)

layer_3=Conv2D(filters=128,kernel_size=5,stride=(1,1),activation='relu')(concatenate_2)

concatenate_3 = keras.layers.concatenate([layer_1, layer_2,layer_3], axis=1)

#Final

concatenate_3.add(Conv2D(filters=64,kernel_size=1,stride=(1,1),activation='relu')

concatenate_3.add(BatchNormalization())

concatenate_3.add(LeakyReLU(alpha=0.1))

concatenate_3.add(MaxPooling2D((2, 2), strides=(2, 2), padding='same'))

concatenate_3=Flatten()(concatenate_3)

model_dfu_spnet=Dense(200, activation='relu')(concatenate_3)

mode_dfu_spnet.add(Dropout(0.3,activation='softmax'))

CodePudding user response:

Concatenate is a layer class: you create it with its arguments and then call it on a list of tensors, as in Concatenate(**kwargs)([tensors]).

keras.layers.concatenate([layer_1, layer_2,layer_3], axis=1)

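is also valid as a function call, but axis=1 is the height axis of a (batch, height, width, channels) tensor, so the branches would not be joined along their channels. With the layer class (note the capitalization) and the channel axis it becomes

keras.layers.Concatenate(axis=-1)([layer_1, layer_2, layer_3])

# axis=-1 is the default, so you can just do

# keras.layers.Concatenate()([layer_1, layer_2, layer_3])

Then do the same for the other concatenations. The parallel Conv2D layers also need padding='same' so that their height and width match; otherwise the different kernel sizes give different output sizes and the concatenation fails.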
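The effect of padding on the branch sizes can be checked with a little arithmetic. This is a minimal sketch using the standard output-size formulas for 'valid' and 'same' padding; the helper name conv_out_size is made up for illustration, and the 1, 3 and 5 kernel sizes are taken from the later blocks in the question.

```python
import math


def conv_out_size(n, kernel, stride=1, padding="valid"):
    """Output length along one spatial axis of a 2D convolution."""
    if padding == "same":
        # "same" pads the input so only the stride changes the size
        return math.ceil(n / stride)
    # "valid": no padding, the kernel must fit entirely inside the input
    return (n - kernel) // stride + 1


# 7x7, stride-2 first convolution on a 128-pixel input, default 'valid' padding
stem = conv_out_size(128, kernel=7, stride=2)

# Three parallel branches with kernel sizes 1, 3 and 5
valid_sizes = [conv_out_size(stem, k) for k in (1, 3, 5)]
same_sizes = [conv_out_size(stem, k, padding="same") for k in (1, 3, 5)]

print(stem)         # 61
print(valid_sizes)  # [61, 59, 57] -> sizes differ, cannot be concatenated on channels
print(same_sizes)   # [61, 61, 61] -> sizes match
```

With padding='same' all three branches are 61x61, so concatenating on the last axis gives 32 + 64 + 128 = 224 channels.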

I'm not sure what you want to do with this:

model_dfu_spnet=Dense(200, activation='relu')(concatenate_3)

Following the picture, though, that layer should have 32 neurons (which seems kind of small to me, but I'm not sure):

model_dfu_spnet=Dense(32, activation='relu')(concatenate_3)

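Dropout doesn't take an activation argument, so this line won't work:

mode_dfu_spnet.add(Dropout(0.3,activation='softmax'))

You probably want the softmax on another Dense layer, with the number of classes as its number of neurons. Since model_dfu_spnet is a tensor rather than a Sequential model, call the layers on it instead of using .add():

model_dfu_spnet = Dropout(0.3)(model_dfu_spnet)

model_dfu_spnet = Dense(num_of_classes, activation="softmax", name="visualized_layer")(model_dfu_spnet)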

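I'm not used to mixing Sequential models with Concatenate; I usually use the Functional API. A Sequential model is a plain stack of layers and cannot branch, so for parallel convolutions like these you need the Functional API for the whole network: start from an Input, call each layer on the previous tensor, and wrap the result in a Model.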
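Putting the points above together, here is a rough Functional API sketch of the network as the posted code describes it. I can't verify it against the diagram, so treat the details as assumptions: padding="same" everywhere, no ReLU on the convolutions that are followed by BatchNormalization and LeakyReLU, a 32-unit Dense layer, and 4 classes as a placeholder. The helper names conv_bn_act, parallel_block and build_model are made up for this example.

```python
import tensorflow as tf
from tensorflow.keras import layers


def conv_bn_act(x, filters, kernel_size, strides=1):
    """Conv2D -> BatchNormalization -> LeakyReLU (slope 0.1)."""
    x = layers.Conv2D(filters, kernel_size, strides=strides, padding="same")(x)
    x = layers.BatchNormalization()(x)
    return layers.LeakyReLU(0.1)(x)


def parallel_block(x, kernel_sizes=(1, 3, 5)):
    """Three parallel convolutions joined along the channel axis."""
    branches = [
        layers.Conv2D(filters, k, padding="same", activation="relu")(x)
        for filters, k in zip((32, 64, 128), kernel_sizes)
    ]
    return layers.Concatenate()(branches)  # axis=-1 (channels) is the default


def build_model(num_of_classes, input_shape=(128, 128, 1)):
    inputs = tf.keras.Input(shape=input_shape)

    # First stacked block (kernel sizes 3, 5, 5 as in the posted code)
    x = conv_bn_act(inputs, 64, 7, strides=2)
    x = parallel_block(x, kernel_sizes=(3, 5, 5))

    # Second and third stacked blocks
    for _ in range(2):
        x = conv_bn_act(x, 64, 1)
        x = layers.MaxPooling2D(2, strides=2, padding="same")(x)
        x = parallel_block(x)

    # Final block and classification head
    x = conv_bn_act(x, 64, 1)
    x = layers.MaxPooling2D(2, strides=2, padding="same")(x)
    x = layers.Flatten()(x)
    x = layers.Dense(32, activation="relu")(x)
    x = layers.Dropout(0.3)(x)
    outputs = layers.Dense(
        num_of_classes, activation="softmax", name="visualized_layer"
    )(x)
    return tf.keras.Model(inputs, outputs)


model = build_model(num_of_classes=4)  # 4 is a placeholder class count
print(model.output_shape)
```

Calling model.summary() afterwards is a quick way to compare each layer's output shape with the diagram.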