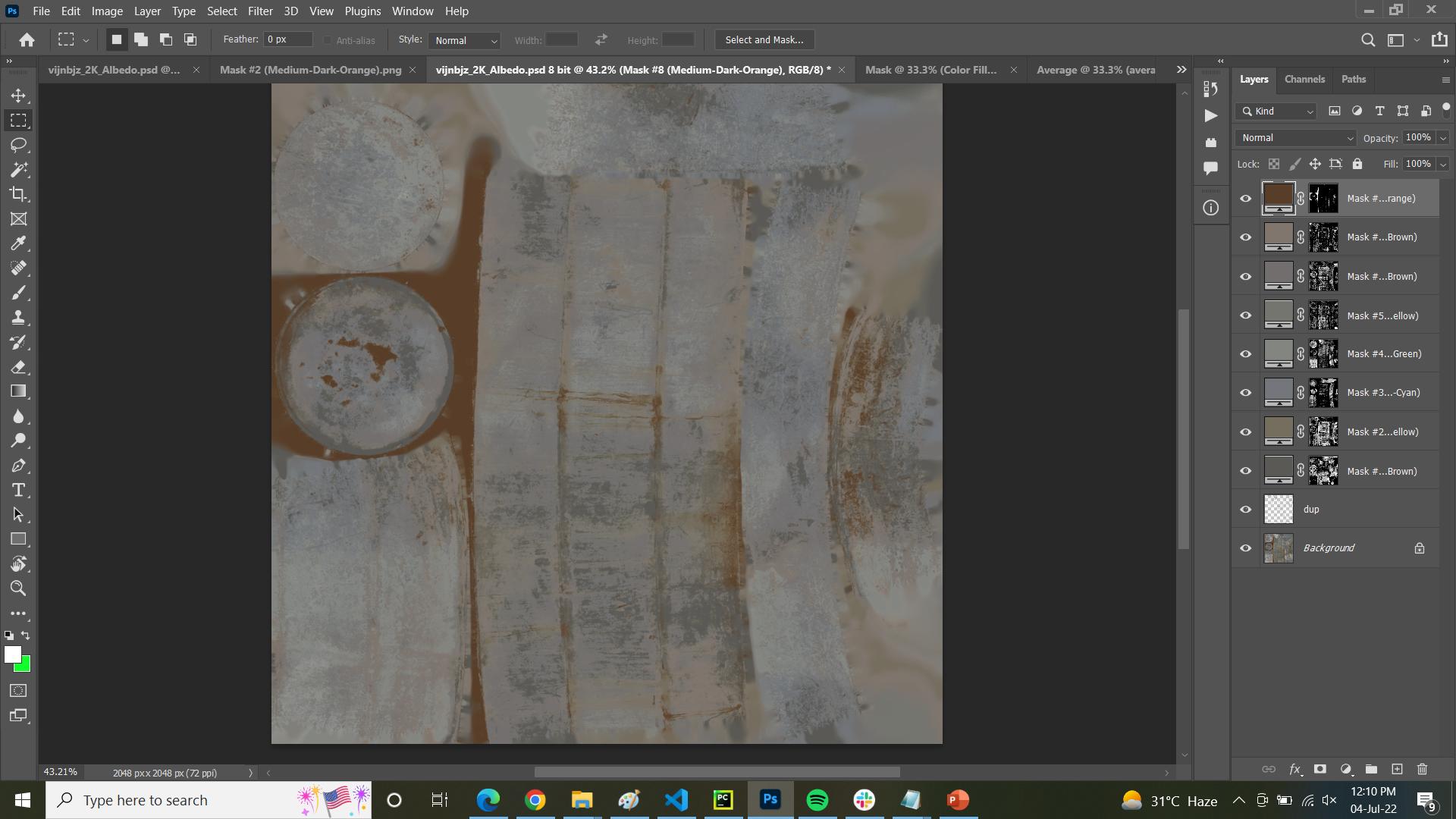

I have all these layers in Photoshop:

I also have these images in Python. In Photoshop, I can get the average color of the entire document from the RGB channel of the document. In Python, I planned to blend the images together using cv2.addWeighted and then use the histograms of the three channels to get the average color. However, my average color does not match the one obtained from Photoshop.

Here is my code so far:

    import cv2
    import numpy as np
    from PIL import Image

    im1 = cv2.imread('a1.png')
    im2 = cv2.imread('a2.png')
    im3 = cv2.imread('a3.png')

    alpha = 0.5
    beta = (1.0 - alpha)

    blended = cv2.addWeighted(im1, alpha, im2, beta, 0.0)
    blended = cv2.addWeighted(blended, alpha, im3, beta, 0.0)
    # cv2.imwrite('blended.png', blended)

    averageColor = []
    chans = cv2.split(blended)
    colors = ("b", "g", "r")

    for (chan, color) in zip(chans, colors):
        # create a histogram for the current channel and plot it
        hist = cv2.calcHist([chan], [0], None, [256], [0, 256])
        for i in range(len(hist)):
            if (hist[i]) != 0:
                averageColor.append(i)
                break

    print("Average color: ", averageColor)

    ah, aw = blended.shape[:2]  # image height and width
    base_color = np.full((ah, aw, 3), averageColor, dtype=np.uint8)
    base_color_layer = Image.fromarray(base_color)
    base_color_layer.save("Base Color.png")

im3 is the "dup" layer containing only one color (129, 127, 121). im2 is all the Mask layers summed together using np.add. im1 is the Background image.

Any thoughts?

CodePudding user response:

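    import cv2
    import numpy as np

    # given
    im1 = cv2.imread('a1.png')
    im2 = cv2.imread('a2.png')
    im3 = cv2.imread('a3.png')

    # calculations
    averageIm = np.mean([im1, im2, im3], axis=0)  # per-pixel mean across the three images
    averageColor = averageIm.mean(axis=(0,1))  # mean over rows and columns, one value per channel (BGR order)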

This assumes the images are color images without an alpha channel, and that you want each image to have equal weight.

This is not equivalent to stacking the layers and giving each layer the same transparency. A stack like that is an exponentially (geometrically) weighted average, i.e. alpha * im1 + (1-alpha) * (alpha * im2 + (1-alpha) * im3), which gives an image successively less weight the lower it is in the stack. With alpha = 0.5 the weights go like [0.5, 0.25, 0.25] for three layers, or [0.5, 0.25, 0.125, 0.0625, ...] for a longer stack.
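To make the weighting concrete, here is a minimal sketch of the nested blend for a single channel value per layer. The helper names stack_weights and nested_blend are made up for illustration, and the sketch assumes every layer uses the same alpha and normal blending, which may not match how the Photoshop document is set up.

```python
def stack_weights(n_layers, alpha=0.5):
    """Effective weight of each layer, top to bottom, in a nested blend."""
    weights = []
    remaining = 1.0  # share of the result not yet claimed by the layers above
    for _ in range(n_layers - 1):
        weights.append(alpha * remaining)
        remaining *= 1.0 - alpha
    weights.append(remaining)  # the bottom layer takes whatever is left
    return weights


def nested_blend(layers, alpha=0.5):
    """Compute alpha*L0 + (1-alpha)*(alpha*L1 + (1-alpha)*(...)), top layer first."""
    result = layers[-1]
    for layer in reversed(layers[:-1]):
        result = alpha * layer + (1.0 - alpha) * result
    return result


# One hypothetical channel value per layer, top layer first.
values = [200.0, 100.0, 40.0]
weights = stack_weights(len(values))
weighted_sum = sum(w * v for w, v in zip(weights, values))

print(weights)
print(nested_blend(values), weighted_sum)
```

Both functions use only arithmetic, so nested_blend should also accept float NumPy arrays of equal shape in place of the scalar values.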

CodePudding user response:

Disclaimer: I would highly recommend doing this with the CUDA API (either in C or with the Python bindings), but since the question is about pure Python, see below.

I'm not going to add the cv2 reading code because I don't know the API, but let's assume you have images 1...n in a list called images, that each image is a 3D array of height x width x RGBA, and that all images are the same size.

    Image_t = list[list[list[int]]]

    def get_average_colour(images: list[Image_t]) -> Image_t:
        new_image: Image_t = []
        image_width = len(images[0][0])  # len of first row in first image
        image_height = len(images[0])  # number of rows
        colour_channel_size = len(images[0][0][0])
        for h in range(image_height):
            row = []
            for w in range(image_width):
                curr_pixel = [0] * colour_channel_size
                for img in images:
                    for chan, val in enumerate(img[h][w]):
                        curr_pixel[chan] += val  # accumulate the channel sum across images
                # This will average out your pixel values
                row.append([int(v / len(images)) for v in curr_pixel])
            new_image.append(row)
        return new_image
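For comparison, the same equal-weight, per-pixel average can be sketched with NumPy instead of nested loops. The function name get_average_colour_np is hypothetical, and the sketch assumes all images share one shape and hold non-negative 8-bit channel values.

```python
import numpy as np


def get_average_colour_np(images):
    """Per-pixel average of equally sized images, truncated to whole numbers."""
    # Stack into shape (n_images, height, width, channels); int64 avoids uint8 overflow.
    stack = np.stack([np.asarray(img, dtype=np.int64) for img in images])
    # Floor division matches int(v / n) for non-negative values.
    return (stack.sum(axis=0) // len(images)).astype(np.uint8)


# Two tiny 1x2 images with three channels each.
a = [[[0, 10, 255], [1, 2, 3]]]
b = [[[255, 20, 255], [2, 2, 4]]]
averaged = get_average_colour_np([a, b])
print(averaged.tolist())
```

Calling averaged.mean(axis=(0, 1)) on the result then gives one average value per channel for the whole image.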