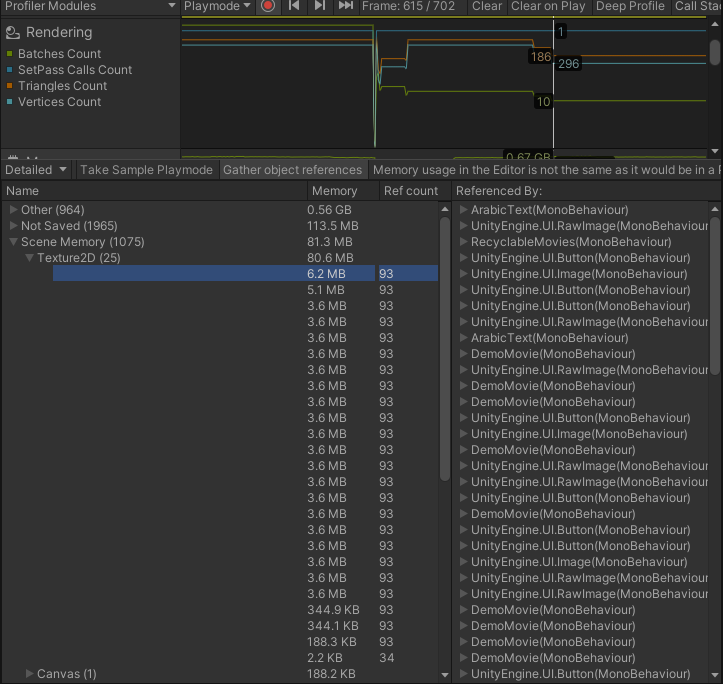

I wrote a script that downloads images from the web when a page is clicked. When I move to the second page, a second set of images is downloaded. The problem is that the images are relatively large, and when more than one is loaded they consume a lot of RAM. How can I save as much memory as possible by compressing the textures?

This is my code:

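    IEnumerator D_Image(string url_)
    {
        // Free the previous download, but never the shared placeholder textures.
        if (Icon.texture != null && Icon.texture != LoadT && Icon.texture != ErrorT)
            Destroy(Icon.texture);
        Icon.texture = LoadT;

        using (UnityWebRequest uwr = UnityWebRequestTexture.GetTexture(url_))
        {
            yield return uwr.SendWebRequest();

            if (uwr.result != UnityWebRequest.Result.Success)
            {
                Icon.texture = ErrorT;
            }
            else
            {
                Icon.texture = DownloadHandlerTexture.GetContent(uwr);
            }
            // No explicit uwr.Dispose() is needed: the using block disposes the request.
        }
    }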

CodePudding user response:

Try shrinking the images; it makes a big difference. You can use this code, which I found in the TextureOps add-on. It scales a texture down to a target width and height:

    // Needs: using System; using UnityEngine;
    // Object is UnityEngine.Object and Options is the TextureOps options struct.
    public static Texture2D Scale( Texture sourceTex, int targetWidth, int targetHeight, TextureFormat format = TextureFormat.RGBA32, Options options = new Options() )
    {
        if( sourceTex == null )
            throw new ArgumentException( "Parameter 'sourceTex' is null!" );

        Texture2D result = null;

        RenderTexture rt = RenderTexture.GetTemporary( targetWidth, targetHeight );
        RenderTexture activeRT = RenderTexture.active;

        try
        {
            // Draw the source into the smaller render target, then read it back.
            Graphics.Blit( sourceTex, rt );
            RenderTexture.active = rt;

            result = new Texture2D( targetWidth, targetHeight, format, options.generateMipmaps, options.linearColorSpace );
            result.ReadPixels( new Rect( 0, 0, targetWidth, targetHeight ), 0, 0, false );
            result.Apply( options.generateMipmaps, options.markNonReadable );
        }
        catch( Exception e )
        {
            Debug.LogException( e );

            Object.Destroy( result );
            result = null;
        }
        finally
        {
            // Always restore the previous render target and release the temporary one.
            RenderTexture.active = activeRT;
            RenderTexture.ReleaseTemporary( rt );
        }

        return result;
    }
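
As a rough sketch of how this could be wired into the download coroutine from the question: the version below shrinks each download and then calls `Texture2D.Compress`, without depending on TextureOps. An uncompressed RGBA32 texture costs 4 bytes per pixel, so a 2048x2048 image is about 16 MB, while a 512x512 one is about 1 MB before compression. The class name `IconDownloader`, the `maxSize` field, the `current` field, and the `Shrink` helper are my own assumptions, not part of the question or of TextureOps. The compressed format that `Compress` picks depends on the platform, so treat the savings as approximate.

```csharp
using System.Collections;
using UnityEngine;
using UnityEngine.Networking;
using UnityEngine.UI;

// Hypothetical component: downloads an image, shrinks it, compresses it.
public class IconDownloader : MonoBehaviour
{
    public RawImage Icon;       // same role as Icon in the question
    public Texture LoadT;       // placeholder shown while loading
    public Texture ErrorT;      // placeholder shown on failure
    public int maxSize = 512;   // assumed cap on the longest side, in pixels

    private Texture2D current;  // the only texture this script creates and destroys

    public IEnumerator D_Image(string url_)
    {
        // Free the previous download, never the shared placeholders.
        if (current != null) { Destroy(current); current = null; }
        Icon.texture = LoadT;

        using (UnityWebRequest uwr = UnityWebRequestTexture.GetTexture(url_))
        {
            yield return uwr.SendWebRequest();

            if (uwr.result != UnityWebRequest.Result.Success)
            {
                Icon.texture = ErrorT;
                yield break;
            }

            Texture2D full = DownloadHandlerTexture.GetContent(uwr);
            current = Shrink(full, maxSize);
            Destroy(full);          // the full-size copy is no longer needed
            Icon.texture = current;
        }
    }

    // Scales the texture so its longest side is at most maxSide, then compresses it.
    static Texture2D Shrink(Texture2D source, int maxSide)
    {
        // Keep the aspect ratio, never upscale, and round each side down to a
        // multiple of 4, which block-compressed formats generally expect.
        float scale = Mathf.Min(1f, (float)maxSide / Mathf.Max(source.width, source.height));
        int w = Mathf.Max(4, Mathf.FloorToInt(source.width * scale) / 4 * 4);
        int h = Mathf.Max(4, Mathf.FloorToInt(source.height * scale) / 4 * 4);

        RenderTexture rt = RenderTexture.GetTemporary(w, h);
        RenderTexture previous = RenderTexture.active;
        Graphics.Blit(source, rt);
        RenderTexture.active = rt;

        Texture2D result = new Texture2D(w, h, TextureFormat.RGBA32, false);
        result.ReadPixels(new Rect(0, 0, w, h), 0, 0, false);
        result.Compress(false);     // runtime compression, lower-quality/faster mode
        result.Apply(false, true);  // upload to the GPU and drop the CPU-side copy

        RenderTexture.active = previous;
        RenderTexture.ReleaseTemporary(rt);
        return result;
    }
}
```

You would start it the same way as before, for example `StartCoroutine(D_Image(url))`. Note that the full-size texture still exists briefly during the download, so peak memory is only reduced if the images are also made smaller on the server.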

CodePudding user response:

You can use Addressables for this. Addressables supports hosting content on a CDN, from which you can download your pre-built content. You can compress that content as well.

See "Remote content distribution" in the Addressables documentation.
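
A minimal sketch of what loading one image through Addressables might look like, assuming the Addressables package is installed and the textures were built into a remote group. The class name `AddressableIcon` and the `address` parameter are placeholders of mine; the address must match whatever you assigned to the asset in the Addressables Groups window.

```csharp
using System.Collections;
using UnityEngine;
using UnityEngine.AddressableAssets;
using UnityEngine.ResourceManagement.AsyncOperations;
using UnityEngine.UI;

// Hypothetical component: shows one Addressable texture at a time.
public class AddressableIcon : MonoBehaviour
{
    public RawImage Icon;

    private AsyncOperationHandle<Texture2D> handle;

    public IEnumerator Load(string address)
    {
        ReleaseCurrent();

        // Downloads the containing bundle if needed, then loads the texture.
        handle = Addressables.LoadAssetAsync<Texture2D>(address);
        yield return handle;

        if (handle.Status == AsyncOperationStatus.Succeeded)
            Icon.texture = handle.Result;
    }

    void OnDestroy()
    {
        ReleaseCurrent();
    }

    // Releasing the handle lets Addressables unload the texture
    // once nothing else references it.
    void ReleaseCurrent()
    {
        if (handle.IsValid())
        {
            Icon.texture = null;
            Addressables.Release(handle);
        }
    }
}
```

Because the textures are imported and compressed at build time, they arrive already in a GPU-friendly format instead of being decoded to uncompressed pixels at runtime.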