I am doing some performance testing on creating and editing arrays, and noticed some weird characteristics around arrays with about 13k-16k elements.

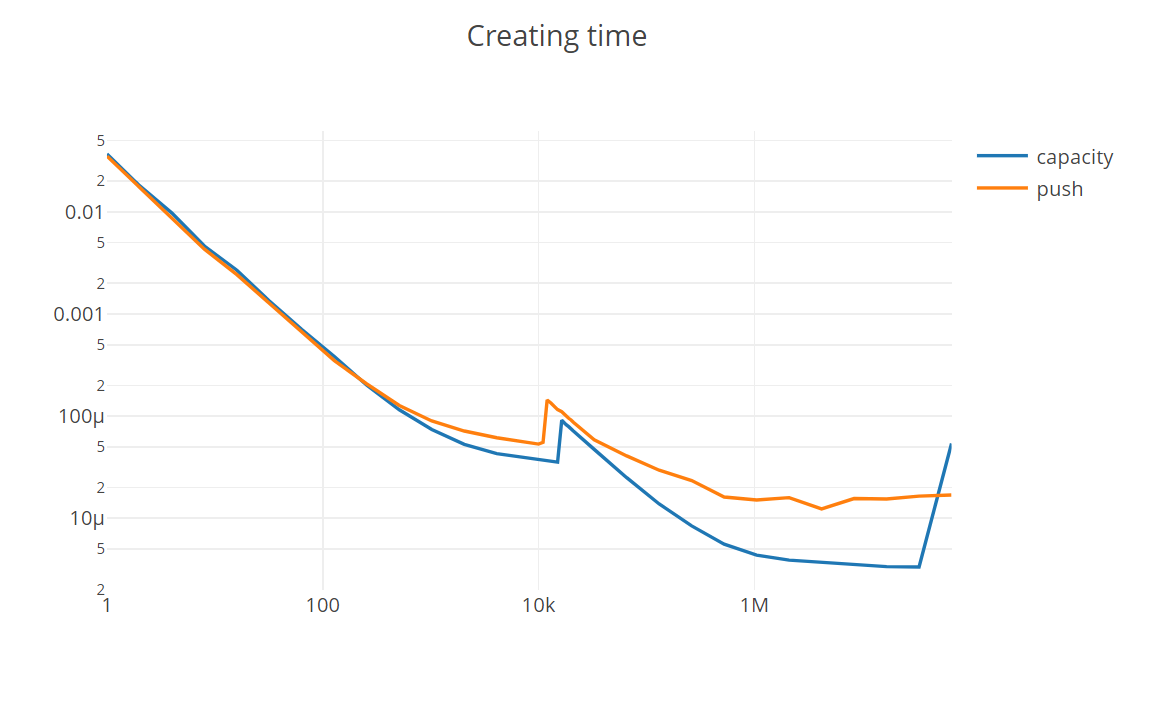

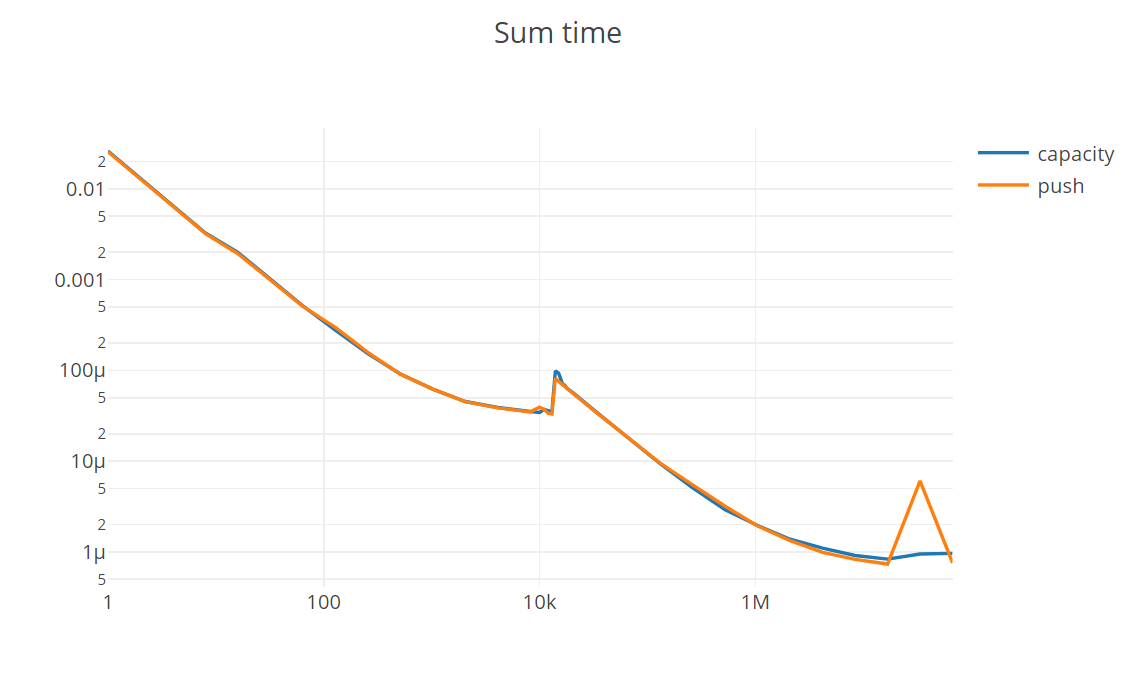

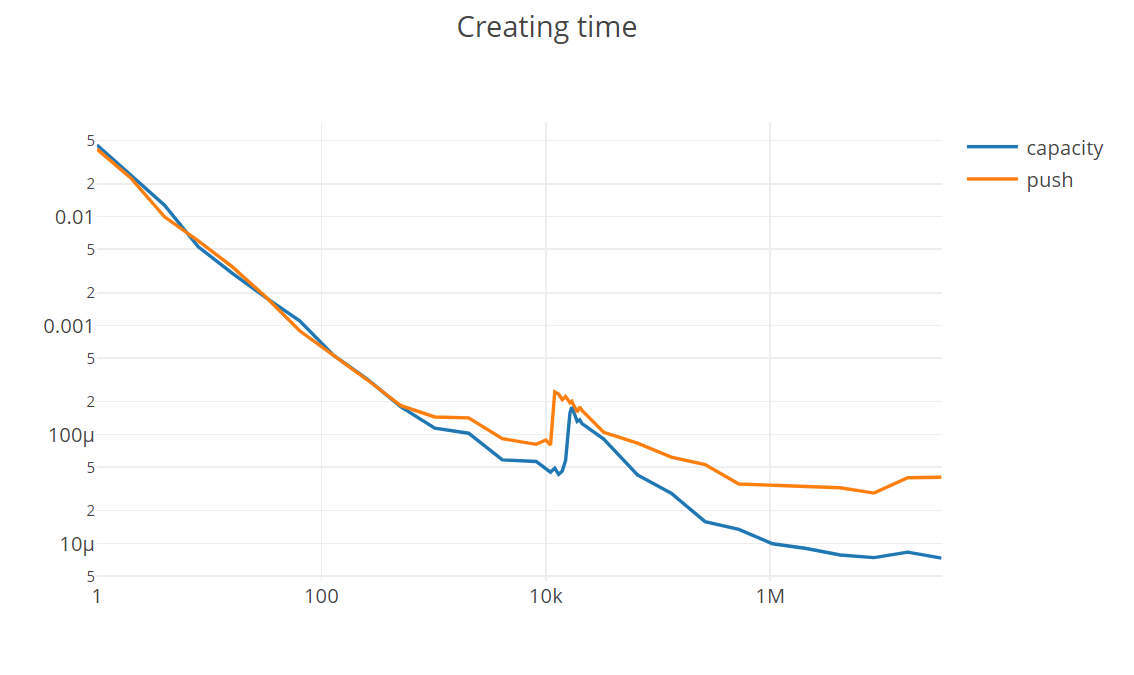

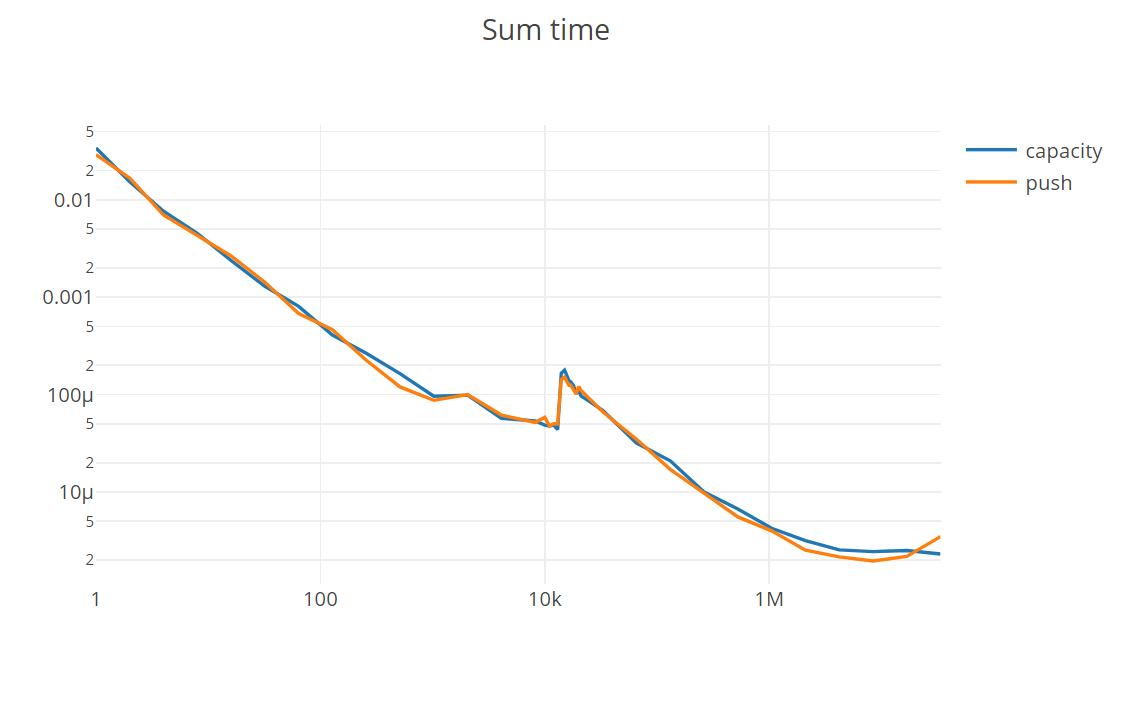

The graphs above show the time per element it takes to create an array and to read from it (in this case, summing the numbers in it). `capacity` and `push` refer to the way the array was created:

- capacity: `const arr = new Array(length)` and then `arr[i] = data`
- push: `const arr = [];` and then `arr.push(data)`
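
To make the two strategies and the read step concrete, here is a minimal sketch of what such a measurement could look like. The function names, the `runs` parameter, the tested lengths, and the use of the loop index as the stored data are assumptions for illustration; this is not the exact benchmark code behind the graphs.

```javascript
// "capacity": preallocate the length, then assign by index.
function createWithCapacity(length) {
  const arr = new Array(length);
  for (let i = 0; i < length; i++) {
    arr[i] = i; // the index stands in for `data` (assumption)
  }
  return arr;
}

// "push": start with an empty array and append each element.
function createWithPush(length) {
  const arr = [];
  for (let i = 0; i < length; i++) {
    arr.push(i);
  }
  return arr;
}

// Read step: visit every element once by summing the array.
function sum(arr) {
  let total = 0;
  for (let i = 0; i < arr.length; i++) {
    total += arr[i];
  }
  return total;
}

// Hypothetical helper: average creation time per element, in nanoseconds,
// over `runs` repetitions of `createFn(length)`.
function timePerElement(createFn, length, runs = 200) {
  const start = performance.now();
  for (let r = 0; r < runs; r++) {
    createFn(length);
  }
  const elapsedMs = performance.now() - start;
  return (elapsedMs * 1e6) / (runs * length);
}

for (const length of [12000, 14000, 16000, 18000]) {
  const capacityNs = timePerElement(createWithCapacity, length);
  const pushNs = timePerElement(createWithPush, length);
  console.log(length, capacityNs.toFixed(2), pushNs.toFixed(2));
}
```

Timings from a loop like this are noisy, so in practice it helps to warm up the functions first and repeat each measurement several times.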

As you can see, in both cases (creating an array and reading from it), the performance per element drops by a factor of about 2-3 compared to arrays with 1k fewer elements.

When creating an array using the push method, this jump happens a bit earlier than when creating it with the correct capacity beforehand. I assume this happens because, when pushing to an array that is already at max capacity, more extra capacity is added than is actually needed (to avoid having to grow again soon), so the threshold for the slower performance path is hit earlier.
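
To illustrate that assumption, here is a small simulation of an over-allocating growth policy. The growth rule (`capacity + capacity / 2 + 16`) and both function names are assumptions chosen for illustration; I have not confirmed that the engine grows arrays exactly this way.

```javascript
// Assumed growth rule (illustrative only): grow by 50% plus 16 extra slots.
function grow(capacity) {
  return capacity + (capacity >> 1) + 16;
}

// Simulated backing-store capacity after pushing `length` elements one at a time.
function capacityAfterPushes(length) {
  let capacity = 0;
  for (let size = 1; size <= length; size++) {
    if (size > capacity) {
      capacity = grow(capacity); // store is full, so over-allocate
    }
  }
  return capacity;
}

for (const length of [12000, 13000, 14000, 15000, 16000]) {
  console.log(length, capacityAfterPushes(length));
}
```

Under a rule like this, a pushed array's backing store is always at least as large as its length and often noticeably larger, so any threshold that depends on capacity rather than length would be crossed at a smaller element count than with `new Array(length)`.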

If you want to see the code or test it yourself:

Edit:

I ran node using the --allow-natives-syntax flag to debug log the created arrays with