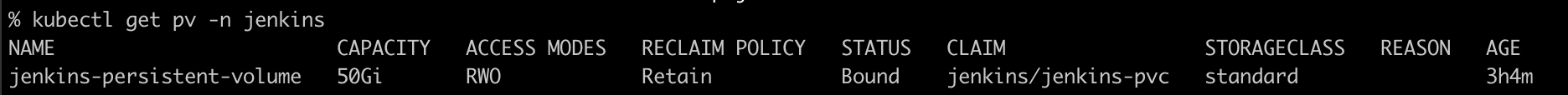

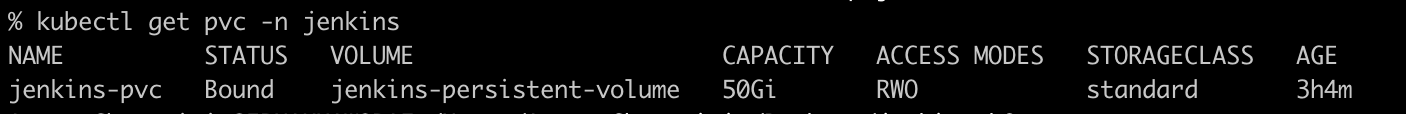

I'm trying to mount an existing Google Cloud Persistent Disk (balanced) into Jenkins running in Kubernetes. The root of the disk holds a fully configured Jenkins. I want to bring up Jenkins in k8s using the configuration already prepared on that Persistent Disk.

I'm using the latest chart from the

All files on the Persistent Disk are owned by 1000:1000 (uid:gid).

I made only one change to the official Helm chart, in the values file:

    existingClaim: "jenkins-pvc"

After running `helm install jenkins-master . -n jenkins`

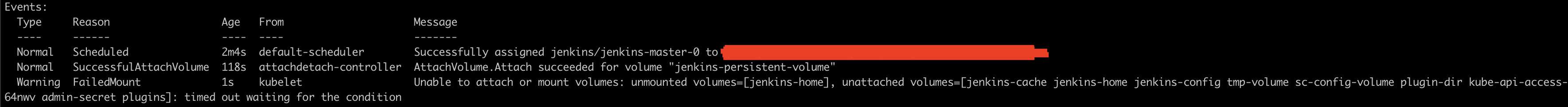

I get the following:

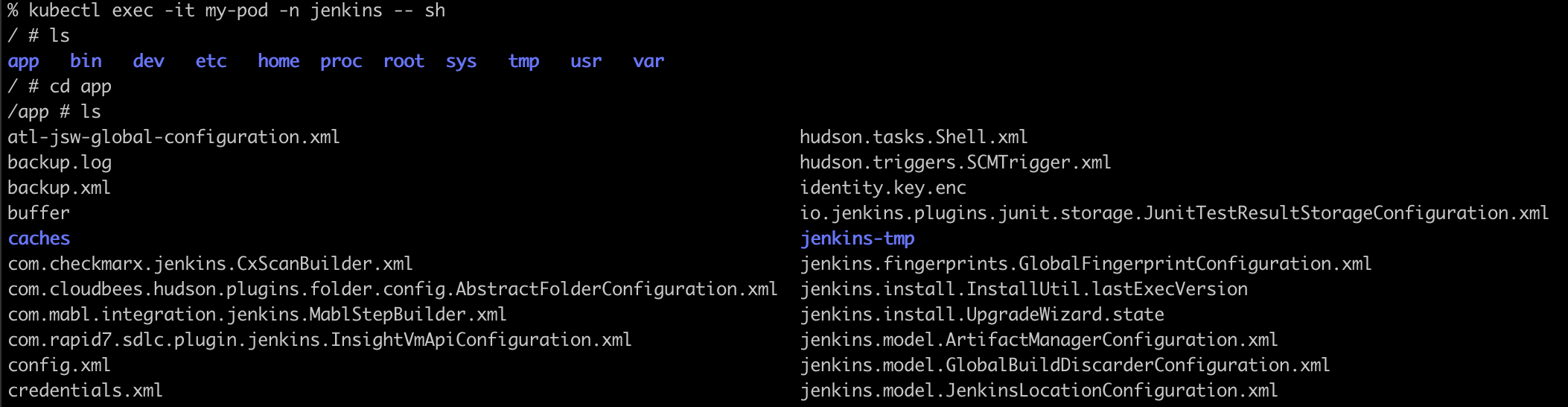

To make sure the problem is not on the GCP side, I mounted the PVC into a busybox pod, and it works perfectly:

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-pod
    spec:
      containers:
        - name: busybox
          image: busybox:1.32.0
          command:
            - "/bin/sh"
          args:
            - "-c"
            - "while true; do echo $(date) >> /app/buffer; cat /app/buffer; sleep 5; done;"
          volumeMounts:
            - name: my-volume
              mountPath: /app
      volumes:
        - name: my-volume
          persistentVolumeClaim:
            claimName: jenkins-pvc

I tried changing many values in values.yaml, and also tried older charts and even the Bitnami chart, which uses a Deployment instead of a StatefulSet, but the error is always the same. Could somebody show me the right way, please?

CodePudding user response:

Change the storageClassName of the PersistentVolume from standard:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: jenkins-persistent-volume
    spec:
      storageClassName: standard

to default:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: jenkins-persistent-volume
    spec:
      storageClassName: default

Also check which storage classes exist in your cluster:

    kubectl get sc

Alternatively, set storageClass to "" in values:

    global:
      imageRegistry: ""
      ## E.g.
      ## imagePullSecrets:
      ##   - myRegistryKeySecretName
      ##
      imagePullSecrets: []
      storageClass: "" # <-
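For illustration, here is a rough sketch of a statically provisioned PersistentVolume and claim for a pre-existing disk. The point it tries to show is that `storageClassName` is identical on both objects, since a mismatch keeps the claim from binding. Everything marked as a placeholder (project, zone, disk name, size) is an assumption, not something taken from the question, and the CSI driver is assumed to be the GKE Persistent Disk driver:

```yaml
# Sketch only: PROJECT_ID, ZONE, DISK_NAME and the 50Gi size are placeholders.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-persistent-volume
spec:
  storageClassName: ""              # must be the same value as on the claim below
  capacity:
    storage: 50Gi                   # assumed size
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain   # keep the disk if the claim is deleted
  csi:
    driver: pd.csi.storage.gke.io   # assumes the GKE Persistent Disk CSI driver
    volumeHandle: projects/PROJECT_ID/zones/ZONE/disks/DISK_NAME
    fsType: ext4
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-pvc
  namespace: jenkins
spec:
  storageClassName: ""              # same value as on the PersistentVolume
  volumeName: jenkins-persistent-volume   # bind to this specific volume
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 50Gi
```

After applying something like this, `kubectl get pv,pvc -n jenkins` should show both objects as Bound if the class names and sizes line up.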

I would have posted this as a comment, but my reputation is too low to comment.

CodePudding user response:

Try setting podSecurityContextOverride and re-installing:

    controller:
      podSecurityContextOverride:
        runAsUser: 1000
        runAsNonRoot: true
        supplementalGroups: [1000]
    persistence:
      existingClaim: "jenkins-pvc"
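One way to check whether user 1000 can actually use the volume is a throwaway pod that runs as that user, since the busybox image runs as root by default and a root test would not reveal ownership problems. The following is a minimal sketch under assumptions: the pod name `pvc-permission-check` and the mount path are hypothetical, and the claim name and namespace are the ones from the question.

```yaml
# Sketch only: runs busybox as uid/gid 1000 against the existing claim.
apiVersion: v1
kind: Pod
metadata:
  name: pvc-permission-check        # hypothetical name
  namespace: jenkins
spec:
  securityContext:
    runAsUser: 1000                 # same uid that owns the files on the disk
    runAsGroup: 1000
    fsGroup: 1000                   # ask the kubelet to make the volume group-accessible
  containers:
    - name: busybox
      image: busybox:1.32.0
      command:
        - "/bin/sh"
      args:
        - "-c"
        # Print the effective ids, list numeric ownership, then try a write.
        - "id; ls -ln /var/jenkins_home; touch /var/jenkins_home/.write-test && echo writable; sleep 3600"
      volumeMounts:
        - name: jenkins-home
          mountPath: /var/jenkins_home
  volumes:
    - name: jenkins-home
      persistentVolumeClaim:
        claimName: jenkins-pvc
```

Because the claim is presumably ReadWriteOnce, the Jenkins pod should not be holding the disk on another node while this runs. `kubectl logs pvc-permission-check -n jenkins` then shows whether the write succeeded.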