To develop a binary classifier, I am fine-tuning a ResNet18 model to distinguish between two classes of images.

To find the best learning rate (LR), I run a grid search over LR = 0.1, 0.01, 0.001, 0.0001, and 0.00001. To choose the optimal LR, I use the following recipe:

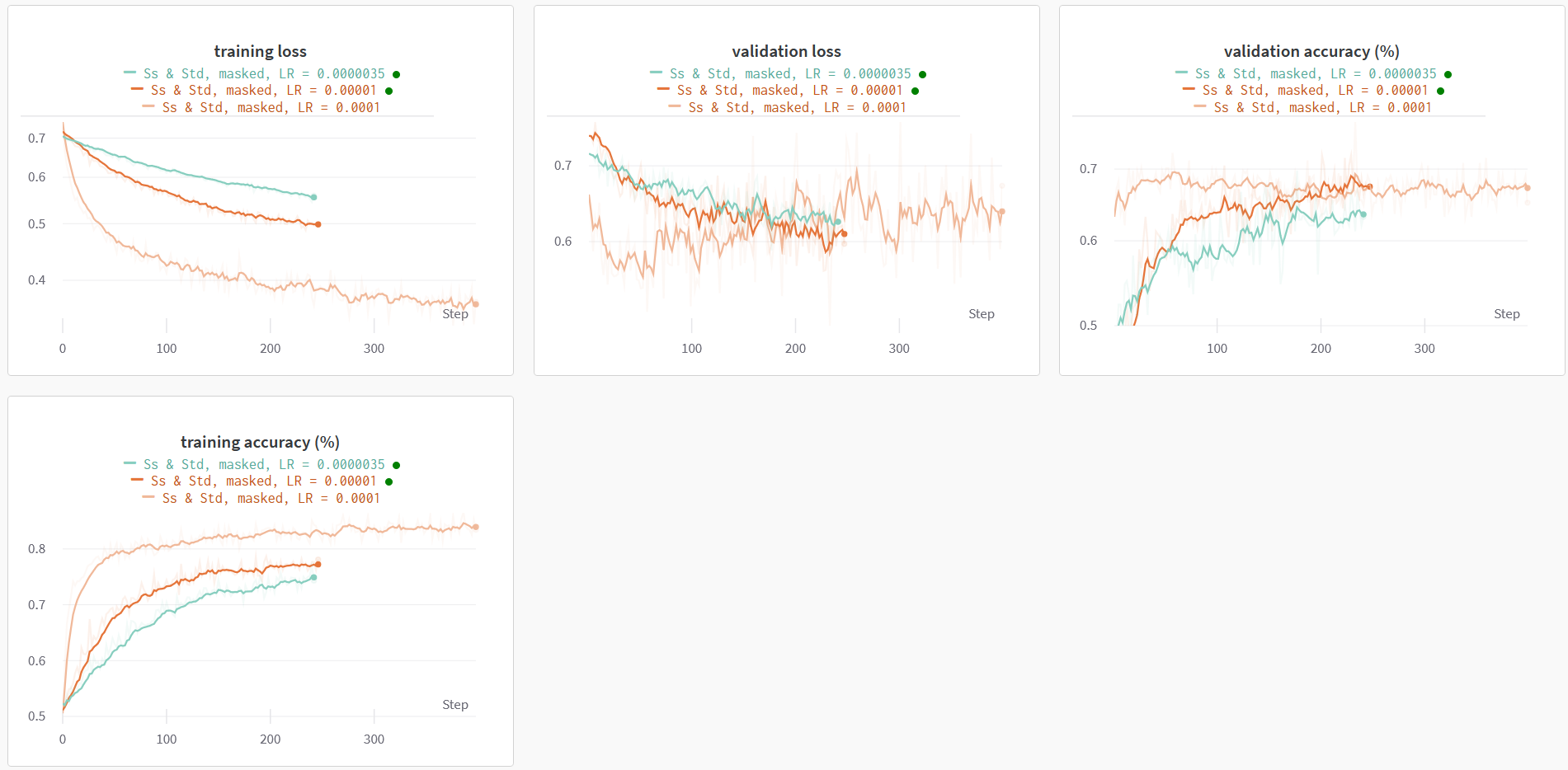

- Look for the LR for which both the training loss and the validation loss decrease as training progresses (a sign that the model is not overfitting).

- Check that both the validation accuracy and the training accuracy increase as the training proceeds.

- Stop training once the training accuracy curve (and possibly also the validation accuracy curve) flattens.
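A minimal sketch of how the flat-curve check in the last step could be automated rather than judged by eye. The function name `is_flat`, the `patience` and `min_delta` parameters, and the accuracy values are all illustrative assumptions, not taken from the actual runs:

```python
def is_flat(history, patience=3, min_delta=1e-3):
    """Return True when the last `patience` values improve on the
    best earlier value by less than `min_delta`."""
    if len(history) <= patience:
        # Not enough epochs yet to compare recent values with earlier ones.
        return False
    best_before = max(history[:-patience])
    best_recent = max(history[-patience:])
    return best_recent - best_before < min_delta


# Made-up training accuracy per epoch, for illustration only.
train_acc = [0.62, 0.71, 0.78, 0.80, 0.8003, 0.8001, 0.8004]
still_improving = [0.5, 0.6, 0.7, 0.8, 0.9]

flat_result = is_flat(train_acc)
improving_result = is_flat(still_improving)
```

The same check can be applied to the validation accuracy, or to the negated loss, since the function assumes that higher values are better.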

As a reference, you can have a look at the curves in the attached image.

Is this approach sound? I am considering various combinations of classes, and the most imbalanced split is about 30%/70%. Besides accuracy, should I also consider precision and recall? Any other suggestions are very welcome!

CodePudding user response:

If you can afford it, the best you can do is train to completion with every candidate value. Other than that, your approach is valid, but instead of inspecting the curves manually, you should base the decision on your metrics.

In deep learning, some approaches suggest training the model with each candidate value for only a few epochs (1 or 2) and keeping the value that performs best on your metrics.
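The short-run comparison can be sketched roughly as follows. Everything here is an assumption made for illustration: `pick_learning_rate` and `train_and_score` are hypothetical names, and `fake_train_and_score` is a toy stand-in that does no training at all. In practice it would fine-tune the ResNet18 for `epochs` epochs and return a validation metric.

```python
import math


def pick_learning_rate(candidates, train_and_score, epochs=2):
    """Run a short training for each candidate and return the best one.

    `train_and_score(lr, epochs)` must return a score where higher is better.
    """
    scores = {lr: train_and_score(lr, epochs) for lr in candidates}
    best_lr = max(scores, key=scores.get)
    return best_lr, scores


def fake_train_and_score(lr, epochs):
    # Toy placeholder, NOT a real training run: the score simply peaks at
    # lr = 1e-3 so that the selection logic can be exercised.
    return 1.0 - 0.1 * abs(math.log10(lr) + 3)


candidates = [0.1, 0.01, 0.001, 0.0001, 0.00001]
best_lr, scores = pick_learning_rate(candidates, fake_train_and_score)
```

Passing the training routine as a callable keeps the selection logic independent of the framework, so the same function works whether the score is accuracy, recall, or F1.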

Finally, if you have a class imbalance, you should definitely use precision and recall. Otherwise, a model that predicts every sample as the majority class reaches 70% accuracy, which looks decent even though it has learned nothing about the minority class. You don't want your model to go down that path.
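To make the majority-class problem concrete, here is a small sketch on a synthetic 30/70 split. The labels are made up, the minority class is treated as the positive class by assumption, and `precision_recall` is a hypothetical helper that returns 0.0 when a denominator is zero:

```python
def precision_recall(y_true, y_pred, positive=1):
    """Compute precision and recall for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    # By convention here, an undefined ratio (zero denominator) is 0.0.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Synthetic data: 70 majority-class samples (0), 30 minority-class samples (1).
y_true = [0] * 70 + [1] * 30
# A degenerate model that always predicts the majority class.
y_pred = [0] * 100

accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
precision, recall = precision_recall(y_true, y_pred, positive=1)
# accuracy is 0.7, while precision and recall for the minority class are 0.0
```

Accuracy alone cannot distinguish this degenerate model from a useful one, whereas recall on the minority class exposes it immediately.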