When I try to integrate Kafka with Spark, it fails.

Here is my code:

from pyspark.sql import SparkSession
from pyspark.streaming import StreamingContext
import findspark
import kafka
import json

if __name__ == "__main__":
    findspark.init("D:\\spark-3.0.1-bin-hadoop2.7\\")

    spark = (
        SparkSession.builder.appName("Kafka Pyspark Streaming Learning")
        .config("spark.jars.packages", "org.apache.spark:spark-sql-kafka-0-10_2.12:3.3.0")
        .master("local[*]")
        .getOrCreate()
    )

    ssc = StreamingContext(spark, 10)

    df = (
        spark.readStream.format("kafka")
        .option("kafka.bootstrap.servers", "localhost:9092")
        .option("subscribe", "registered_user")
        .option("startingOffsets", "earliest")
        .load()
    )

    print(df.show())

    ssc.start()
    ssc.awaitTermination()

Here is the error traceback:

Ivy Default Cache set to: C:\Users\BERNARD JOSHUA\.ivy2\cache

The jars for the packages stored in: C:\Users\BERNARD JOSHUA\.ivy2\jars

:: loading settings :: url = jar:file:/D:/spark-3.0.1-bin-hadoop2.7/jars/ivy-2.4.0.jar!/org/apache/ivy/core/settings/ivysettings.xml

org.apache.spark#spark-sql-kafka-0-10_2.12 added as a dependency

:: resolving dependencies :: org.apache.spark#spark-submit-parent-37ff0b88-bb9a-4566-ac9e-d00b45eb6881;1.0

confs: [default]

found org.apache.spark#spark-sql-kafka-0-10_2.12;3.3.0 in central

found org.apache.spark#spark-token-provider-kafka-0-10_2.12;3.3.0 in central

found org.apache.kafka#kafka-clients;2.8.1 in central

found org.lz4#lz4-java;1.8.0 in central

found org.xerial.snappy#snappy-java;1.1.8.4 in central

found org.slf4j#slf4j-api;1.7.32 in central

found org.apache.hadoop#hadoop-client-runtime;3.3.2 in central

found org.spark-project.spark#unused;1.0.0 in central

found org.apache.hadoop#hadoop-client-api;3.3.2 in central

found commons-logging#commons-logging;1.1.3 in central

found com.google.code.findbugs#jsr305;3.0.0 in central

found org.apache.commons#commons-pool2;2.11.1 in central

:: resolution report :: resolve 653ms :: artifacts dl 22ms

:: modules in use:

com.google.code.findbugs#jsr305;3.0.0 from central in [default]

commons-logging#commons-logging;1.1.3 from central in [default]

org.apache.commons#commons-pool2;2.11.1 from central in [default]

org.apache.hadoop#hadoop-client-api;3.3.2 from central in [default]

org.apache.hadoop#hadoop-client-runtime;3.3.2 from central in [default]

org.apache.kafka#kafka-clients;2.8.1 from central in [default]

org.apache.spark#spark-sql-kafka-0-10_2.12;3.3.0 from central in [default]

org.apache.spark#spark-token-provider-kafka-0-10_2.12;3.3.0 from central in [default]

org.lz4#lz4-java;1.8.0 from central in [default]

org.slf4j#slf4j-api;1.7.32 from central in [default]

org.spark-project.spark#unused;1.0.0 from central in [default]

org.xerial.snappy#snappy-java;1.1.8.4 from central in [default]

---------------------------------------------------------------------
|                  |            modules            ||   artifacts   |
|       conf       | number| search|dwnlded|evicted|| number|dwnlded|
---------------------------------------------------------------------
|      default     |   12  |   0   |   0   |   0   ||   12  |   0   |
---------------------------------------------------------------------

:: retrieving :: org.apache.spark#spark-submit-parent-37ff0b88-bb9a-4566-ac9e-d00b45eb6881

confs: [default]

0 artifacts copied, 12 already retrieved (0kB/19ms)

22/09/10 00:17:00 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/org.apache.spark_spark-sql-kafka-0-10_2.12-3.3.0.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\org.apache.spark_spark-sql-kafka-0-10_2.12-3.3.0.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/org.apache.spark_spark-token-provider-kafka-0-10_2.12-3.3.0.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\org.apache.spark_spark-token-provider-kafka-0-10_2.12-3.3.0.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/org.apache.kafka_kafka-clients-2.8.1.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\org.apache.kafka_kafka-clients-2.8.1.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/com.google.code.findbugs_jsr305-3.0.0.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\com.google.code.findbugs_jsr305-3.0.0.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/org.apache.commons_commons-pool2-2.11.1.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\org.apache.commons_commons-pool2-2.11.1.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/org.spark-project.spark_unused-1.0.0.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\org.spark-project.spark_unused-1.0.0.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/org.apache.hadoop_hadoop-client-runtime-3.3.2.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\org.apache.hadoop_hadoop-client-runtime-3.3.2.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/org.lz4_lz4-java-1.8.0.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\org.lz4_lz4-java-1.8.0.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/org.xerial.snappy_snappy-java-1.1.8.4.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\org.xerial.snappy_snappy-java-1.1.8.4.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/org.slf4j_slf4j-api-1.7.32.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\org.slf4j_slf4j-api-1.7.32.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/org.apache.hadoop_hadoop-client-api-3.3.2.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\org.apache.hadoop_hadoop-client-api-3.3.2.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Failed to add file:///C:/Users/BERNARD JOSHUA/.ivy2/jars/commons-logging_commons-logging-1.1.3.jar to Spark environment

java.io.FileNotFoundException: Jar C:\Users\BERNARD JOSHUA\.ivy2\jars\commons-logging_commons-logging-1.1.3.jar not found

at org.apache.spark.SparkContext.addLocalJarFile$1(SparkContext.scala:1833)

at org.apache.spark.SparkContext.addJar(SparkContext.scala:1887)

at org.apache.spark.SparkContext.$anonfun$new$11(SparkContext.scala:490)

at org.apache.spark.SparkContext.$anonfun$new$11$adapted(SparkContext.scala:490)

at scala.collection.mutable.ResizableArray.foreach(ResizableArray.scala:62)

at scala.collection.mutable.ResizableArray.foreach$(ResizableArray.scala:55)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:49)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:490)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 ERROR SparkContext: Error initializing SparkContext.

java.io.FileNotFoundException: File file:/C:/Users/BERNARD JOSHUA/.ivy2/jars/org.apache.spark_spark-sql-kafka-0-10_2.12-3.3.0.jar does not exist

at org.apache.hadoop.fs.RawLocalFileSystem.deprecatedGetFileStatus(RawLocalFileSystem.java:611)

at org.apache.hadoop.fs.RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:824)

at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:601)

at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:428)

at org.apache.spark.SparkContext.addFile(SparkContext.scala:1534)

at org.apache.spark.SparkContext.addFile(SparkContext.scala:1498)

at org.apache.spark.SparkContext.$anonfun$new$12(SparkContext.scala:494)

at org.apache.spark.SparkContext.$anonfun$new$12$adapted(SparkContext.scala:494)

at scala.collection.immutable.List.foreach(List.scala:392)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:494)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(Unknown Source)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(Unknown Source)

at java.lang.reflect.Constructor.newInstance(Unknown Source)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:247)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:238)

at py4j.commands.ConstructorCommand.invokeConstructor(ConstructorCommand.java:80)

at py4j.commands.ConstructorCommand.execute(ConstructorCommand.java:69)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Unknown Source)

22/09/10 00:17:02 WARN MetricsSystem: Stopping a MetricsSystem that is not running

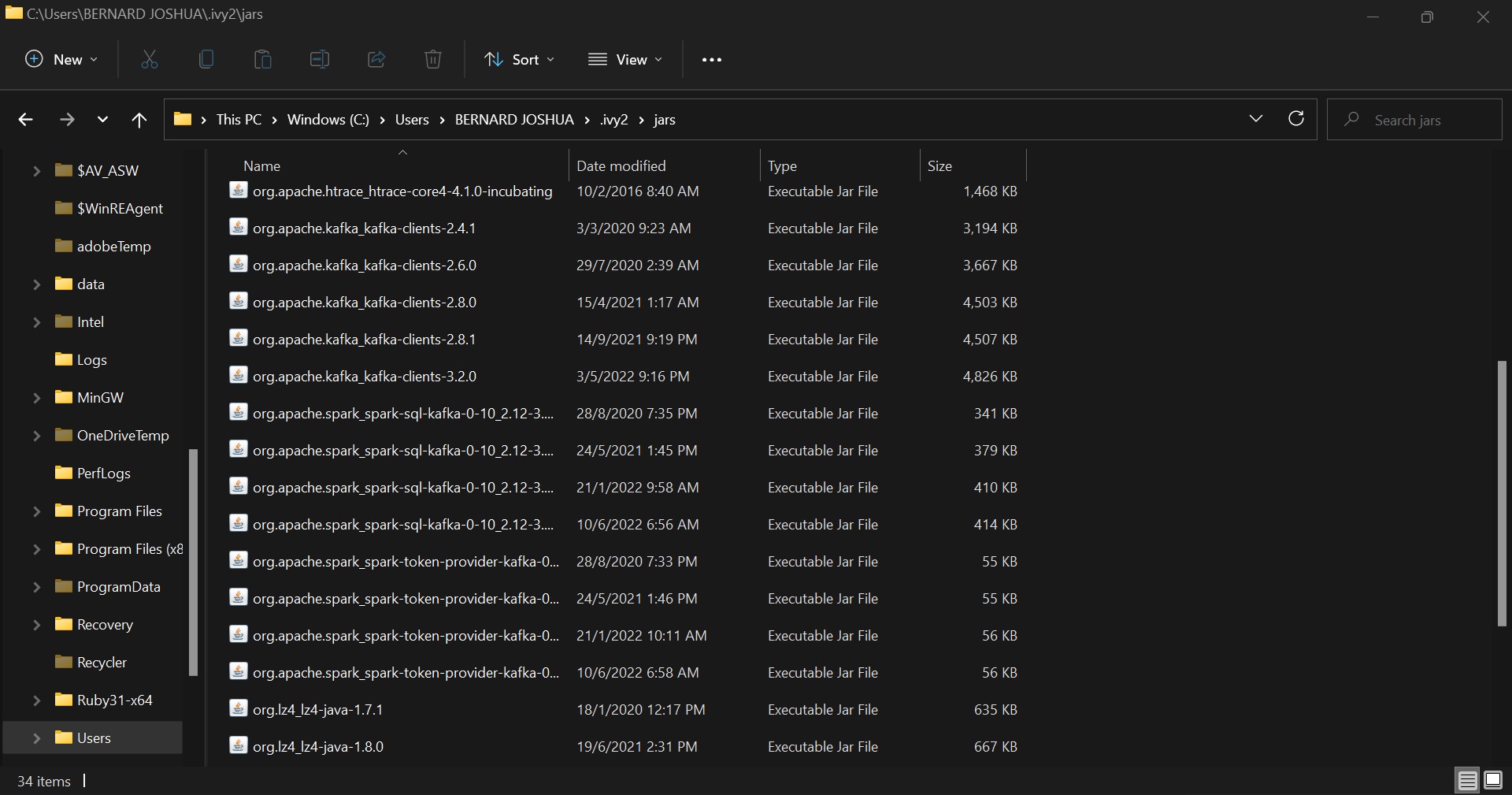

I also have all the dependencies installed in my .ivy2 file:

Answer:

Firstly, Spark might have issues with the space in your Windows user name. The error says that jars under C:\Users\BERNARD JOSHUA\.ivy2\jars are not found, which is "true" in the sense that the space gets URI-encoded, so the path being looked up likely no longer matches the folder on disk.
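To make the space problem concrete, here is a small sketch using only the Python standard library. It percent-encodes the Ivy jar path from the traceback and shows that the encoded form differs from the literal one. The `has_whitespace` helper and the `D:/ivy2` directory are hypothetical names chosen for illustration. `spark.jars.ivy` is a real Spark setting for the Ivy user directory, but whether moving it resolves this particular failure is an assumption I have not verified on your machine.

```python
from urllib.parse import quote, unquote

# Ivy jar directory as reported in the traceback (forward slashes for brevity).
ivy_jars = "C:/Users/BERNARD JOSHUA/.ivy2/jars"

# Percent-encode the path the way a file: URI would; the space becomes %20.
encoded = quote(ivy_jars, safe="/:")
print(encoded)              # C:/Users/BERNARD%20JOSHUA/.ivy2/jars
print(encoded == ivy_jars)  # False: the encoded path is a different string


def has_whitespace(path: str) -> bool:
    """Hypothetical helper: flag paths that contain any whitespace."""
    return any(ch.isspace() for ch in path)


# Hypothetical workaround: point Ivy at a directory without spaces.
# "D:/ivy2" is an illustrative location, not something from the question.
safe_ivy_dir = "D:/ivy2"
extra_conf = {"spark.jars.ivy": safe_ivy_dir}

print(has_whitespace(ivy_jars))      # True
print(has_whitespace(safe_ivy_dir))  # False
```

The `extra_conf` entry would be passed through `SparkSession.builder.config(key, value)` before the session is created, alongside `spark.jars.packages`.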

Secondly, you're running spark-3.0.1, so you should be using version 3.0.1 of spark-sql-kafka-0-10 rather than 3.3.0; the connector version needs to match the Spark version.
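One way to keep the two versions in step is to derive the package coordinate from the Spark version instead of hard-coding it. The sketch below assumes the Scala suffix `2.12` (taken from the coordinate in the question) and uses two hypothetical helper names, `kafka_package` and `build_session`. It also assumes `pyspark.__version__` reflects the Spark installation you actually run against, which is worth checking when `findspark` points at a separate Spark directory.

```python
def kafka_package(spark_version: str, scala_version: str = "2.12") -> str:
    """Build the Maven coordinate of the Kafka connector for a Spark version."""
    return f"org.apache.spark:spark-sql-kafka-0-10_{scala_version}:{spark_version}"


def build_session(app_name: str):
    """Create a local SparkSession whose Kafka connector matches PySpark."""
    # Imported lazily so kafka_package() can be used without PySpark installed.
    import pyspark
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder.appName(app_name)
        .config("spark.jars.packages", kafka_package(pyspark.__version__))
        .master("local[*]")
        .getOrCreate()
    )


# For the Spark build named in the question:
print(kafka_package("3.0.1"))
# org.apache.spark:spark-sql-kafka-0-10_2.12:3.0.1
```

Calling `build_session("Kafka Pyspark Streaming Learning")` would then request the connector that matches the installed PySpark rather than 3.3.0.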

Alternatively, upgrade Spark to 3.3.0 so that it matches the connector version you are already requesting.

See the example I wrote here: https://github.com/OneCricketeer/docker-stacks/blob/master/hadoop-spark/spark-notebooks/kafka-sql.ipynb