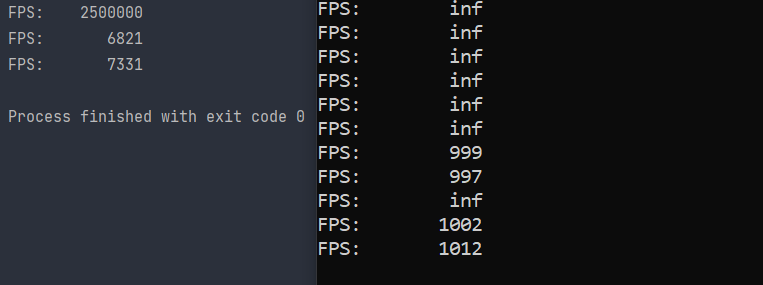

I am running the same OpenGL executable from CLion and from cmd, but the two show different output. CLion prints the correct FPS value, while cmd prints inf and only sometimes the number. Printing with std::cout instead of printf gives the same result.

(I've omitted all OpenGL calls from the code below since they aren't relevant to the problem.)

The code is:

    int i = 0;
    auto lastTime = std::chrono::high_resolution_clock::now();

    while ... {
        auto currentTime = std::chrono::high_resolution_clock::now();

        // Print only on every 10000th iteration
        if (i % 10000 == 0) {
            // Duration of the most recent single iteration, in seconds
            auto deltaTime = std::chrono::duration_cast<std::chrono::duration<float>>(currentTime - lastTime).count();
            printf("FPS: %.0f\n", 1.0f / deltaTime);
        }

        i++;
        lastTime = currentTime;
    }

In the screenshot, CLion is on the left and cmd is on the right.

CodePudding user response:

As Peter pointed out, the problem is that some iterations take less time than the resolution of the clock being used, so deltaTime comes out as 0 and 1.0f / deltaTime evaluates to inf. How often that happens depends on the environment in which the program is executed, which is why CLion and cmd behave differently. One solution is to measure the time for X iterations (10000 in the following example) and then calculate the average time per iteration:

    int i = 0;
    auto lastTime = std::chrono::high_resolution_clock::now();

    while ... {
        if (++i == 10000) {
            auto currentTime = std::chrono::high_resolution_clock::now();
            auto deltaTime = std::chrono::duration_cast<std::chrono::duration<float>>(currentTime - lastTime).count();
            deltaTime /= 10000.0f; // average time for 1 iteration
            printf("FPS: %.0f\n", 1.0f / deltaTime);
            lastTime = currentTime;
            i = 0; // reset counter to prevent overflow in long runs
        }
    }
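The same averaging idea can be wrapped in a small helper so the loop body stays short. This is only a sketch under a few assumptions: the `FpsCounter` class and its `tick` method are hypothetical names that do not appear in the original code, it needs C++17 for `std::optional`, and it swaps in `std::chrono::steady_clock` for `high_resolution_clock` because the latter may be an alias for a non-monotonic clock on some implementations. It also skips the report when the clock has not advanced at all, instead of printing inf.

```cpp
#include <cassert>
#include <chrono>
#include <cstdio>
#include <optional>

// Hypothetical helper (not part of the original post): reports the average
// FPS once per `window` frames instead of timing every single iteration.
class FpsCounter {
public:
    using Clock = std::chrono::steady_clock;  // assumption: monotonic clock preferred

    explicit FpsCounter(int window, Clock::time_point start = Clock::now())
        : window_(window), last_(start) {
        assert(window > 0);
    }

    // Call once per frame. Returns the average FPS when a window of frames
    // completes, and std::nullopt on all other calls.
    std::optional<float> tick(Clock::time_point now = Clock::now()) {
        if (++count_ < window_) {
            return std::nullopt;
        }
        const float seconds = std::chrono::duration<float>(now - last_).count();
        last_ = now;
        count_ = 0;  // reset so the counter never overflows in long runs
        if (seconds <= 0.0f) {
            return std::nullopt;  // clock did not advance; avoid dividing by zero
        }
        return static_cast<float>(window_) / seconds;
    }

private:
    int window_;
    int count_ = 0;
    Clock::time_point last_;
};

// Possible usage inside the render loop:
//
//     FpsCounter counter(10000);
//     while (/* running */) {
//         if (auto fps = counter.tick()) {
//             std::printf("FPS: %.0f\n", *fps);
//         }
//     }
```

Passing the time point as a parameter is a design choice made here so the helper can be exercised with fixed timestamps; in the loop itself the default argument simply reads the clock.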