I am trying to transfer on-premises files to Azure Blob Storage. However, of the 5 files that I have, 1 contains no data, so I can't map its schema. Is there a way to filter out this file while importing to Azure? Or would I have to import the files into Azure Blob Storage as they are and then filter them into another blob storage? If so, how would I do this?

CodePudding user response:

If your on-premises source is your local file system, first copy the files, with their folder structure, to a temporary blob container using AzCopy with a SAS token. Please refer to this thread for details.

Then use an ADF pipeline to filter out the empty files and store the remaining files in the final blob container.

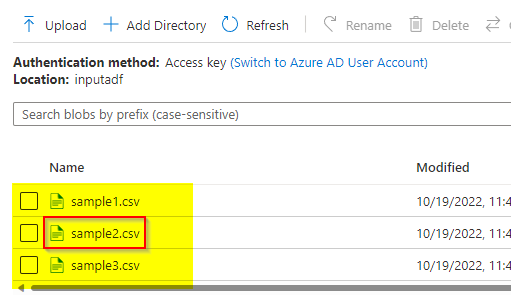

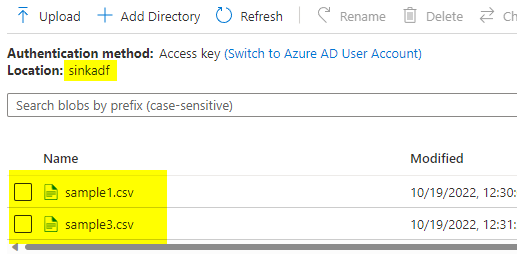

These are my files in the blob container; sample2.csv is an empty file.

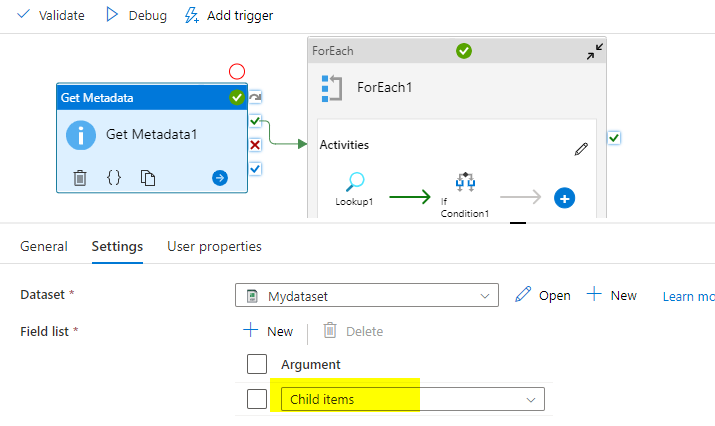

First, use a Get Metadata activity, with Child items added to its field list, to get the list of files in that container.

It returns all the files as an array. Pass that array to the ForEach activity as @activity('Get Metadata1').output.childItems
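
For reference, the childItems array is a list of objects, each with a name and a type. The sketch below mimics that shape in plain Python to show what each ForEach iteration sees as item(). The file names sample1.csv and sample3.csv are made up for illustration (only sample2.csv is mentioned here), and the type check is an extra guard that is not part of the pipeline itself.

```python
# Illustrative shape of the Get Metadata output.
# sample1.csv and sample3.csv are hypothetical names.
get_metadata_output = {
    "childItems": [
        {"name": "sample1.csv", "type": "File"},
        {"name": "sample2.csv", "type": "File"},
        {"name": "sample3.csv", "type": "File"},
    ]
}


def file_names(output):
    """Return the names of the File entries in output['childItems'], skipping folders."""
    return [item["name"] for item in output["childItems"] if item["type"] == "File"]


print(file_names(get_metadata_output))
```

Inside the ForEach, the same value is available as @item().name.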

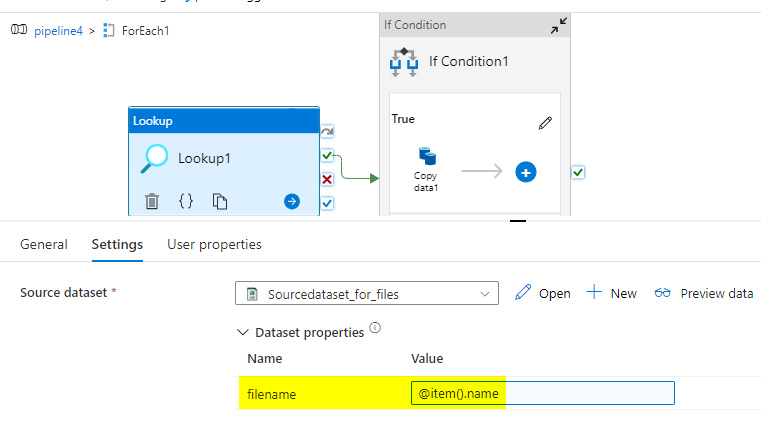

Inside the ForEach, use a Lookup activity (with First row only unchecked, so that the output includes a count) to get the row count of each file. If the count is not 0, use a Copy activity to copy the file.

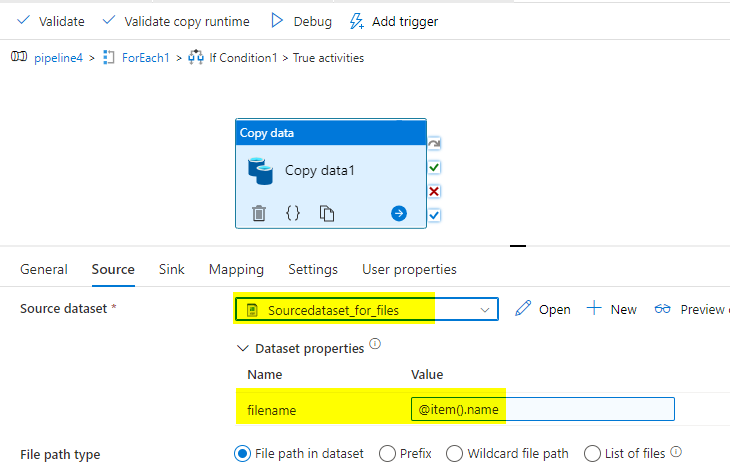

Use a dataset parameter to pass in the file name, for example @item().name from the ForEach.

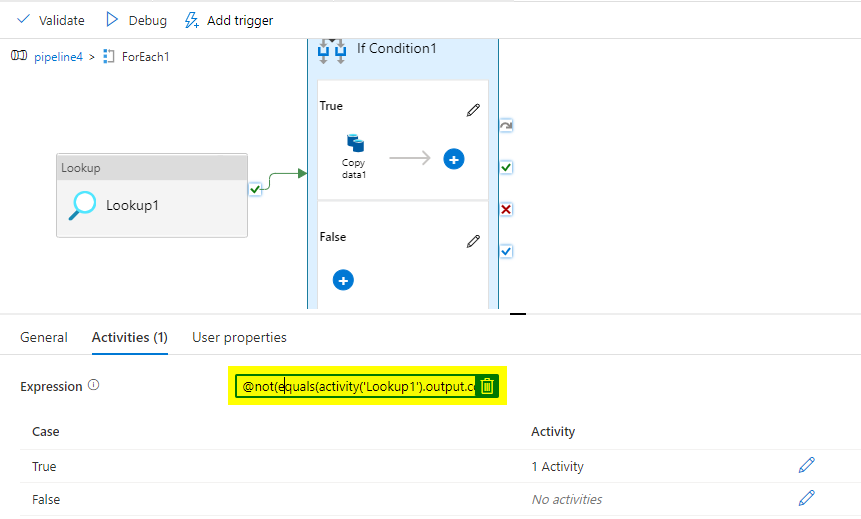

In the If Condition activity, use the condition below.

@not(equals(activity('Lookup1').output.count,0))

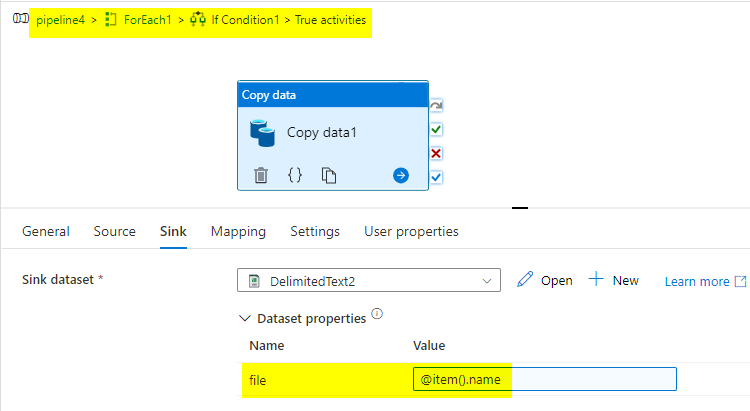

Inside the True activities, add a Copy activity and set its sink to another blob container.

Run this pipeline and you will see that the empty file is filtered out.
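
To make the control flow concrete, here is a minimal local sketch of the same logic in Python. It is not ADF code. It assumes CSV files in a local folder, treats a header-only file as empty when has_header is true, and uses the hypothetical helper names row_count and copy_non_empty.

```python
import csv
import shutil
from pathlib import Path


def row_count(path, has_header=True):
    """Count non-blank data rows in a CSV file (roughly the role of the Lookup count)."""
    with open(path, newline="") as f:
        rows = sum(1 for row in csv.reader(f) if row)
    # Assumption: when has_header is true, the first row is a header and is not counted.
    return max(rows - 1, 0) if has_header else rows


def copy_non_empty(src_dir, dst_dir, has_header=True):
    """Copy every CSV with at least one data row from src_dir to dst_dir."""
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(src.glob("*.csv")):       # Get Metadata + ForEach
        if row_count(path, has_header) != 0:     # Lookup + If Condition
            shutil.copy2(path, dst / path.name)  # Copy activity
            copied.append(path.name)
    return copied
```

Calling copy_non_empty("staging", "final") would copy only the files that have data rows and return their names, which is the same outcome the pipeline produces in the final container.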

If your on-premises source is SQL, use a Lookup activity to get the list of tables and pass it to a ForEach. Inside the ForEach, follow the same procedure for each table.
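
The table variant follows the same pattern: list the tables, count the rows in each, and keep only the tables whose count is not 0. The sketch below illustrates this with Python's built-in sqlite3 module as a stand-in for the real database. The table names orders and empty_table are made up, and the catalog query is SQLite-specific (on SQL Server you would typically read the table list from INFORMATION_SCHEMA.TABLES instead).

```python
import sqlite3


def non_empty_tables(conn):
    """Return the names of user tables that contain at least one row."""
    tables = [
        r[0]
        for r in conn.execute(
            "SELECT name FROM sqlite_master "
            "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
        )
    ]
    result = []
    for name in tables:
        # Names come from the catalog, not from user input; they are quoted anyway.
        count = conn.execute(f'SELECT COUNT(*) FROM "{name}"').fetchone()[0]
        if count != 0:
            result.append(name)
    return result


# Hypothetical demo data: one table with a row, one without.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER)")
conn.execute("CREATE TABLE empty_table (id INTEGER)")
conn.execute("INSERT INTO orders VALUES (1)")
print(non_empty_tables(conn))  # prints ['orders']
```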

If your on-premises source is something other than the above, first try to copy all the files to blob storage, then follow the same procedure.