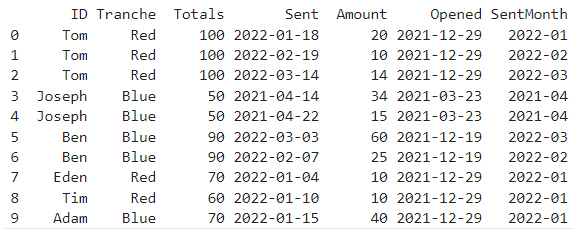

I've seen plenty of examples and I can't make them work on my table. I have this table:

data = {'ID': ['Tom', 'Tom','Tom','Joseph','Joseph','Ben','Ben','Eden','Tim','Adam'], 'Tranche': ['Red', 'Red', 'Red', 'Blue','Blue','Blue','Blue','Red','Red','Blue'],'Totals':[100,100,100,50,50,90,90,70,60,70],'Sent':['2022-01-18','2022-02-19','2022-03-14','2021-04-14','2021-04-22','2022-03-03','2022-02-07','2022-01-04','2022-01-10','2022-01-15'],'Amount':[20,10,14,34,15,60,25,10,10,40],'Opened':['2021-12-29','2021-12-29','2021-12-29','2021-03-23','2021-03-23','2021-12-19','2021-12-19','2021-12-29','2021-12-29','2021-12-29']}

df = pd.DataFrame(data)

df["Opened"] = df["Opened"].astype('datetime64[ns]')

df["Sent"] = df["Sent"].astype('datetime64[ns]')

df['SentMonth'] = pd.to_datetime(df['Sent']).dt.to_period('M')

I want every ID to have every SentMonth, with amount zero if there is no amount (fillna will do if I can get to that point). I need this to make a later .cumsum() give correct results.

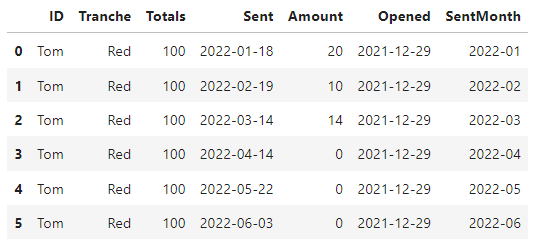

e.g. for Tom, the output should be something like this, but just more rows with more SentMonths. The day in the Sent column does not matter - but there must be one row for every month:

First solution that is always given is reindexing. I can't do this as every SentMonth is duplicated, and Sent will also always have duplicates in my full table.

df.resample('M').sum() gives the error: Only valid with DatetimeIndex, TimedeltaIndex or PeriodIndex, but got an instance of 'RangeIndex'.

Which I tried to fix by doing

df1 = df.set_index('SentMonth').groupby('ID').resample('1D')['Amount'].ffill()

But this brings me back to the unique index error.

Is there any other approach that can get around this? Thanks! :)

CodePudding user response:

One option is with complete from pyjanitor to expose missing rows:

# pip install pyjanitor

import pandas as pd

import janitor

# build a sequence of periods from the 1st month in 2022:

periods={'SentMonth':pd.period_range('2022-01', '2022-12', freq='M')}

#Build the new dataframe:

out = (df

.complete(('ID','Tranche','Totals', 'Opened'), periods)

.fillna({'Amount':0}, downcast='infer')

)

# you can change this, since `Sent` is not important

out.Sent = out.Sent.fillna(out.SentMonth.astype('datetime64[ns]'))

out

ID Tranche Totals Sent Amount Opened SentMonth

0 Tom Red 100 2022-01-18 20 2021-12-29 2022-01

1 Tom Red 100 2022-02-19 10 2021-12-29 2022-02

2 Tom Red 100 2022-03-14 14 2021-12-29 2022-03

3 Tom Red 100 2022-04-01 0 2021-12-29 2022-04

4 Tom Red 100 2022-05-01 0 2021-12-29 2022-05

.. ... ... ... ... ... ... ...

69 Adam Blue 70 2022-10-01 0 2021-12-29 2022-10

70 Adam Blue 70 2022-11-01 0 2021-12-29 2022-11

71 Adam Blue 70 2022-12-01 0 2021-12-29 2022-12

72 Joseph Blue 50 2021-04-14 34 2021-03-23 2021-04

73 Joseph Blue 50 2021-04-22 15 2021-03-23 2021-04

[74 rows x 7 columns]

You can sort the dates in ascending/descending order

CodePudding user response:

This would be a solution:

month_range = df["SentMonth"].unique()

df_per_user = dict()

for user_id, user_df in df.groupby("ID"):

user_df.set_index("SentMonth", inplace=True)

duplicated_rows = user_df.index.duplicated()

if duplicated_rows.any():

keep_rows = user_df[duplicated_rows]

user_df = user_df[~duplicated_rows]

df_per_user[user_id] = user_df.reindex(month_range).sort_index()

if duplicated_rows.any():

df_per_user[user_id] = pd.concat([df_per_user[user_id], keep_rows]).sort_index()

all_df = pd.concat(df_per_user)

So you have to take care for the ID with duplicated SentMonth (Joseph). The result looks like:

ID Tranche Totals Sent Amount Opened

SentMonth

Adam 2021-04 NaN NaN NaN NaT NaN NaT

2022-01 Adam Blue 70.0 2022-01-15 40.0 2021-12-29

2022-02 NaN NaN NaN NaT NaN NaT

2022-03 NaN NaN NaN NaT NaN NaT

Ben 2021-04 NaN NaN NaN NaT NaN NaT

2022-01 NaN NaN NaN NaT NaN NaT

2022-02 Ben Blue 90.0 2022-02-07 25.0 2021-12-19

2022-03 Ben Blue 90.0 2022-03-03 60.0 2021-12-19

Eden 2021-04 NaN NaN NaN NaT NaN NaT

2022-01 Eden Red 70.0 2022-01-04 10.0 2021-12-29

2022-02 NaN NaN NaN NaT NaN NaT

2022-03 NaN NaN NaN NaT NaN NaT

Joseph 2021-04 Joseph Blue 50.0 2021-04-14 34.0 2021-03-23

2021-04 Joseph Blue 50.0 2021-04-22 15.0 2021-03-23

2022-01 NaN NaN NaN NaT NaN NaT

2022-02 NaN NaN NaN NaT NaN NaT

2022-03 NaN NaN NaN NaT NaN NaT

Tim 2021-04 NaN NaN NaN NaT NaN NaT

2022-01 Tim Red 60.0 2022-01-10 10.0 2021-12-29

2022-02 NaN NaN NaN NaT NaN NaT

2022-03 NaN NaN NaN NaT NaN NaT

Tom 2021-04 NaN NaN NaN NaT NaN NaT

2022-01 Tom Red 100.0 2022-01-18 20.0 2021-12-29

2022-02 Tom Red 100.0 2022-02-19 10.0 2021-12-29

2022-03 Tom Red 100.0 2022-03-14 14.0 2021-12-29