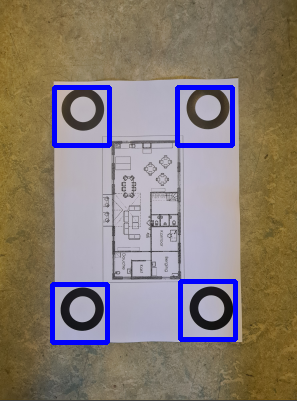

I am trying to recognize four identical fiducial marks on a map. With help from the internet I have put something together, but the results are far from perfect, so I am looking for ways to improve the search.

What I tried so far:

- Changing the threshold

- Trying different `cv2.matchTemplate` methods

- Making the image and the template smaller

This is my code:

```python
import cv2
import numpy as np
from imutils.object_detection import non_max_suppression

# Reading and resizing the image
big_image = cv2.imread('20221028_093830.jpg')
scale_percent = 10  # percent of original size
width = int(big_image.shape[1] * scale_percent / 100)
height = int(big_image.shape[0] * scale_percent / 100)
dim = (width, height)
img = cv2.resize(big_image, dim, interpolation=cv2.INTER_AREA)

temp = cv2.imread('try_fiduc.png')

# save the template dimensions (shape is rows, cols = height, width)
H, W = temp.shape[:2]

# Converting them to grayscale
img_gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
temp_gray = cv2.cvtColor(temp, cv2.COLOR_BGR2GRAY)

# Blur the image
img_blurred = cv2.GaussianBlur(img_gray, (7, 7), 0)

# Increasing contrast
# (note: uint8 arithmetic wraps around above 255;
#  cv2.convertScaleAbs(img_blurred, alpha=3) would saturate instead)
img_contrast = img_blurred * 3

# Passing the image to matchTemplate method
match = cv2.matchTemplate(
    image=img_contrast, templ=temp_gray,
    method=cv2.TM_CCOEFF)

# Define a minimum threshold
thresh = 6000000

# Select rectangles with confidence greater than threshold
(y_points, x_points) = np.where(match >= thresh)

# initialize our list of rectangles
boxes = list()

# loop over the starting (x, y)-coordinates
for (x, y) in zip(x_points, y_points):
    # update our list of rectangles
    boxes.append((x, y, x + W, y + H))

# apply non-maxima suppression to the rectangles
# this collapses each cluster of overlapping boxes into a single bounding box
boxes = non_max_suppression(np.array(boxes))

# loop over the final bounding boxes
for (x1, y1, x2, y2) in boxes:
    # draw the bounding box on the image
    cv2.rectangle(img, (x1, y1), (x2, y2), (255, 0, 0), 3)

# Show the template and the final output
cv2.imshow("Template", temp_gray)
cv2.imshow("Image", img_contrast)
cv2.imshow("After NMS", img)
cv2.waitKey(0)

# destroy all the windows manually to be on the safe side
cv2.destroyAllWindows()
```

What other ways are there to improve the template matching? Ultimately I want to recognize the marks from a greater distance without getting false matches. Any help would be appreciated.

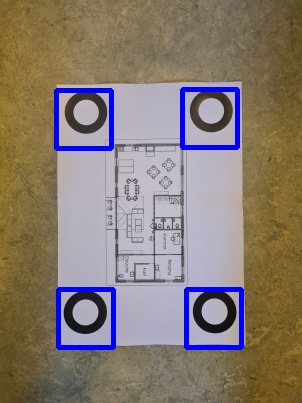

Answer:

Here is how I would do it in Python/OpenCV. It is mostly the same as your code, with a few changes.

First, I would not bother computing `dim` for the resize. Just use `scale_percent/100` as a fraction: `cv2.resize` accepts the scale factors `fx` and `fy` in place of an explicit output size (pass `(0, 0)` as the size).

Second, I would threshold both images and invert the template, so that you are matching black rings in both the image and the template.

Third, I would use `TM_SQDIFF`, where a lower score means a better match, and keep the locations whose score is below a threshold.
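To see why lower is better with `TM_SQDIFF`, here is a plain-NumPy version of its score, R(x, y) = sum of (T - I)² over the template window, for illustration only (OpenCV computes it far more efficiently). The helper name `sqdiff_reference` and the random binary test data are hypothetical.

```python
import numpy as np


def sqdiff_reference(image, templ):
    """Plain-NumPy TM_SQDIFF score, for illustration only.

    R[y, x] = sum over the template window of (templ - image window)**2,
    so 0 means a pixel-perfect match and larger values mean worse matches.
    """
    img = image.astype(np.float64)
    t = templ.astype(np.float64)
    th, tw = t.shape
    out = np.empty((img.shape[0] - th + 1, img.shape[1] - tw + 1))
    for y in range(out.shape[0]):
        for x in range(out.shape[1]):
            out[y, x] = np.sum((img[y:y + th, x:x + tw] - t) ** 2)
    return out


# Hypothetical binary (0/255) data, like the output of the Otsu thresholding step
rng = np.random.default_rng(1)
image = rng.choice(np.array([0, 255], dtype=np.uint8), size=(40, 50))
templ = image[10:22, 15:27].copy()
templ[0, :3] = 255 - templ[0, :3]   # flip 3 pixels so the match is not perfect

r = sqdiff_reference(image, templ)
y, x = (int(v) for v in np.unravel_index(np.argmin(r), r.shape))
print((x, y), r[y, x] / 255**2)     # best location and number of mismatched pixels
```

On 0/255 images every mismatched pixel adds 255² = 65025 to the score, so the threshold of 50000000 used below tolerates roughly 50000000 / 65025 ≈ 769 mismatched pixels (the working range of 40000000 to 60000000 is about 615 to 923 pixels). Because that count depends on the template's area, the threshold would need rescaling if the template size changes.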

```python
import cv2
import numpy as np
from imutils.object_detection import non_max_suppression

# Reading and resizing the image
big_image = cv2.imread('diagram.jpg')
scale_percent = 10  # percent of original size
scale = scale_percent / 100
img = cv2.resize(big_image, (0, 0), fx=scale, fy=scale, interpolation=cv2.INTER_AREA)

temp = cv2.imread('ring.png')

# save the template dimensions (shape is rows, cols = height, width)
H, W = temp.shape[:2]

# Converting them to grayscale
img_gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
temp_gray = cv2.cvtColor(temp, cv2.COLOR_BGR2GRAY)

# threshold (and invert template)
img_thresh = cv2.threshold(img_gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)[1]
temp_thresh = cv2.threshold(temp_gray, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)[1]

# Passing the image to matchTemplate method
match = cv2.matchTemplate(
    image=img_thresh, templ=temp_thresh,
    method=cv2.TM_SQDIFF)

min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(match)
print(min_val, max_val)

# Define a threshold
# for this image, thresh between 40000000 and 60000000 works
thresh = 50000000

# Select rectangles with a score less than threshold (TM_SQDIFF: lower = better)
(y_points, x_points) = np.where(match <= thresh)

# initialize our list of rectangles
boxes = list()

# loop over the starting (x, y)-coordinates
for (x, y) in zip(x_points, y_points):
    # update our list of rectangles
    boxes.append((x, y, x + W, y + H))

# apply non-maxima suppression to the rectangles
# this collapses each cluster of overlapping boxes into a single bounding box
boxes = non_max_suppression(np.array(boxes))

# loop over the final bounding boxes
result = img.copy()
for (x1, y1, x2, y2) in boxes:
    # draw the bounding box on the image
    cv2.rectangle(result, (x1, y1), (x2, y2), (255, 0, 0), 3)

# save result
cv2.imwrite('diagram_match_locations.jpg', result)

# Show the template and the final output
cv2.imshow("Template_thresh", temp_thresh)
cv2.imshow("Image_thresh", img_thresh)
cv2.imshow("After NMS", result)
cv2.waitKey(0)

# destroy all the windows manually to be on the safe side
cv2.destroyAllWindows()
```

Result: