I'm trying to create a UDP server which can send messages to all the clients that send messages to it. The real situation is a little more complex, but it's simplest to imagine it as a chat server: everyone who has sent a message before receives all the messages that are sent by other clients.

All of this is done via UdpClient, in separate processes. (All network connections are within the same system though, so I don't think the unreliability of UDP is an issue here.)

The server code is a loop like this (full code later):

var udpClient = new UdpClient(9001);
while (true)
{
    var packet = await udpClient.ReceiveAsync();
    // Send to clients who've previously sent messages here
}

The client code is simple too - again, this is slightly abbreviated, but full code later:

var client = new UdpClient();
client.Connect("127.0.0.1", 9001);
await client.SendAsync(Encoding.UTF8.GetBytes("Hello"));
await Task.Delay(TimeSpan.FromSeconds(15));
await client.SendAsync(Encoding.UTF8.GetBytes("Goodbye"));
client.Close();

This all works fine until one of the clients closes its UdpClient (or the process exits).

The next time another client sends a message, the server tries to propagate that to the now-closed original client. The SendAsync call for that doesn't fail - but then when the server loops back to ReceiveAsync, that fails with an exception, and I haven't found a way to recover.

If I never send a message to the client that's disconnected, I never see the problem. Based on that I've also created a "fails immediately" repro which just sends to an endpoint assumed not to be listening, and then tries to receive. This fails with the same exception.

Exception:

System.Net.Sockets.SocketException (10054): An existing connection was forcibly closed by the remote host.
   at System.Net.Sockets.Socket.AwaitableSocketAsyncEventArgs.CreateException(SocketError error, Boolean forAsyncThrow)
   at System.Net.Sockets.Socket.AwaitableSocketAsyncEventArgs.ReceiveFromAsync(Socket socket, CancellationToken cancellationToken)
   at System.Net.Sockets.Socket.ReceiveFromAsync(Memory`1 buffer, SocketFlags socketFlags, EndPoint remoteEndPoint, CancellationToken cancellationToken)
   at System.Net.Sockets.Socket.ReceiveFromAsync(ArraySegment`1 buffer, SocketFlags socketFlags, EndPoint remoteEndPoint)
   at System.Net.Sockets.UdpClient.ReceiveAsync()
   at Program.<Main>$(String[] args) in [...]

Environment:

- Windows 11, x64

- .NET 6

Is this expected behaviour? Am I using UdpClient incorrectly on the server side? I'm fine with clients not receiving messages after they've closed their UdpClient (that's to be expected), and in the "full" application I'll tidy up my internal state to keep track of "active" clients (who have sent packets recently), but I don't want one client closing a UdpClient to bring down the whole server.

Repro code

The default .NET 6 console app project template is fine for all projects. The simplest example comes first, but if you want to run a server and client, they're shown afterwards. Run the server in one console and the client in another. Once the client has finished, run it again (so that it tries to send to the now-defunct original client).

Deliberately broken server (fails immediately)

Based on the assumption that it really is the sending that's causing the problem, this is easy to reproduce in just a few lines:

using System.Net;
using System.Net.Sockets;

// Nothing is assumed to be listening on port 12346
var badEndpoint = new IPEndPoint(IPAddress.Parse("127.0.0.1"), 12346);
var udpClient = new UdpClient(12345);
await udpClient.SendAsync(new byte[10], badEndpoint);
// Fails with SocketException (10054), as shown above
await udpClient.ReceiveAsync();

Server

using System.Net;
using System.Net.Sockets;
using System.Text;

var udpClient = new UdpClient(9001);
var endpoints = new HashSet<IPEndPoint>();

try
{
    while (true)
    {
        // Log already prefixes the timestamp, so don't add another one here
        Log("Waiting to receive packet");
        var packet = await udpClient.ReceiveAsync();
        var buffer = packet.Buffer;
        var clientEndpoint = packet.RemoteEndPoint;
        endpoints.Add(clientEndpoint);
        Log($"Received {buffer.Length} bytes from {clientEndpoint}: {Encoding.UTF8.GetString(buffer)}");

        // Forward the packet to every other client we've heard from
        foreach (var otherEndpoint in endpoints)
        {
            if (!otherEndpoint.Equals(clientEndpoint))
            {
                await udpClient.SendAsync(buffer, otherEndpoint);
            }
        }
    }
}
catch (Exception e)
{
    Log($"Failed: {e}");
}

void Log(string message) =>
    Console.WriteLine($"{DateTime.UtcNow:HH:mm:ss.fff}: {message}");
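The question mentions that the full application will keep track of "active" clients (those that have sent packets recently). One possible shape for that, sketched under my own assumptions, replaces the server's HashSet<IPEndPoint> with a last-seen timestamp per endpoint. RecordAndGetRecipients and the 30-second timeout are hypothetical, and the current time is passed in explicitly only so the logic is easy to test. This only limits how long the server keeps sending to a closed client; a send within the timeout window can still provoke the ReceiveAsync failure described above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;

// Hypothetical replacement for the server's HashSet<IPEndPoint>: remember when each
// client was last heard from, and forget clients that have been quiet too long.
var lastSeen = new Dictionary<IPEndPoint, DateTime>();
var inactivityTimeout = TimeSpan.FromSeconds(30); // assumed value; tune as needed

// Record a packet from `sender` at `now`, drop stale clients, and return the
// endpoints the packet should be forwarded to (every active client except the sender).
List<IPEndPoint> RecordAndGetRecipients(IPEndPoint sender, DateTime now)
{
    lastSeen[sender] = now;
    var stale = lastSeen.Where(kv => now - kv.Value > inactivityTimeout)
                        .Select(kv => kv.Key)
                        .ToList();
    foreach (var endpoint in stale)
    {
        lastSeen.Remove(endpoint);
    }
    return lastSeen.Keys.Where(e => !e.Equals(sender)).ToList();
}

// Example with made-up endpoints and times.
var alice = new IPEndPoint(IPAddress.Loopback, 50001);
var bob = new IPEndPoint(IPAddress.Loopback, 50002);
var start = new DateTime(2022, 1, 1, 0, 0, 0, DateTimeKind.Utc);

RecordAndGetRecipients(alice, start);
var toBob = RecordAndGetRecipients(bob, start.AddSeconds(10)); // alice is still active
var later = RecordAndGetRecipients(bob, start.AddSeconds(60)); // alice has gone quiet
Console.WriteLine(toBob.Count); // 1
Console.WriteLine(later.Count); // 0
```

Passing the clock in, rather than reading DateTime.UtcNow inside the function, keeps the pruning rule deterministic; the real server loop would pass DateTime.UtcNow and iterate over the returned list instead of the HashSet.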

Client

(I previously had a loop actually receiving the packets sent by the server, but that doesn't seem to make any difference, so I've removed it for simplicity.)

using System.Net.Sockets;
using System.Text;

Guid clientId = Guid.NewGuid();
var client = new UdpClient();

Log("Connecting UdpClient");
client.Connect("127.0.0.1", 9001);
await client.SendAsync(Encoding.UTF8.GetBytes($"Hello from {clientId}"));
await Task.Delay(TimeSpan.FromSeconds(15));
await client.SendAsync(Encoding.UTF8.GetBytes($"Goodbye from {clientId}"));
client.Close();
Log("UdpClient closed");

void Log(string message) =>
    Console.WriteLine($"{DateTime.UtcNow:HH:mm:ss.fff}: {message}");

CodePudding user response:

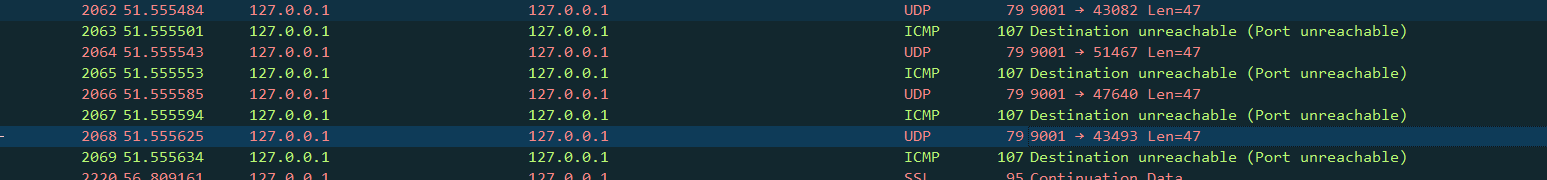

OK, so as you may be aware, if a host receives a packet for a UDP port that is not currently bound, it may send back an ICMP "Port Unreachable" message. Whether or not it does this depends on the firewall, private/public network settings, etc. On localhost, however, it will pretty much always send this packet back.

In your server code, you are calling SendAsync to old clients, which prompts these "port unreachable" messages.

Now, on Windows, by default, a received ICMP Port Unreachable message is reported back to the UDP socket whose send triggered it: the next time you try to receive on that socket, the receive fails with WSAECONNRESET (10054), which is exactly the SocketException you're seeing.

Obviously, this causes a headache in the multi-client, single server socket set-up you have here, but luckily there is a fix.

You need to use the rarely needed SIO_UDP_CONNRESET Winsock control code, which lets you turn off this built-in reporting behaviour.
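The control code itself isn't magic. As far as I can tell from the Windows SDK headers, SIO_UDP_CONNRESET is defined as _WSAIOW(IOC_VENDOR,12), and _WSAIOW(x,y) expands to (IOC_IN|(x)|(y)). This short sketch just reproduces that arithmetic in C# and prints the result; the local names raw and sioUdpConnReset are my own.

```csharp
using System;

// Values as defined in the Windows SDK headers (ws2def.h / mswsock.h):
//   #define IOC_IN                 0x80000000
//   #define IOC_VENDOR             0x18000000
//   #define _WSAIOW(x,y)           (IOC_IN|(x)|(y))
//   #define SIO_UDP_CONNRESET      _WSAIOW(IOC_VENDOR,12)
const uint IOC_IN = 0x80000000U;
const uint IOC_VENDOR = 0x18000000U;

uint raw = IOC_IN | IOC_VENDOR | 12;       // 0x9800000C
int sioUdpConnReset = unchecked((int)raw); // Socket.IOControl takes the code as an int

Console.WriteLine($"0x{raw:X8}");          // 0x9800000C
Console.WriteLine(sioUdpConnReset);        // -1744830452
```

The high bit (IOC_IN) is set, which is why the value becomes negative once reinterpreted as an int and why the cast has to be unchecked.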

I don't believe this ioctl code is available in .NET's IOControlCode enum, but you can define it yourself and pass it to Socket.IOControl as an int. If you put the following code at the top of your server repro (replacing its existing udpClient declaration), the error no longer gets raised.

const uint IOC_IN = 0x80000000U;
const uint IOC_VENDOR = 0x18000000U;

// Controls whether UDP PORT_UNREACHABLE messages are reported.
const int SIO_UDP_CONNRESET = unchecked((int)(IOC_IN | IOC_VENDOR | 12));

var udpClient = new UdpClient(9001);
// The input value is a Winsock BOOL (4 bytes); FALSE disables the reporting.
udpClient.Client.IOControl(SIO_UDP_CONNRESET, new byte[] { 0, 0, 0, 0 }, null);
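If the same server code might also run on other platforms, it may be worth guarding the call, since SIO_UDP_CONNRESET is a Winsock-specific control code. Here is a minimal sketch of that, not a definitive implementation: CreateServerUdpClient is a hypothetical helper name, port 12346 is assumed to have no listener (as in the "fails immediately" repro), and the 200 ms delay is an arbitrary allowance for any ICMP reply to arrive. It sends to the dead port, then has a second UdpClient send it a datagram, and checks that ReceiveAsync returns that datagram instead of throwing.

```csharp
using System;
using System.Net;
using System.Net.Sockets;
using System.Text;
using System.Threading.Tasks;

// Hypothetical helper: creates a UdpClient bound to `port` and, on Windows only,
// turns off reporting of ICMP Port Unreachable messages via SIO_UDP_CONNRESET.
static UdpClient CreateServerUdpClient(int port)
{
    // Same value as IOC_IN | IOC_VENDOR | 12
    const int SIO_UDP_CONNRESET = unchecked((int)0x9800000C);
    var client = new UdpClient(port);
    if (OperatingSystem.IsWindows())
    {
        // The input value is a Winsock BOOL (4 bytes); FALSE disables the reporting.
        client.Client.IOControl(SIO_UDP_CONNRESET, new byte[] { 0, 0, 0, 0 }, null);
    }
    return client;
}

// Demo mirroring the "fails immediately" repro.
using var server = CreateServerUdpClient(0); // 0 = let the OS pick a free port
var serverEndpoint = (IPEndPoint)server.Client.LocalEndPoint!;
var deadEndpoint = new IPEndPoint(IPAddress.Loopback, 12346); // assumed to have no listener
await server.SendAsync(new byte[10], deadEndpoint);
await Task.Delay(200); // give any ICMP reply time to arrive before receiving

// Send the server a real datagram from a separate socket.
using var sender = new UdpClient();
await sender.SendAsync(Encoding.UTF8.GetBytes("still alive"),
    new IPEndPoint(IPAddress.Loopback, serverEndpoint.Port));

// Without the ioctl, on Windows this receive would be expected to throw SocketException (10054).
var result = await server.ReceiveAsync();
string received = Encoding.UTF8.GetString(result.Buffer);
Console.WriteLine(received);
```

Keeping the ioctl inside a factory method means the main receive loop doesn't need to change at all; the OperatingSystem.IsWindows() check simply skips the call where the control code has no meaning.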